Gears 5 system requirements, settings, benchmarks, and performance analysis

Gears 5 is more demanding than Gears 4, but it can still run on a wide range of hardware.

Gears of War is back for another round and, as with the previous game, it's launching simultaneously on Xbox and Windows. This time you don't have to deal with the Microsoft Store, since the game is also available on Steam. (Note that I'm testing the Microsoft Store version.) The arrival of Gears 5 made me realize it has been nearly three years since the last installment, and I'm certainly curious to see how much things have changed in terms of performance and graphics quality compared to Gears of War 4.

As our partner for these detailed performance analyses, MSI provided the hardware we needed to test Gears 5 on a bunch of different AMD and Nvidia GPUs, multiple CPUs, and several laptops. See below for the full details, along with our Performance Analysis 101 article. Thanks, MSI!

Hardware has changed quite a bit in three years. In late 2016, the fastest graphics card around was the GTX 1080, and on the AMD side we were looking at the R9 Fury X. Now we're looking at Nvidia's RTX cards (though the ray tracing abilities aren't used by Gears 5) and AMD's RX 5700 as the latest and greatest. Gears 4 couldn't manage a playable 60 fps at 4K back in the day, but perhaps Gears 5 will do better. Or maybe it's just that we have GPUs that are basically twice as fast (and over twice as expensive).

Before we get to the testing, a few items are worth mentioning. First, AMD is promoting Gears 5. It will be one of the first games to support AMD's new FidelityFX (Borderlands 3 being another), and AMD sent out a press release detailing a few other features. Plus, you can play Gears 5 "free" via Microsoft's Xbox Game Pass, and you get a free 3-month subscription with the purchase of an AMD RX 5700 series GPU. And last, Gears 5 appears to be DirectX 12 only, and in many DX12 games AMD graphics cards tend to perform better relative to their Nvidia competition.

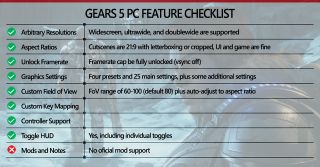

Here's a quick look at what the PC version of Gears 5 has to offer.

Gears 5 basically ticks off every bullet point except one: mods. That's been the case since the first Gears of War and I don't expect it to change any time soon. Otherwise, the PC version has pretty much everything you need.

You can play at any resolution, field of view adjustments are available, and the cutscenes are even in 21:9 by default (which means the sides either get cropped or you get letterboxing on a 16:9 display, and there's a menu option that lets you choose). And then there's the graphics settings list, which goes above and beyond anything else.

I'll cover the settings below in more detail, but Gears 5 makes sure that even people who don't know what anisotropic filtering or anti-aliasing are can easily see what they do via its preview feature. It's pretty cool and actually useful. The only real problem is potential information overload—25 different settings, plus some additional tweaks that don't really affect performance, but can change the way the game looks. Do you want standard bloom or anamorphic bloom? Do you even care?

It's worth noting that Gears 5 will use your desktop resolution combined with screen scaling by default. If you have a 4K monitor but want to play at 1080p for performance reasons, you're actually better off changing your desktop resolution to 1080p and then launching the game—or you can even change the desktop resolution while playing and Gears 5 won't miss a beat, adjusting to the resolution. All testing was done with the desktop resolution set to the desired rendering resolution.

Gears 5 PC system requirements

The official system requirements for Gears 5 are relatively tame, though there's no specific mention of expected performance. Based on my test results (see below), I'd assume the minimum specs are for 1080p low at a steady 30 fps, though you might even get closer to 60 fps. The recommended specs meanwhile look like they should be good for 1080p medium at 60 fps, possibly even bumping up a few settings to high. Finally, the ideal specs should handle 1440p at ultra quality and still deliver 60 fps. Here are the system requirements:

Minimum:

- CPU: Intel 6th Gen Core i3 or AMD FX-6000

- GPU: Nvidia GeForce GTX 760/1050 or AMD Radeon R9 280/RX 560

- RAM: 6GB

- VRAM: 2GB

- Storage: 80GB free

- OS: Windows 10 May 2019 update (version 1903 or later)

- OS: Windows 7/10 64-bit for Steam version

Recommended:

- CPU: Intel 6th Gen Core i5 or AMD Ryzen 3

- GPU: Nvidia GeForce GTX 970/1660 Ti or AMD Radeon RX 570/5700

- RAM: 8GB

- VRAM: 4GB

- Storage: 80GB free

- OS: Windows 10 May 2019 update

- OS: Windows 7/10 64-bit for Steam version

Ideal:

- CPU: Intel 6th Gen Core i7 or AMD Ryzen 7

- GPU: Nvidia GeForce RTX 2080 or AMD Radeon VII

- RAM: 16GB

- VRAM: 8GB

- Storage: SSD plus 100GB free

- OS: Windows 10 May 2019 update

- OS: Windows 7/10 64-bit for Steam version

The specs cover quite a range of performance and hardware, and if a 6th Gen Core i3 or FX-series CPU will suffice, you can probably get by with a 2nd Gen Core i5/i7 as well. There are also some serious discrepancies, like the fact that the GTX 970 and GTX 1660 Ti are in completely different classes, and the same goes for the RX 570 and RX 5700. The ideal specs do look pretty hefty, but based on my testing Gears 5 should be playable at 60 fps and high or ultra settings on quite a few GPUs.
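For a quick sanity check of a system against those three tiers, here's a minimal Python sketch. It only compares the RAM, VRAM, and storage figures from the lists above. The names `TIERS` and `highest_tier` are hypothetical, and the CPU and GPU model lines are ignored because they don't reduce to a single number.

```python
# RAM, VRAM, and free storage (all in GB) taken from the spec lists above.
# CPU/GPU models are deliberately left out: they don't reduce to one number.
TIERS = [
    ("minimum", {"ram": 6, "vram": 2, "storage": 80}),
    ("recommended", {"ram": 8, "vram": 4, "storage": 80}),
    ("ideal", {"ram": 16, "vram": 8, "storage": 100}),
]

def highest_tier(ram, vram, storage):
    """Return the highest tier whose memory/storage figures are all met, or None."""
    best = None
    for name, need in TIERS:
        if ram >= need["ram"] and vram >= need["vram"] and storage >= need["storage"]:
            best = name
    return best

print(highest_tier(ram=16, vram=6, storage=120))  # recommended
```

A 6GB card with 16GB of system RAM lands in the recommended tier here, because the ideal tier asks for 8GB of VRAM.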

Gears 5 will also work on Windows 7, provided you purchase the Steam version. The Steam page says Win7 is supported, while Microsoft says you need the latest version of Windows 10, specifically the May 2019 update (version 1903) or later, along with DirectX 12, but the MS requirements appear to be specifically for the Microsoft Store version. MS ported most of DX12 to Windows 7, and it's nice to see them supporting the older but still popular OS with this latest release.

Gears 5 settings overview

Gears 5 almost sets a new record for the number of graphics options. There are 25 main options, with another five or so tweaks (e.g., a bit less or more sharpening than the default). That's a lot of settings to sift through, but Gears 5 wins major kudos for letting you preview how most of the settings affect the way the game looks. Not sure whether volumetric lighting should be turned up or down? Check out the preview. It also suggests how much of an impact each setting can have on performance, though the estimates are a bit vague. Each setting lists one of "none/minor/moderate/major" for the impact on your GPU, CPU, and VRAM.

What does that actually mean? I checked performance with each setting turned down to minimum using the RX 5700 and RTX 2060 and compared that to the ultra preset, which generated the above charts. That provides a more precise estimate of performance. Gears 5 includes a built-in benchmark that delivers generally consistent results, so the measured changes should be reasonably accurate (within 1-2 percent). However, I only tested with one CPU and two relatively potent graphics cards (6GB of GDDR6 on the RTX 2060, 8GB on the RX 5700), so some settings may have a greater impact on lower spec hardware.

Because there are so many individual settings, I'm only going to cover the ones that cause the greatest change in performance (at least 5 percent). My baseline performance is using the ultra preset at 1080p, and then dropping each setting to the minimum value. You can refer to the above charts for the RX 5700 and RTX 2060 for the full test results, but here are the main highlights.
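The math behind those per-setting numbers is simple. Here's a minimal sketch in Python. The fps values are made-up placeholders for illustration, not measured results, and `percent_gain` is a hypothetical helper.

```python
def percent_gain(baseline_fps, tweaked_fps):
    """Percent change in average fps relative to the ultra-preset baseline."""
    return (tweaked_fps - baseline_fps) / baseline_fps * 100.0

# Placeholder numbers only (not measured results): ultra baseline vs. one
# setting dropped to its minimum value.
baseline = 100.0
runs = {"Setting A": 110.0, "Setting B": 102.0, "Setting C": 126.0}

THRESHOLD = 5.0  # only report settings that move performance at least 5 percent

for name, fps in runs.items():
    gain = percent_gain(baseline, fps)
    if gain >= THRESHOLD:
        print(f"{name}: +{gain:.0f}%")
```

With these placeholder inputs, only Setting A and Setting C clear the 5 percent cutoff.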

Changing World Texture Detail to low can improve performance by up to 10 percent, but it can also make the world look a lot blurrier. Dropping a notch to high instead of ultra can still improve performance a few percent with very little change in image quality.

Dropping Texture Filtering to 2x can improve performance by up to 7 percent, and in motion you probably wouldn't notice the difference between 2x and 16x anisotropic filtering.

Reducing World LOD and Foliage LOD are each good for up to 5 percent more performance, but the reduction in object detail can be noticeable. I'd try to keep these at high, or at least medium, unless your hardware is really struggling.

Dynamic Shadow Quality has the single biggest impact on performance: the low setting can boost performance by up to 26 percent, but each step down is very visible, and the low setting makes everything look flat. Try to keep this at high or medium if possible.

Turning off Ambient Occlusion can improve performance by 3-5 percent, but also makes things look a bit flat. It's less noticeable than the drop in dynamic shadow quality, however.

Setting Tessellation Quality to low can boost performance by up to 10 percent, but it removes a lot of detail from some surfaces. I'd try for at least the medium setting.

Disabling Depth of Field can improve frame rates by around 6 percent, and some people prefer leaving this off to make background areas less blurry.

Finally, turning off Tiled Resources boosted performance by up to 10 percent, but minimum fps, particularly on the first pass, took a severe hit. I'd leave it on unless you have an older GPU where it's specifically causing problems.

Everything else only changed performance by 0-3 percent, though in aggregate the gains can be larger. Still, if you're at the point where you need to start turning down the remaining settings, you're probably better off just using the low preset and maybe tweaking from there.

One important setting to point out is the minimum framerate option. All of my testing was done with this set at "none." Setting a 30, 60, or 90 fps target for minimum framerate will dynamically scale quality and perhaps resolution to attempt to maintain the target performance. If your minimum framerate is set at 60, don't be surprised if you average close to 60 fps ... but on lower end hardware, it's definitely not running the same workload.
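How The Coalition implements this internally isn't documented here, but the general idea behind a framerate target can be sketched as a simple feedback loop. The following Python is an illustrative assumption, not the game's actual algorithm: `next_render_scale`, the step size, and the 50 percent floor are all hypothetical.

```python
def next_render_scale(scale, frame_ms, target_fps, step=0.05, floor=0.5):
    """Nudge the render scale toward a frame-time budget (hypothetical controller)."""
    budget_ms = 1000.0 / target_fps
    if frame_ms > budget_ms:          # too slow: render fewer pixels
        scale -= step
    elif frame_ms < 0.9 * budget_ms:  # comfortable headroom: restore quality
        scale += step
    return max(floor, min(1.0, scale))

scale = 1.0
for frame_ms in [22.0, 21.0, 19.5, 18.0, 16.0, 14.0]:  # made-up frame times
    scale = next_render_scale(scale, frame_ms, target_fps=60)
    print(f"{frame_ms:.1f} ms -> render scale {scale:.2f}")
```

The point of the sketch is that a slow GPU reporting 60 fps with a target enabled is rendering fewer pixels (or less detail) to get there, which is why the target stays at "none" for benchmarking.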

Gears 5 graphics card benchmarks

As noted already, Gears 5 has a built-in benchmark, which makes it possible for others to compare performance with my numbers. I have updated testing results using the latest Nvidia 436.30 and AMD 19.9.2 drivers, both of which are officially game ready for Gears 5.

There's a caveat with the built-in benchmark: often (especially after the first run), the first 8 seconds or so of the benchmark sequence will have an artificial fps cap. I don't know the root cause, but I had to wait about 10 seconds and then restart the benchmark to get correct results on many of the tests. If you see the fps jump from 30-60 to some substantially higher number in the first 10 seconds, pause and restart the benchmark.
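If you're logging fps while the benchmark runs, a quick check can flag the suspicious runs. This is a minimal sketch under a few assumptions: one fps sample per second, a 10-second window, and a hypothetical 1.5x jump threshold that you'd want to tune.

```python
def looks_capped(fps_per_second, window=10, jump_ratio=1.5):
    """Flag a run whose opening seconds sit well below the rest of the run."""
    head = fps_per_second[:window]
    tail = fps_per_second[window:]
    if not head or not tail:
        return False  # not enough data to compare
    head_avg = sum(head) / len(head)
    tail_avg = sum(tail) / len(tail)
    return tail_avg >= jump_ratio * head_avg

# Made-up samples: 8 seconds stuck near 60 fps, then a jump.
capped_run = [60] * 8 + [118, 121] + [120] * 20
clean_run = [115, 122, 119, 121] * 8

print(looks_capped(capped_run))  # True
print(looks_capped(clean_run))   # False
```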

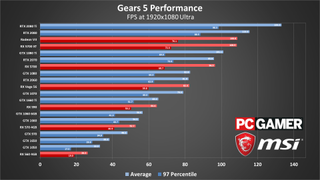

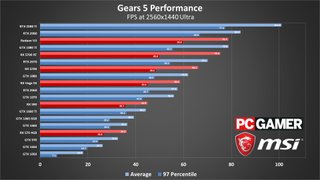

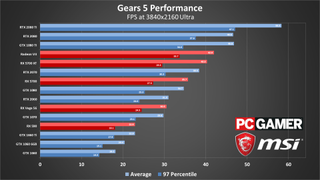

For 1080p I'm looking at all four presets: low, medium, high, and ultra. I'm also testing 1440p and 4K using the ultra preset. Frametime data was captured using the FrameView utility from Nvidia, which is a slightly more user friendly take on the open source PresentMon.
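Turning a frametime log into chart-style numbers is straightforward. Here's a rough sketch, assuming the per-frame times (in milliseconds) have already been pulled out of the capture file. `summarize` is a hypothetical helper, and using the 99th-percentile frametime for the low figure is one common convention, not necessarily the method behind the charts in this article.

```python
def summarize(frametimes_ms, percentile=99.0):
    """Return (average fps, low fps) from a list of per-frame times in ms."""
    if not frametimes_ms:
        raise ValueError("no frames captured")
    # Average fps is total frames over total time, not the mean of per-frame fps.
    avg_fps = 1000.0 * len(frametimes_ms) / sum(frametimes_ms)
    # The slowest frames have the largest frametimes, so take a high percentile.
    ordered = sorted(frametimes_ms)
    index = min(len(ordered) - 1, int(len(ordered) * percentile / 100.0))
    low_fps = 1000.0 / ordered[index]
    return avg_fps, low_fps

# Made-up capture: mostly 10 ms frames with a few 25 ms hitches.
sample = [10.0] * 97 + [25.0] * 3
avg, low = summarize(sample)
print(f"average {avg:.1f} fps, low {low:.1f} fps")  # average 95.7 fps, low 40.0 fps
```

Note how three hitches out of a hundred frames barely move the average but cut the low figure to 40 fps, which is why both numbers are worth reporting.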

All GPUs were provided by our partner MSI and are listed in the above real-time pricing tables as well as below in the tested hardware boxout. I have omitted many GPUs that perform similarly to one of the tested cards—the RTX 2080 Super is only slightly faster than the original RTX 2080, the RTX 2070 Super is slightly slower than the 2080, and the RTX 2060 Super is only slightly slower than the original 2070.

For previous generation hardware, I've limited testing to a few of the most common/popular models. You should be able to interpolate results (e.g., the GTX 1080 lands about midway between the 1070 and 1080 Ti, and the 1070 Ti is midway between the 1080 and 1070).
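As a worked example of that interpolation, here's a tiny Python sketch. The fps figures are placeholders, not measured results, and `midpoint` is a hypothetical helper. Real cards won't land exactly halfway, so treat the output as a ballpark.

```python
def midpoint(slower_fps, faster_fps, fraction=0.5):
    """Linear estimate for an untested card sitting between two tested ones."""
    return slower_fps + fraction * (faster_fps - slower_fps)

# Placeholder numbers only: pretend the GTX 1070 and 1080 Ti were measured.
gtx_1070, gtx_1080_ti = 80.0, 120.0
gtx_1080 = midpoint(gtx_1070, gtx_1080_ti)   # about midway between 1070 and 1080 Ti
gtx_1070_ti = midpoint(gtx_1070, gtx_1080)   # midway between 1070 and 1080
print(gtx_1080, gtx_1070_ti)  # 100.0 90.0
```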

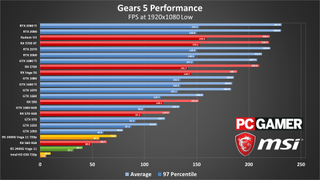

Starting with the 1080p low preset, the minimum spec GTX 1050 and RX 560 manage 60 fps, though just barely in the case of the 560. I don't have any hardware older than that, but I expect cards like the GTX 760 should at least break 30 fps without trouble. The AMD and Nvidia GPUs I've tested generally perform as expected, so the 2060 and 5700 are a close match, and the 1060 and 570 are also close together.

Elsewhere, the fastest GPUs appear to hit a CPU bottleneck of around 230 fps. That's not enough for a 240Hz display, though G-Sync/FreeSync would of course help out. The more common 144Hz displays would be good at least. Everything from the RX 5700 through the 2080 Ti lands in the 210-230 fps range. Considering the 2080 Ti is roughly twice the theoretical performance of the 2060, their similar performance proves we're hitting a CPU or some other bottleneck.
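That reasoning can be written down as a simple check: if a GPU with far more theoretical throughput delivers nearly the same fps, something other than the GPU is the limit. Here's a minimal sketch with a hypothetical 10 percent tolerance. The first call uses the ends of the 210-230 fps range and the roughly 2x theoretical gap mentioned above, while the second uses made-up numbers for a GPU-bound case.

```python
def likely_non_gpu_limit(slow_fps, fast_fps, theoretical_ratio, tolerance=0.10):
    """True if the faster GPU's real gain is tiny next to its theoretical gain."""
    measured_ratio = fast_fps / slow_fps
    # A GPU-bound test should scale with the hardware; a flat result
    # (within `tolerance` of 1.0) despite a big theoretical gap suggests
    # the CPU or another bottleneck is setting the pace.
    return theoretical_ratio > 1.0 + tolerance and measured_ratio <= 1.0 + tolerance

print(likely_non_gpu_limit(210, 230, theoretical_ratio=2.0))  # True
print(likely_non_gpu_limit(60, 115, theoretical_ratio=2.0))   # False
```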

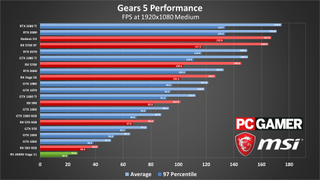

1080p medium is quite the step up in requirements. The budget and midrange GPUs see performance drop by 35-40 percent, though the fastest cards show a smaller dip due to CPU limitations. The minimum GPU for 60 fps is the GTX 1650, and breaking 30 fps is still possible even on the minimum spec GTX 1050 and RX 560. Meanwhile, the RX 5700 and above average 144 fps or more for those with a higher refresh rate display, though minimums are well below that mark.

The AMD cards generally do a bit better than their Nvidia counterparts, though in testing I noticed minimum fps tended to fluctuate a lot more between runs than on the Nvidia cards. The Radeon VII also does very well here, mostly thanks to high minimum framerates. Lower down the chart, the RX 590 also jumps ahead of the 1660 this time.

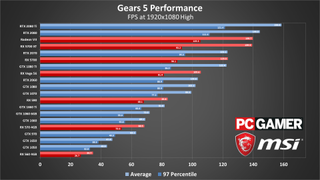

Depending on your GPU, going from the medium to high preset typically drops performance around 18 percent, but the 6GB and 4GB Nvidia Turing GPUs can drop by as much as 30 percent. That appears to be more of a DX12 optimization problem, however, as the 970 and 1050 only drop about 15-18 percent.

Besides the top GPUs, which are still at least somewhat CPU limited, most of the mid-range and slower GPUs are about half their 1080p low performance levels. The GTX 970 and above still average more than 60 fps, and the RX 560 still breaks 30 fps, so a mix of medium to high settings is still playable on most GPUs made in the past several years.

In terms of overall image quality, the high preset is the sweet spot. The difference between medium and high is relatively visible, while it's much more difficult to spot the improvements when going from high to ultra.

1080p high is also where things start to get a bit weird. The GTX 1060 6GB performed better than the GTX 1660 in my testing. That makes no sense from a hardware perspective. The 1060 has 1280 cores running at somewhere around 1850MHz. The GTX 1660 has 1408 cores running at around 1850-1900MHz. The 1660 also has hardware that supports concurrent INT and FP calculations. What gives?

This is the difficulty with low-level APIs like DX12. It appears, based on other GPUs as well, that Microsoft / The Coalition has spent more time optimizing for the Pascal architecture (GTX 10-series) than the new Turing architecture (16-series and RTX cards). The result is lower than expected performance on the Turing cards, at least those with only 4-6GB VRAM, but that could change with game and/or driver updates.
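To put numbers on the hardware comparison, peak FP32 throughput is commonly estimated as cores times clock times two (one fused multiply-add per core per clock). Here's that arithmetic in Python using the core counts quoted above. Using 1850MHz for both cards is a simplifying assumption, and `peak_tflops` is a hypothetical helper.

```python
def peak_tflops(cores, clock_mhz):
    """Theoretical FP32 TFLOPS: cores * clock * 2 ops (one FMA) per clock."""
    return cores * clock_mhz * 1e6 * 2 / 1e12

gtx_1060 = peak_tflops(1280, 1850)
gtx_1660 = peak_tflops(1408, 1850)
print(f"GTX 1060 6GB: {gtx_1060:.2f} TFLOPS")  # 4.74
print(f"GTX 1660:     {gtx_1660:.2f} TFLOPS")  # 5.21
print(f"1660 advantage: {(gtx_1660 / gtx_1060 - 1) * 100:.0f}%")  # 10%
```

On paper the 1660 should be about 10 percent ahead at equal clocks, before counting its concurrent INT and FP hardware, which is what makes the measured result so odd.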

1080p ultra drops performance another 20-25 percent on most cards, though the 2080 Ti still bumps into CPU limits and only drops 16 percent. The 1060 6GB and 1660 come up just a bit short of 60 fps, while the RX 590 generally gets there but with occasional dips below 60 fps. Meanwhile, the GTX 1050 still manages to break 30 fps, which means it's technically playable, though minimum fps is clearly a concern with the 2GB card.

Gears 5 at 1440p ultra is too much for the budget cards, and I didn't bother testing the slowest GPUs. For 60 fps, you'll need at least an RX 5700, RTX 2070, or GTX 1080. If you're just looking for 30 fps or more, however, you can get by with even a GTX 970. Higher refresh rate displays won't do much good, unfortunately, unless you want to drop the graphics settings. Even a 2080 Ti only averages a bit more than 100 fps.

What about 4K ultra? The RTX 2080 Ti nearly gets to 60 fps, and at the high preset it can handle 4K quite well. All the other GPUs fall short of that mark, but with a few tweaks to the settings the RX 5700, GTX 1080, and faster cards should still be okay.

Again, please note that the minimum framerate was set to "none" for these tests. If I set the minimum fps target to 60, most of the GPUs will show close to 60 fps, even at 4K ultra. That's because the game will dynamically scale quality and resolution to hit the desired level of performance. It's not a bad idea to set this to 60, but in terms of benchmarks, it definitely changes the workload and doesn't allow for apples-to-apples comparisons.

Gears 5 CPU benchmarks

I've tested eight different CPUs, including an overclocked i7-8700K. At stock clocks, the 8700K ends up being similar to the Ryzen 7 3700X and Ryzen 9 3900X, while the i9-9900K continues to claim top honors. Previous 2nd gen Ryzen hardware doesn't fare nearly so well.

If you happen to be running an RTX 2080 Ti, a Core i9-9900K can outperform the fastest third gen Ryzen CPU by up to 13 percent, and it beats the i3-8100 and Ryzen 5 2600 by 60 percent at 1080p low. The difference between the CPUs starts to narrow as image quality and resolution are increased, until everything ends up basically tied at 4K ultra.

That 4K ultra chart is also roughly what you'd get with a midrange GPU, only at 1080p medium and above: slower graphics cards make the differences between CPUs far less pronounced. Even the slowest of the CPUs I've tested can easily break 60 fps, and Gears 5 should run well on just about any midrange or higher CPU from the past five to ten years.

Gears 5 laptop benchmarks

Moving over to the gaming notebooks, all three laptops I tested easily break 60 fps at 1080p, regardless of setting, though minimums can dip below 60 at ultra on the 2060 and 2070 Max-Q. If you're sporting a 120Hz or 144Hz display (like these MSI models), 1080p low will also let you make full use of the refresh rate. For higher quality settings, you'd be better off with a G-Sync 120/144Hz display, to avoid tearing.

Desktop PC / motherboards / Notebooks

MSI MEG Z390 Godlike

MSI Z370 Gaming Pro Carbon AC

MSI MEG X570 Godlike

MSI X470 Gaming M7 AC

MSI Trident X 9SD-021US

MSI GE75 Raider 85G

MSI GS75 Stealth 203

MSI GL63 8SE-209

Nvidia GPUs

MSI RTX 2080 Ti Duke 11G OC

MSI RTX 2080 Duke 8G OC

MSI RTX 2070 Gaming Z 8G

MSI RTX 2060 Gaming Z 6G

MSI GTX 1660 Ti Gaming X 6G

MSI GTX 1660 Gaming X 6G

MSI GTX 1650 Gaming X 4G

AMD GPUs

MSI Radeon VII Gaming 16G

MSI Radeon RX 5700 XT

MSI Radeon RX 5700

MSI RX Vega 64 Air Boost 8G

MSI RX Vega 56 Air Boost 8G

MSI RX 590 Armor 8G OC

MSI RX 580 Gaming X 8G

MSI RX 570 Gaming X 4G

MSI RX 560 4G Aero ITX

Final thoughts

Gears 5 isn't the most demanding of games, and at lower settings it can run well even on budget hardware. Relative to the previous game in the series, Gears 5 appears to have reduced framerates by around 25 percent. I'm not sure how that will impact consoles, but on PC at least there are plenty of options to tweak to improve performance.

I did some initial testing with an early access release of Gears 5, but I've since retested with the latest drivers and the final public release of the game. I didn't see any noteworthy changes in performance, though a few cards did drop slightly (mostly Nvidia's RTX line, which is 2-3 percent slower at higher resolutions). Things could still improve over time and it's something I'll keep an eye on, but I haven't encountered any major issues running Gears 5 so far.

As with the previous game, Microsoft and The Coalition have done a good job making Gears 5 run well across a wide range of hardware. Even in these early days, the DirectX 12 code looks to be pretty well optimized ... at least for the older GPUs. Nvidia's Turing GPUs do indicate there's room for improvement, however, as cards like the GTX 1660 shouldn't perform worse than the GTX 1060.

Whether you have a Windows 7 PC and want the game on Steam, or you're running Windows 10 and are fine with the Microsoft Store, Gears 5 runs on a wide variety of hardware. Well, except Intel integrated graphics, which basically choked even at 720p. Bring along any budget GPU or better, however, and you should be good to go.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.
