AMD's top next-gen GPU may not be a multi-chiplet compute monster but that's better for everyone

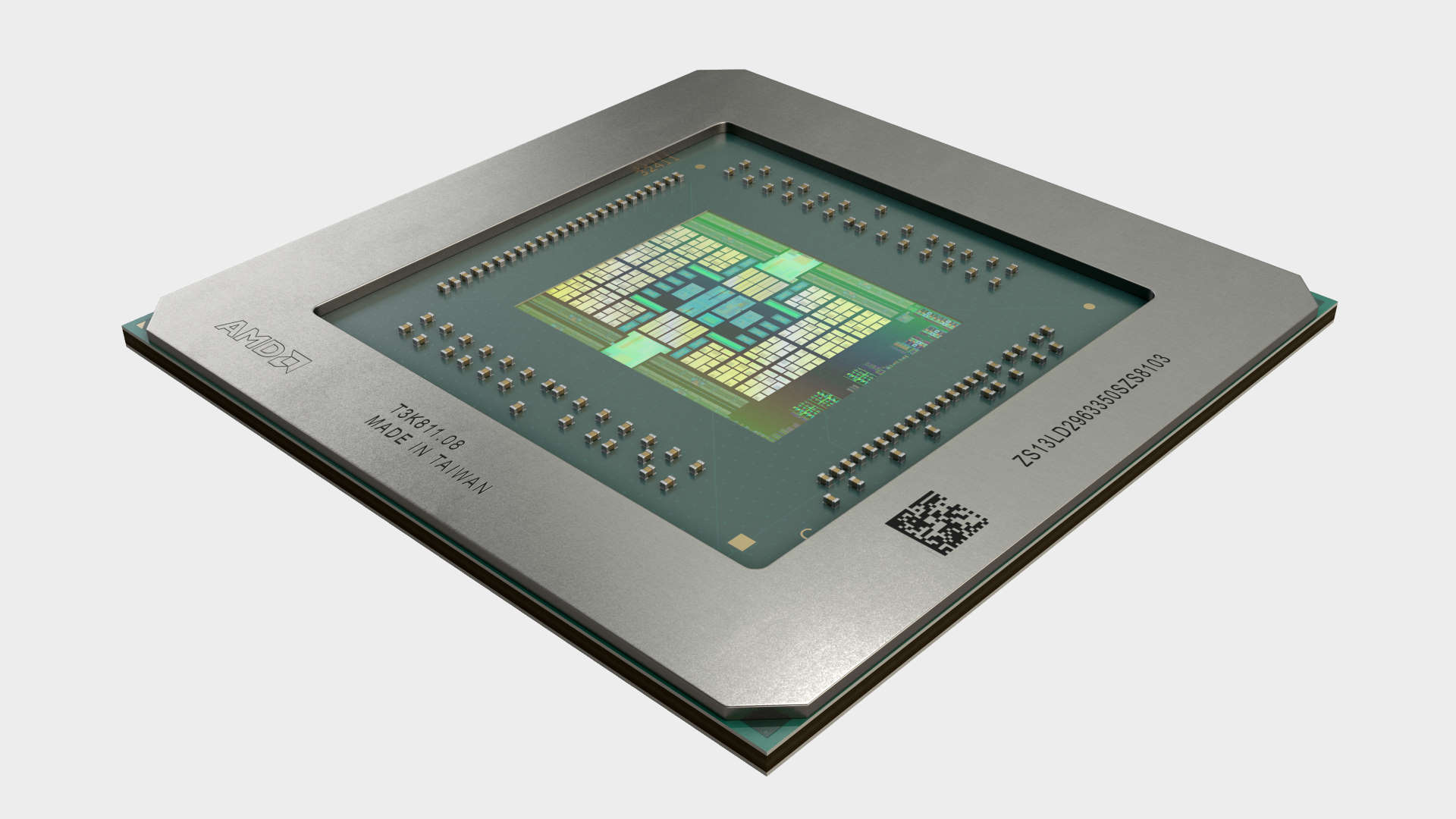

Once rumoured to be a dual GPU chiplet design, it now seems as though Navi 31 is going to have a single, powerful compute die at its heart.

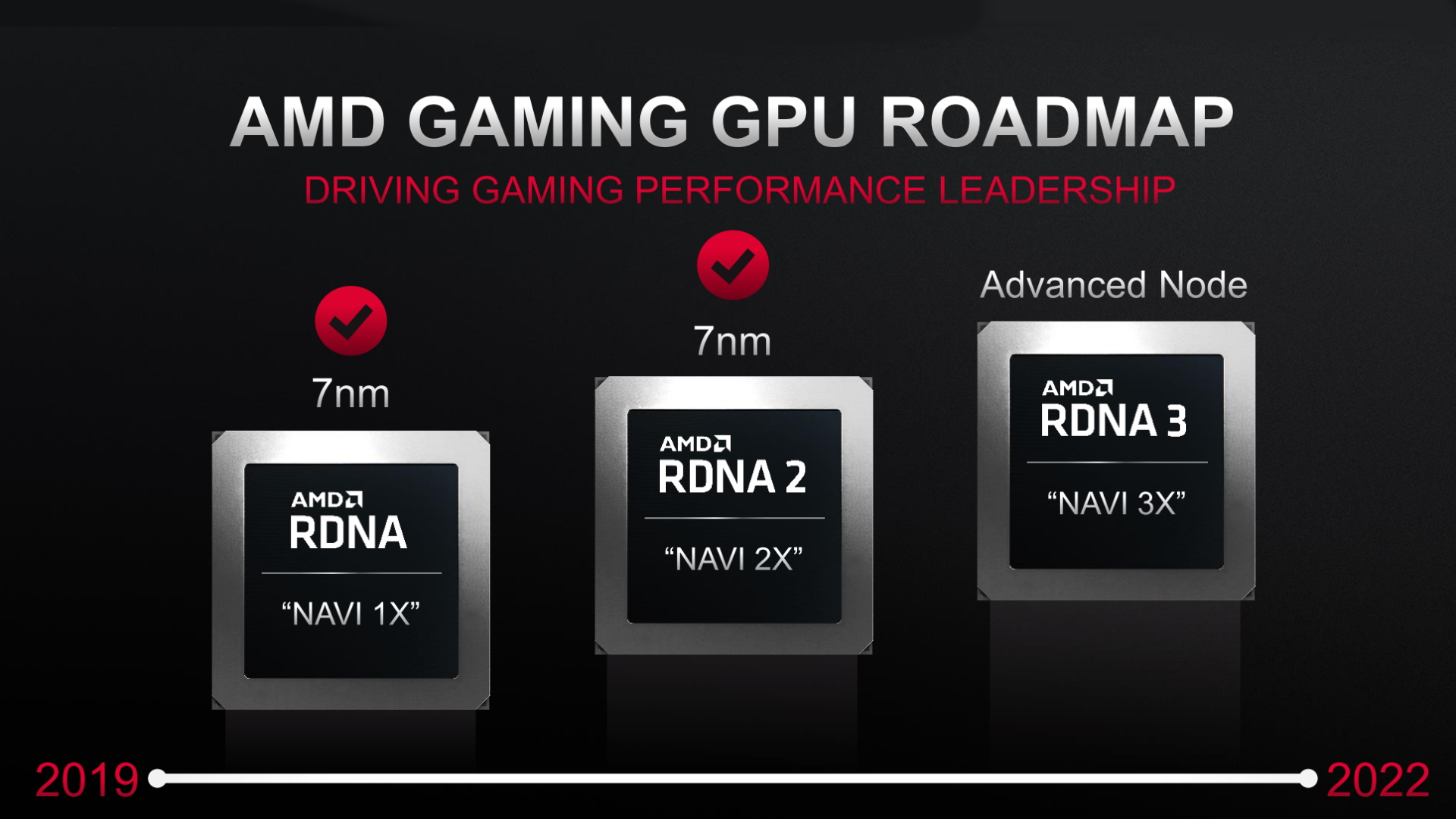

AMD's top RDNA 3 GPU is no longer thought to be a multi-chip GPU, at least not from a graphics compute perspective. The expectation had been that AMD's top GPU of the next generation, its Navi 31 silicon, would be the first to bring the chiplet design of its latest Ryzen CPUs to its graphics cards.

The new rumour that it won't be is, honestly, a bit of a relief.

As AMD's next-gen GPUs get closer to their expected October launch date, and start to ship out to its partners, we'll get ever closer to the leak hype event horizon. That place where fact and fiction start to merge and it all just becomes a frenzy of fake numbers sprinkled with a little light truth.

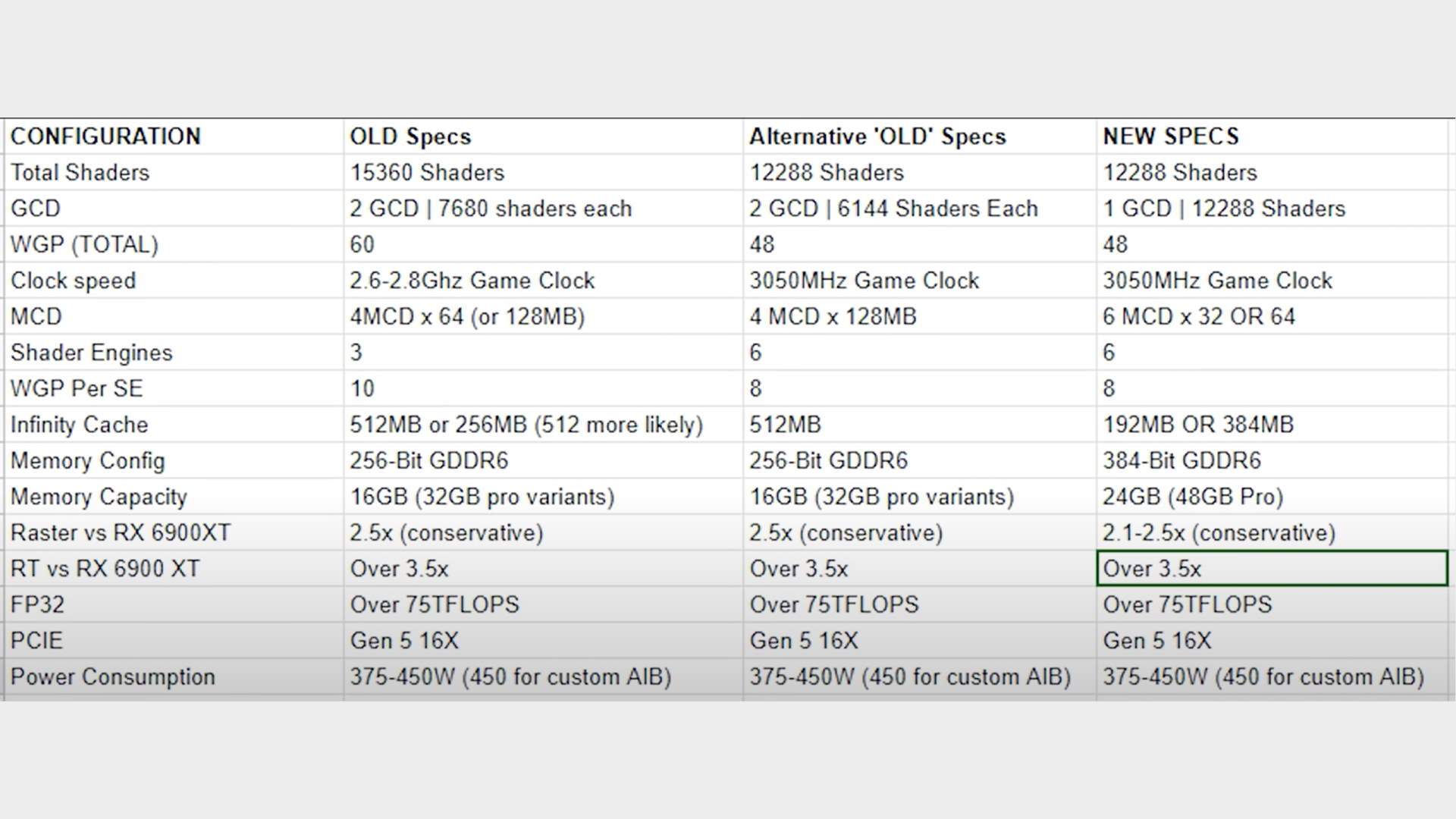

But that doesn't mean things are in any way settled right now. The Twitter x YouTube leak machinery is always grinding away, and AMD's flagship Navi 31 GPU has been given so many theoretical and fanciful specifications that it's hard to keep track of where the general consensus lies right now.

Where once it was a 92 TFLOP beast with some 15,360 shaders, arrayed across a pair of graphics compute dies (GCDs), those specs have already been toned down to 72 TFLOPs and 12,288 shaders. Now we're hearing rumours that all the noise about a dual graphics chiplet design was erroneous, and the reality of the multi-chip design is more about the floating cache than extra compute chips.
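
As a rough sanity check on those rumoured figures, here's a back-of-the-envelope sketch in Python. It assumes the usual convention that each shader retires one fused multiply-add per clock, counted as two FP32 operations; that convention and the function names are assumptions for illustration, not anything taken from the leaks. On that basis, both sets of specs imply a clock speed somewhere around 3GHz.

```python
# Back-of-the-envelope check on the rumoured Navi 31 numbers.
# ASSUMPTION: peak FP32 throughput = shaders x 2 ops (one FMA) x clock.
# The function names here are hypothetical, purely for illustration.

FLOPS_PER_SHADER_PER_CLOCK = 2  # assumed: one fused multiply-add per clock


def implied_clock_ghz(tflops: float, shaders: int) -> float:
    """Clock speed (GHz) needed to hit a given peak TFLOPs figure."""
    flops = tflops * 1e12
    return flops / (shaders * FLOPS_PER_SHADER_PER_CLOCK) / 1e9


def peak_tflops(shaders: int, clock_ghz: float) -> float:
    """Peak FP32 TFLOPs for a given shader count and clock speed."""
    return shaders * FLOPS_PER_SHADER_PER_CLOCK * clock_ghz / 1000


# Original rumour: 92 TFLOPs from 15,360 shaders
print(f"{implied_clock_ghz(92, 15_360):.2f} GHz")  # 2.99 GHz

# Toned-down rumour: 72 TFLOPs from 12,288 shaders
print(f"{implied_clock_ghz(72, 12_288):.2f} GHz")  # 2.93 GHz
```

If the assumption holds, the drop from 92 to 72 TFLOPs is almost entirely down to the lower shader count, with the implied clock speed barely moving.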

The latest video from Red Gaming Tech, seemingly corroborated across the Twitter leakers, is suggesting that the entire 12,288 shader count is going to be housed on a single 5nm GCD, with a total of six 6nm multi-cache dies (MCDs) arrayed around it, or possibly on top of it.

I would honestly have loved it if AMD had managed to create a GPU compute chiplet that could live in a single package, alongside other GPU compute chiplets, and be completely invisible to the system. But, for a gaming graphics card, that's a tall order. For data centre machines, and systems running entirely compute-based workloads—such as in render farms—doubling up a GPU and running tasks across many different chips works. When you have different bits of silicon either rendering different frames or different parts of a frame in-game, well, that's a whole other ask.

And, so far, that has been ultimately beyond our GPU tech overlords. We once had SLI and CrossFire, but it was hard for developers to implement the technology to great effect, so even when they did manage to get a game to run faster rendered across multiple GPUs, the scaling was anything but linear.

You'd spend twice as much buying two graphics cards, to get maybe 30–40% higher frame rates. In certain games. Sometimes more, sometimes less. It was a lottery, a lot of hard work for devs, and ultimately has been entirely abandoned by the industry.
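
To put some numbers on that lottery, here's a quick sketch. The function name and the idea of normalising against a single card are illustrative assumptions, and the 30–40% uplift is just the rough range quoted above, not measured data.

```python
# Rough value-for-money comparison of a two-card setup against one card.
# ASSUMPTION: a single card is the baseline (1.0x performance, 1.0x cost).
# The function name is hypothetical, purely for illustration.


def relative_value(uplift_percent: float, cost_multiple: float) -> float:
    """Performance per unit of money, relative to a single card."""
    relative_performance = 1 + uplift_percent / 100
    return relative_performance / cost_multiple


# Twice the spend for 30% and 40% higher frame rates
print(f"{relative_value(30, 2):.2f}")  # 0.65
print(f"{relative_value(40, 2):.2f}")  # 0.70
```

In other words, a two-card setup delivered roughly 65 to 70% of the frames per pound of a single card, and that's in the games where it worked at all.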

The holy grail is to make a multi-GPU system or chip invisible, so your OS and applications running on it see it as one graphics card.

That was the hope when we first heard rumours that Navi 31 was going to be an MCM GPU, backed up by leaks and job listings. But I'm not going to say that hope wasn't tinged with some trepidation, too.

At some point, someone is going to do it, and we had thought that time was now, with AMD channelling its Zen 2 skills into a chiplet-based GPU. But we figured it was jumping first, ready to take the inevitable hit of adopting a new technology, and whatever unexpected latency issues crop up with different games and myriad gaming PC conflicts. All the while hoping that just chucking a whole load of shaders into the MCM mix would overcome any bottlenecks.

But with the multi-chip design now seemingly being purely about the cache dies, potentially acting as memory controllers themselves, that's going to make it far more straightforward from a system perspective and limit any unforeseen funkiness that might occur.

And it's still going to make for an incredibly powerful AMD graphics card.

Nvidia's new Ada Lovelace GPUs are going to have to watch out, because this generation's going to be a doozy. And we might actually be able to buy them this time around, though probably still at exorbitant prices.

Dave has been gaming since the days of Zaxxon and Lady Bug on the Colecovision, and code books for the Commodore Vic 20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved onto PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.