AMD’s Polaris Shoots for the Stars

Polaris Features and Overview

All of the technology that goes into modern processors is certainly exciting, but in the end we come back to where wheels touch pavement. Having a hugely powerful engine that results in a burnout doesn’t do you much good in a race, just as having the best tires in the world won’t do you much good if you have a weak-sauce engine and transmission. The name of the game is balance: You have to have an architecture that’s balanced between performance, power requirements, and memory bandwidth. For AMD and RTG, that architecture is Polaris.

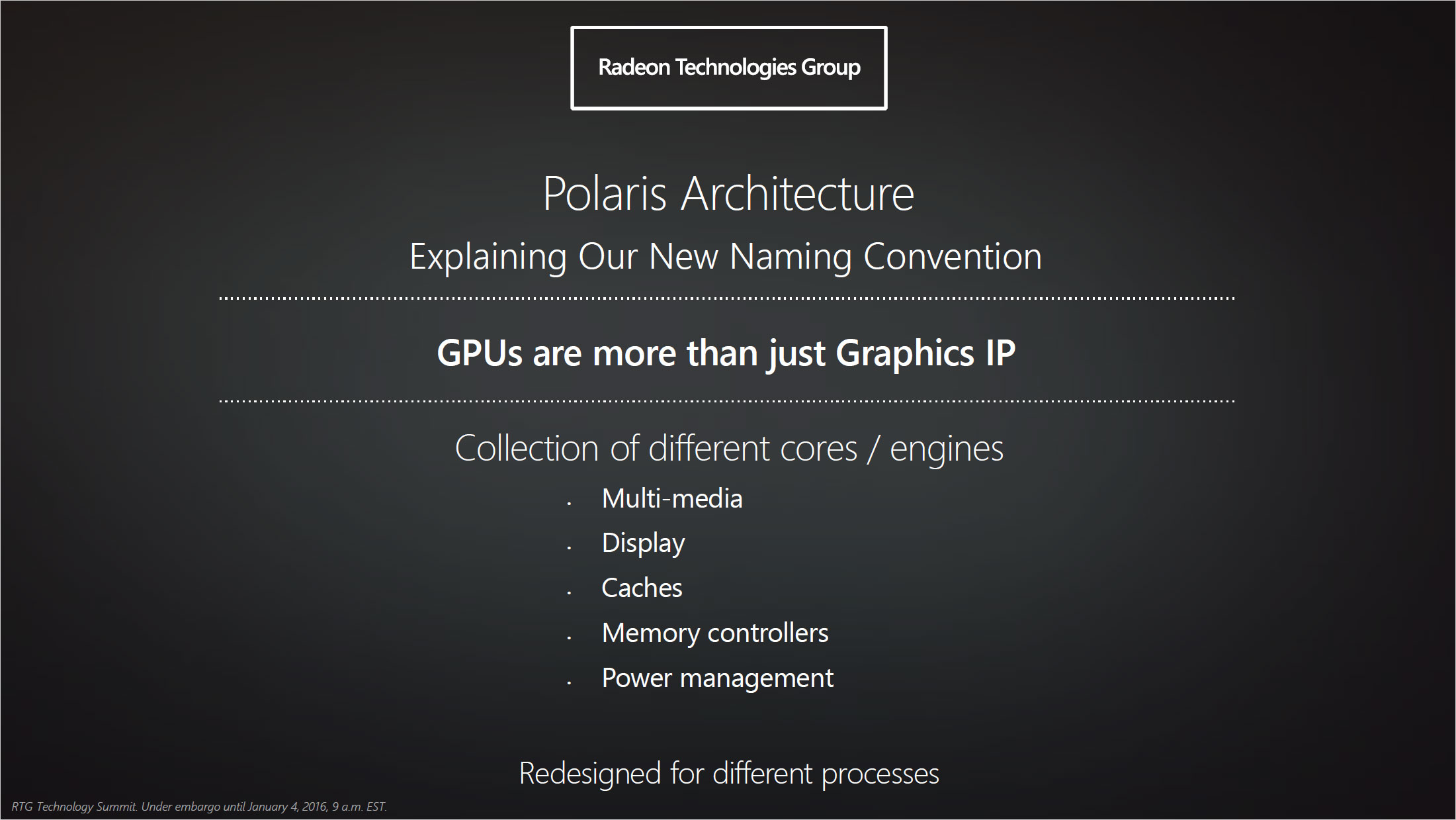

Fundamentally, Polaris is still a continuation of the GCN architecture. We don’t know if that means we’ll still have 64 cores per SM/Compute Unit, but it seems likely. One thing that Polaris will do is bring all of the new GPUs under a single umbrella, giving us a unified set of features and hardware.

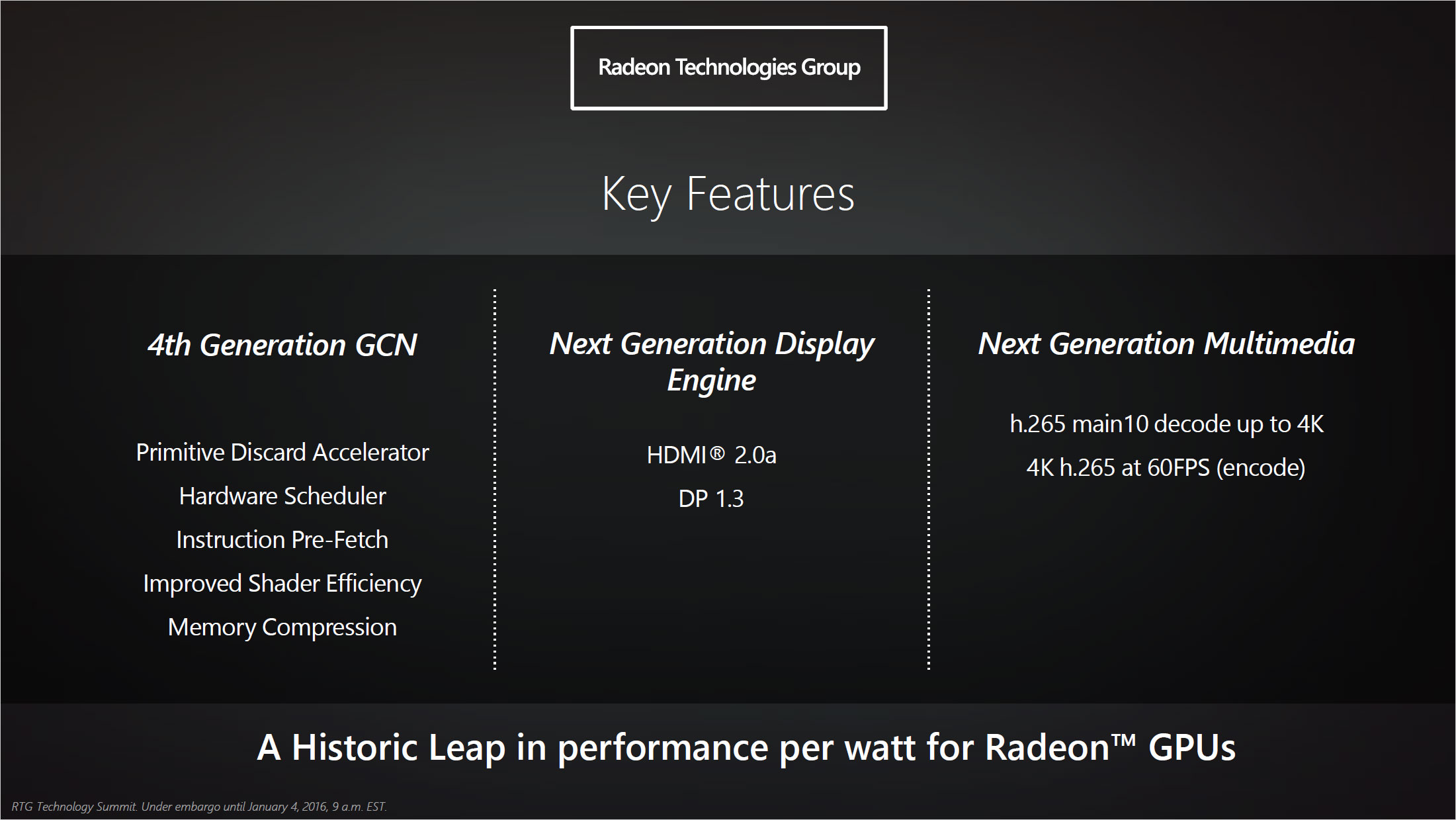

We’re calling the new architecture GCN4, mostly to give us a clean break from the existing GCN1.0/1.1/1.2a/1.2b hardware. Most of those names are “unofficial,” but they were created because AMD changed the features in succeeding generations of GCN hardware. Ultimately, that led to “GCN1.2” designs in Tonga and Fiji that weren’t quite the same; Fiji has an updated video encoder/decoder block, for example. All Polaris GPUs, meanwhile, should inherit the same core features, detailed above.

As far as new items go, we have very little to go on right now. There’s a new primitive discard accelerator, which basically entails rejecting polygons earlier so the GPU doesn’t waste effort rendering what ultimately won’t appear on the screen. There’s also a new memory compression algorithm, refining what was first introduced in Tonga/Fiji. HDMI 2.0a and DP 1.3 will both be present, as will H.265 Main10 decode at 4K and encode at 4Kp60; these tie into the earlier presentation on display technologies. And then there are the scheduler, pre-fetch, and shader efficiency bullet points.
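AMD hasn’t said how the primitive discard accelerator actually works, so the following is only a rough sketch of the general idea of throwing away triangles before they reach the rasterizer. The function names, the counter-clockwise front-face convention, and the three rejection tests are our own assumptions for illustration, not a description of the Polaris hardware.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Triangle = Tuple[Point, Point, Point]


def signed_area(tri: Triangle) -> float:
    """Signed area of a 2D triangle; positive when the vertices
    run counter-clockwise (with the y axis pointing up)."""
    (x0, y0), (x1, y1), (x2, y2) = tri
    return 0.5 * ((x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0))


def should_discard(tri: Triangle, width: float, height: float) -> bool:
    """Return True if the triangle cannot contribute visible pixels.

    Assumed rejection tests (hypothetical, not AMD's actual logic):
      1. zero area (degenerate triangle)
      2. back-facing (clockwise winding under our assumed convention)
      3. bounding box entirely outside the viewport
    """
    if signed_area(tri) <= 0.0:
        return True  # covers both the degenerate and back-facing cases
    xs = [p[0] for p in tri]
    ys = [p[1] for p in tri]
    if max(xs) < 0 or min(xs) > width or max(ys) < 0 or min(ys) > height:
        return True  # completely off screen
    return False


def cull(tris: List[Triangle], width: float, height: float) -> List[Triangle]:
    """Keep only the triangles that survive the early discard tests."""
    return [t for t in tris if not should_discard(t, width, height)]


if __name__ == "__main__":
    scene = [
        ((0.0, 0.0), (10.0, 0.0), (0.0, 10.0)),      # visible, front-facing
        ((0.0, 0.0), (0.0, 10.0), (10.0, 0.0)),      # back-facing
        ((0.0, 0.0), (5.0, 5.0), (10.0, 10.0)),      # degenerate (a line)
        ((-30.0, 0.0), (-10.0, 0.0), (-30.0, 10.0)), # off screen
    ]
    print(len(cull(scene, 1920, 1080)), "of", len(scene), "triangles kept")
```

The earlier in the pipeline a test like this runs, the less work is wasted on geometry that never becomes a pixel, which is presumably the point of dedicating hardware to it.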

It’s not clear how much things are changing, but much of the low-hanging fruit has been plucked in the world of GPUs, so these are likely refinements similar to the gains we see in each generation of CPUs. We asked RTG about the gains we can expect in performance due to the architectural changes as opposed to performance improvements that come from the move to a new FinFET process; it appears FinFET will do more to improve performance than the architectural changes.

This is both good and bad news. The good news is that it means all of the existing optimizations for drivers and the like should work well with GCN4 parts. And let’s be clear, GCN has been a good architecture for AMD, generally keeping them competitive with Nvidia. The problem is that ever since Nvidia launched Maxwell in the fall of 2014, they’ve had a performance and efficiency advantage. The bad news is that if GCN4 isn’t much more than a shrink and minor tweaks to GCN, we can expect Nvidia to do at least that much with Pascal as well, and if Pascal and Polaris continue the current trend, AMD will end up once again going after the value proposition while Nvidia hangs on to the crown. But we won’t know for sure until the new products actually launch.

Showcase Showdown

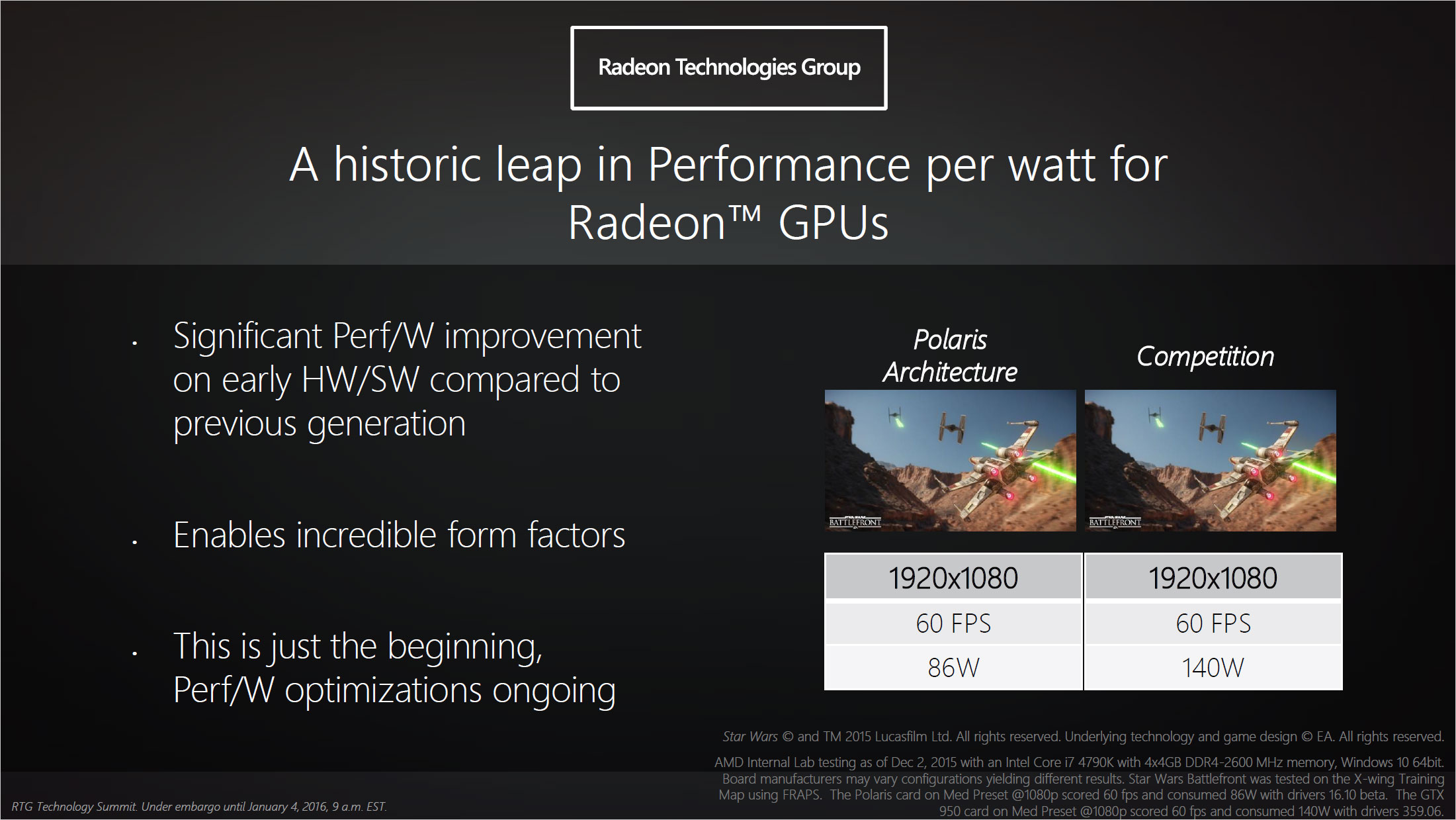

We’ll wrap up with a quick discussion of the hardware demo that AMD showed. Unfortunately, we weren’t allowed to take pictures or actually go hands-on with the hardware, and it was a very controlled demo. In it, AMD had two systems, both using i7-4790K processors. Yeah, that’s how badly AMD’s CPUs need an update; they couldn’t even bring themselves to use an AMD CPU or APU. One system had a GTX 950 GPU and the other had the unnamed Polaris GPU. Both systems were running Star Wars: Battlefront at 1080p Medium settings. V-Sync was enabled, and the two systems were generally hitting 60FPS, matching the refresh rate of the monitor.

While performance in this test scenario was basically “equal,” the point was to show an improvement in efficiency. Measured at the wall as total system power (which probably includes 40-50W for the CPU, motherboard, RAM, etc.), the Nvidia-based system was drawing 140-150W during the test sequence and the AMD-based system was only using 85-90W.

For context, we’ve built plenty of systems with the i7-4790K since it launched, and most of them idle at around 45-50W. The GTX 950 is a 90W TDP part, and with V-Sync it was probably using 60-75W with the remainder going to the rest of the system. To see a system playing a game at decent settings and relatively high performance while only drawing 86W is certainly impressive, as it means the GPU is probably using at most 35W, and possibly as little as 20W.
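The back-of-the-envelope math here is easy to reproduce. The sketch below subtracts the 45-50W idle baseline from the measured wall power to get a ceiling on what each GPU could be drawing; the function name is ours, and using idle power as the floor for the rest of the system is an assumption. Since the CPU and other components pull more than idle while gaming, the real GPU numbers will be lower than these ceilings, which is why our estimates in the text come in under them.

```python
from typing import Tuple

Range = Tuple[float, float]  # (low, high) in watts


def gpu_power_ceiling(system_watts: Range, idle_watts: Range) -> Range:
    """Upper-bound range for GPU power draw.

    Assumes the rest of the system draws at least its idle power,
    so everything above idle is (at most) attributable to the GPU.
    """
    sys_lo, sys_hi = system_watts
    idle_lo, idle_hi = idle_watts
    # Lowest ceiling: low system reading minus the highest idle figure.
    # Highest ceiling: high system reading minus the lowest idle figure.
    return (sys_lo - idle_hi, sys_hi - idle_lo)


if __name__ == "__main__":
    idle = (45.0, 50.0)            # typical i7-4790K system idle, per the text
    polaris_system = (85.0, 90.0)  # measured during the demo
    gtx950_system = (140.0, 150.0)

    print("Polaris GPU ceiling:", gpu_power_ceiling(polaris_system, idle))
    print("GTX 950 GPU ceiling:", gpu_power_ceiling(gtx950_system, idle))
```

Even on this crude basis, the gap between the two systems is roughly 55-60W at the wall with frame rates capped at the same 60FPS.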

RTG discussed notebooks more than desktop chips at the summit, and if they can get GTX 950 levels of performance into a 25W part, laptop OEMs will definitely take notice. We haven’t seen too many AMD GPUs in laptops lately (outside of the upgraded 15-inch Retina MacBook Pro with an R9 M370X, naturally), and we’d love to see more competition in the gaming notebook market. Come June, it looks like AMD could provide exactly that. Let’s just hope we see some higher performance parts as well, as Dream Machine 2016 looms ever nearer.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.