Our Verdict

AMD's fastest graphics card ever, but we wanted it last year.

For

- High performance

- Forward-looking architecture

- Runs quiet at stock

Against

- Requires a lot more power than the competition

- DX11 drivers not as tuned as Nvidia's


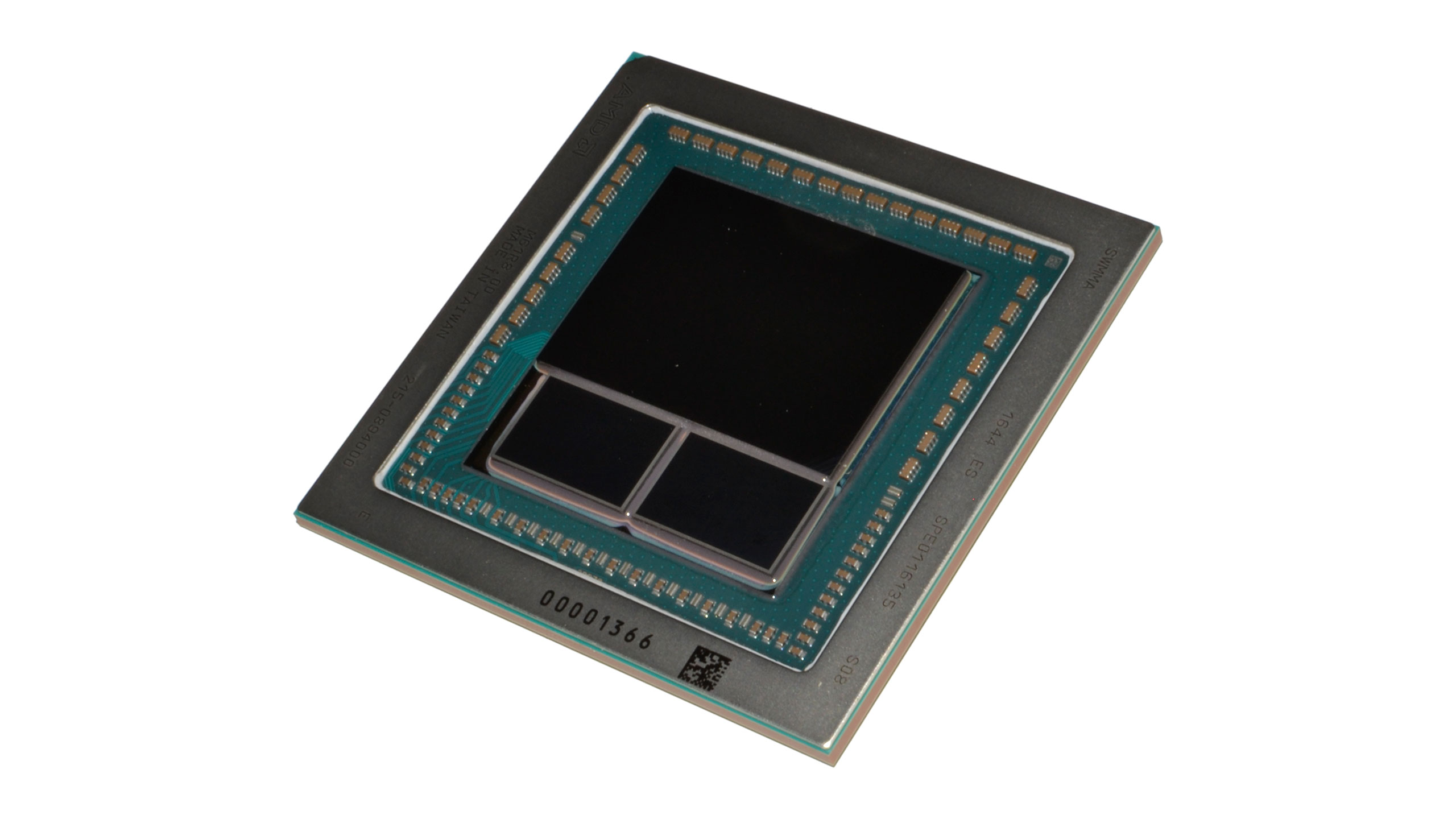

Earlier this week, I posted our initial review of AMD's RX Vega 56 and Vega 64, showing much of what the new cards have to offer. Due to time constraints (the cards arrived Friday morning, for a Monday unveiling), I couldn't complete all the testing I wanted. Now, after a few more days with the Vega 64, I'm ready to issue a final verdict. If you're looking for more details on the underlying architecture and changes made with Vega, you can read our Vega deep dive and Vega preview, but the focus here will be specifically on the air-cooled RX Vega 64: how it performs, how it overclocks and underclocks, and more.

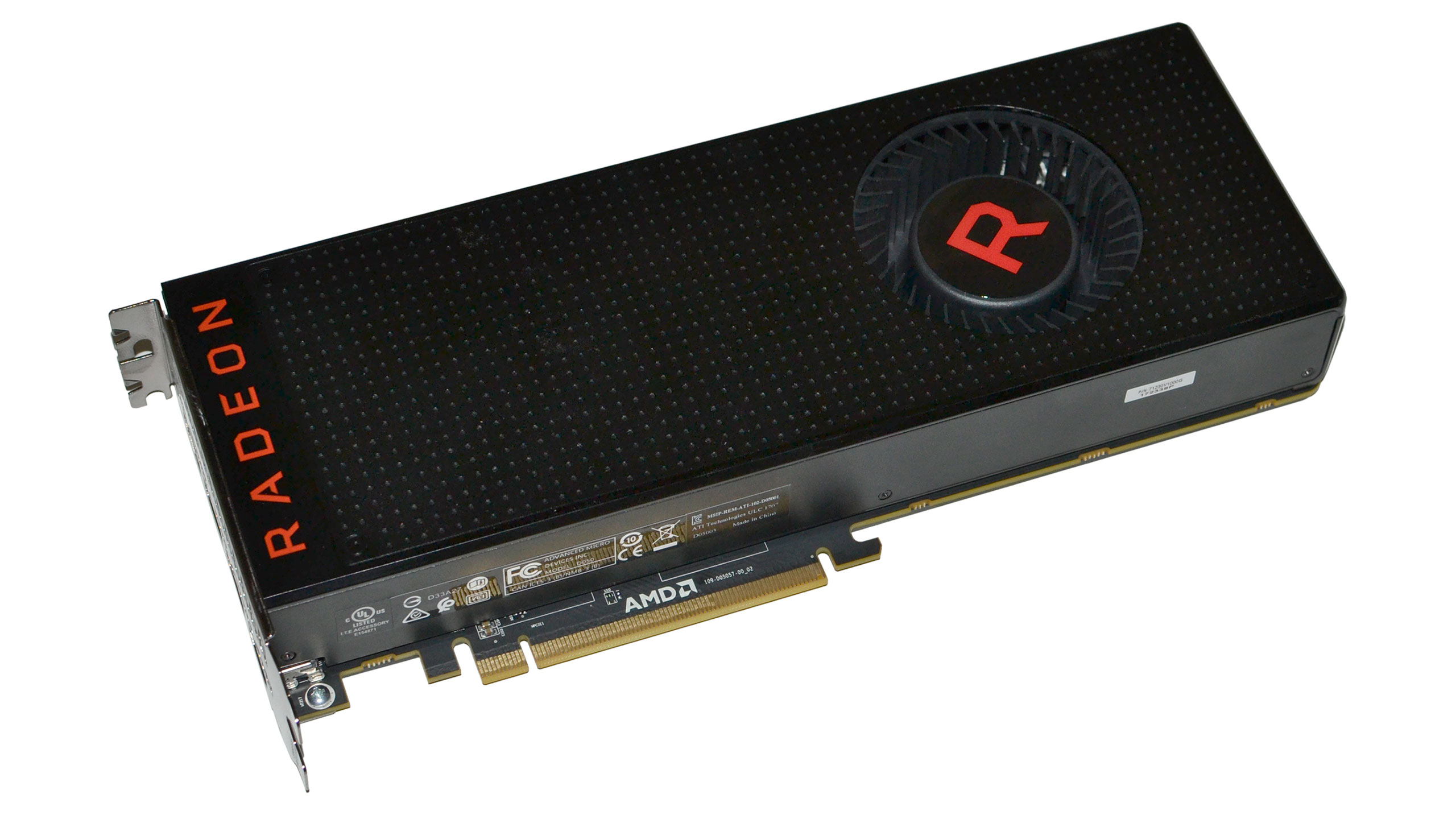

The RX Vega 64 comes in three flavors from AMD: the reference air-cooled card I'm reviewing, a limited edition model with a shiny blue brushed aluminum shroud that should otherwise perform the same, and the liquid-cooled variant that's currently only available via the Aqua Pack. All three cards clock the HBM2 memory at the same 945MHz base clock, but the liquid-cooled model raises the GPU base clockspeed by 12.8 percent, and the boost clock by 8.5 percent. On paper, then, the liquid card should be up to around 13 percent faster, but the real gap could be larger or smaller depending on whether you're willing to increase the default fan speeds.

My benchmark suite and testbed remain the same as in previous GPU reviews: a high-end X99 build with a healthy overclock on the CPU. That increases power use quite a bit, but it ensures the CPU doesn't hold back the fastest graphics cards. There are a couple of additional notes, however. One is that AMD has a slightly updated driver build that's designed to work better with WattMan and overclocking. The other is a new driver focused on improving cryptocurrency mining performance, with the rather unambiguous name Radeon Software Crimson ReLive Edition Beta for Blockchain Compute. Uh oh.

I know what you're thinking: after my opinion piece saying miners aren't to blame for Radeon Vega shortages (they're not, since there were never going to be enough cards available at launch), this could spell the doom of Vega as a gaming GPU. I've got a few additional thoughts and test results for mining with Vega 64 below. As it stands, Vega is still a very power-hungry card at stock, and while mining performance may have improved (more on this below), there are still better cards for mining from a pure profitability perspective.

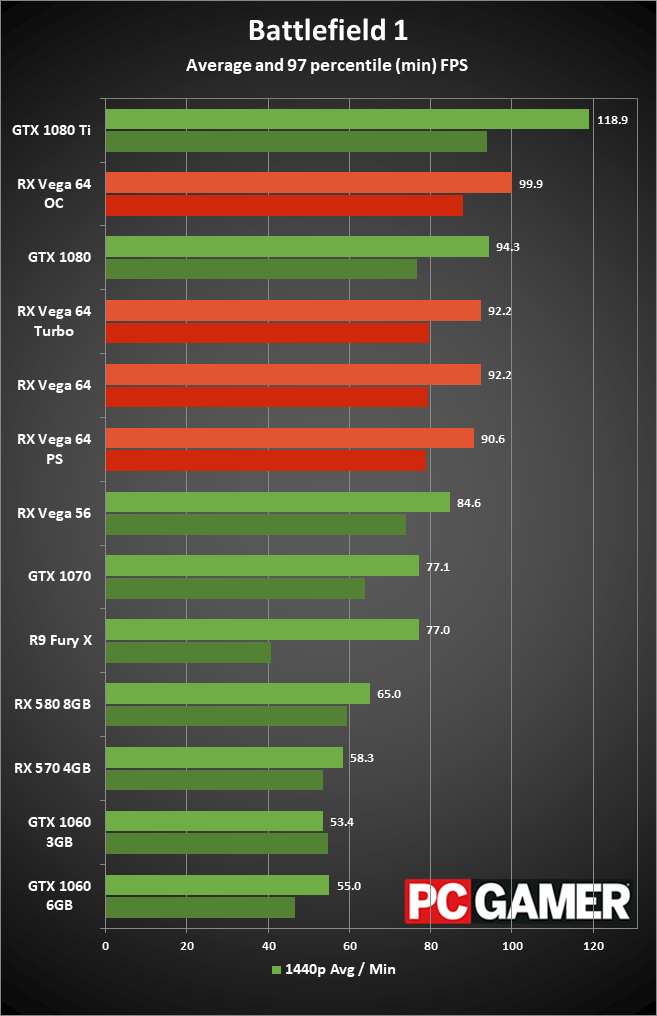

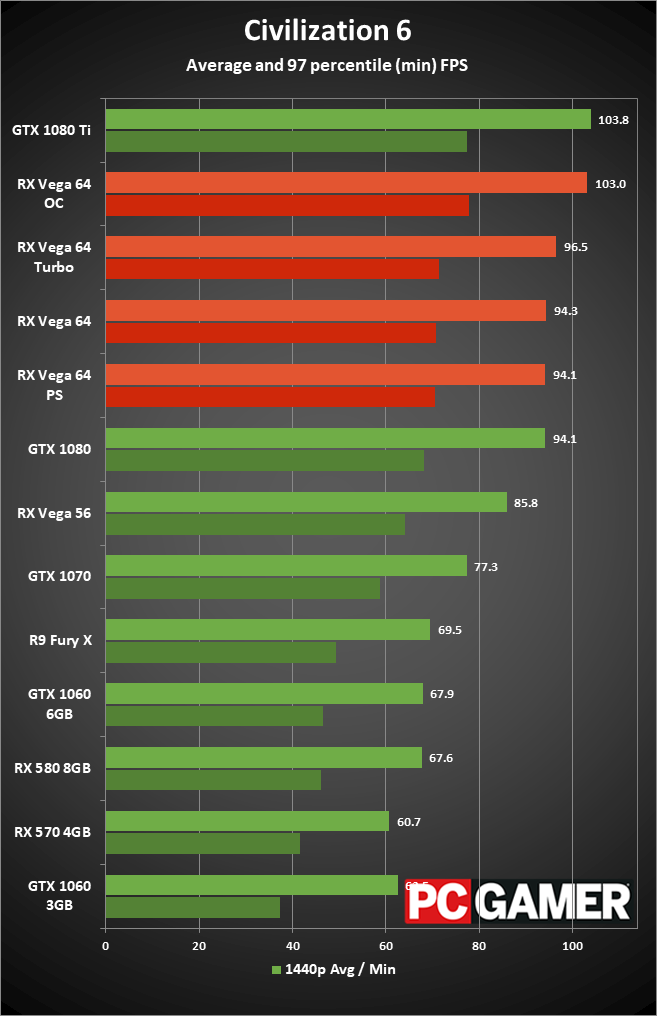

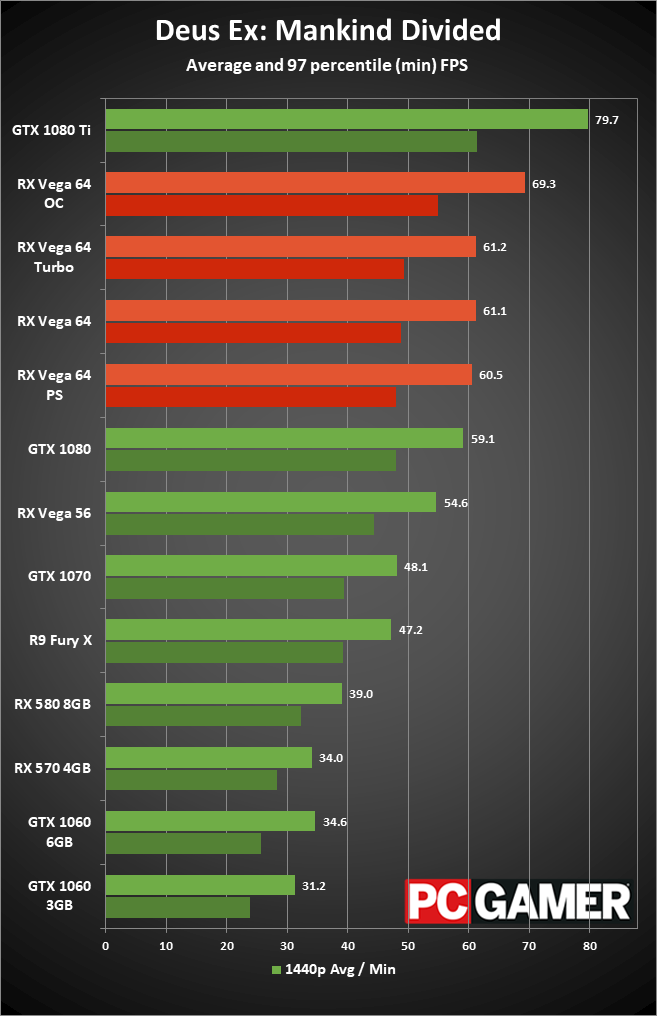

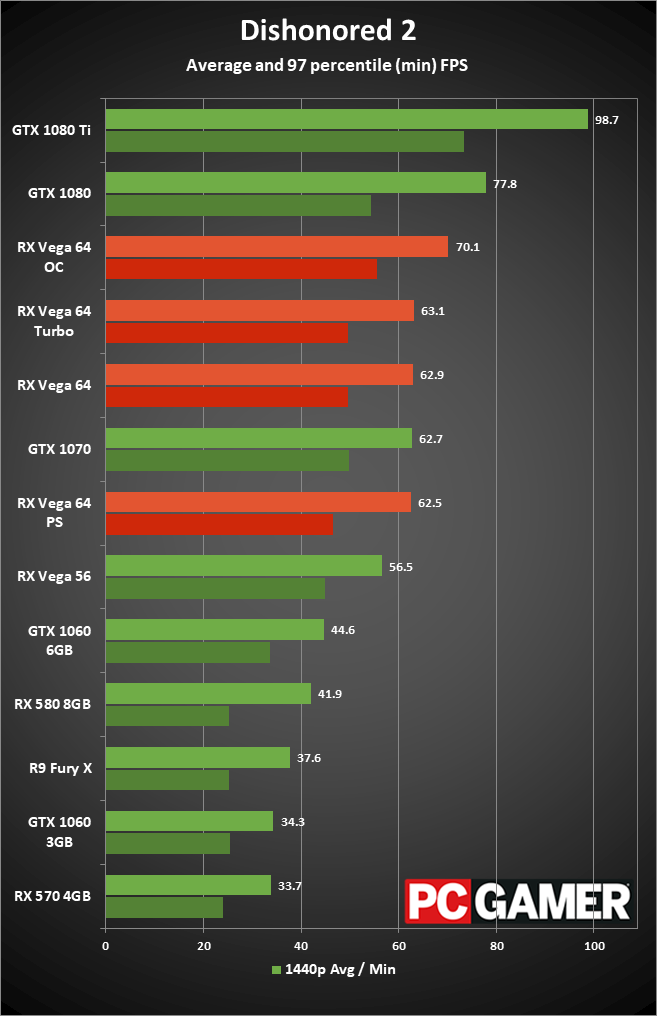

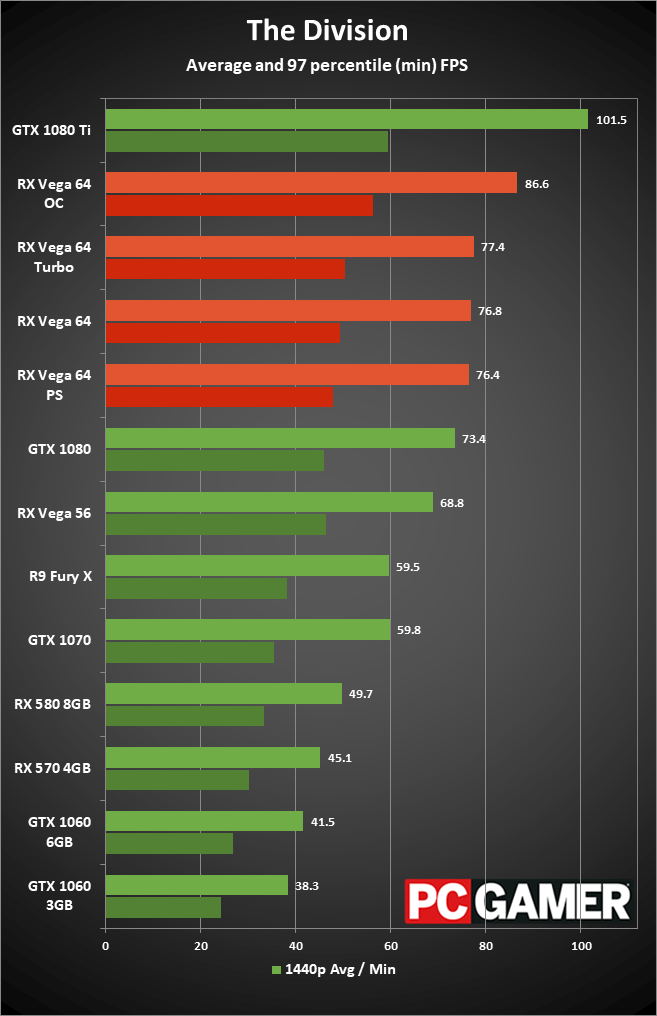

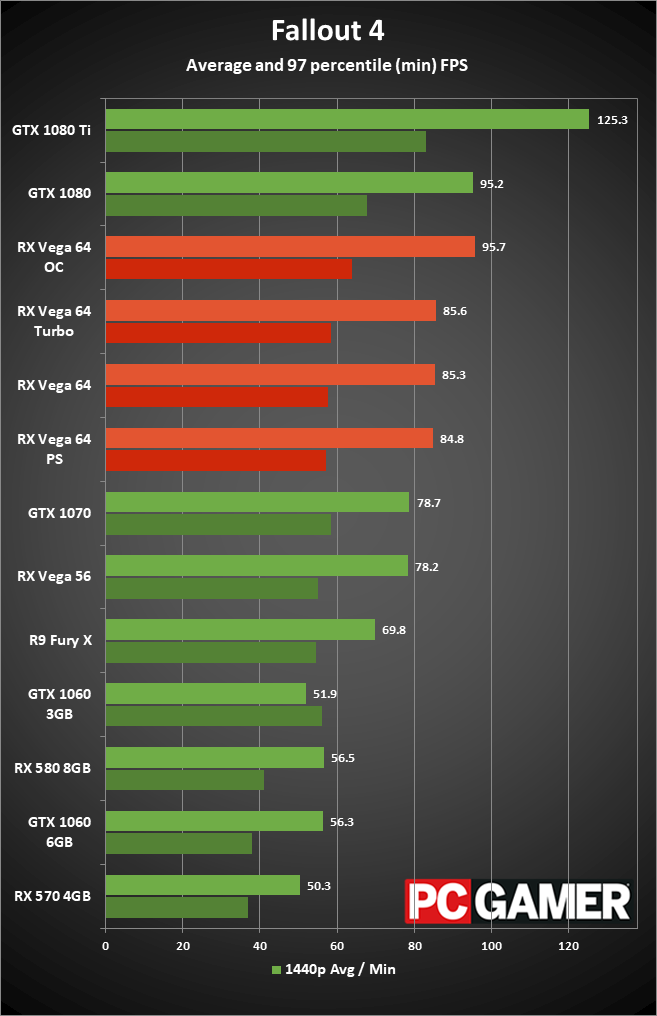

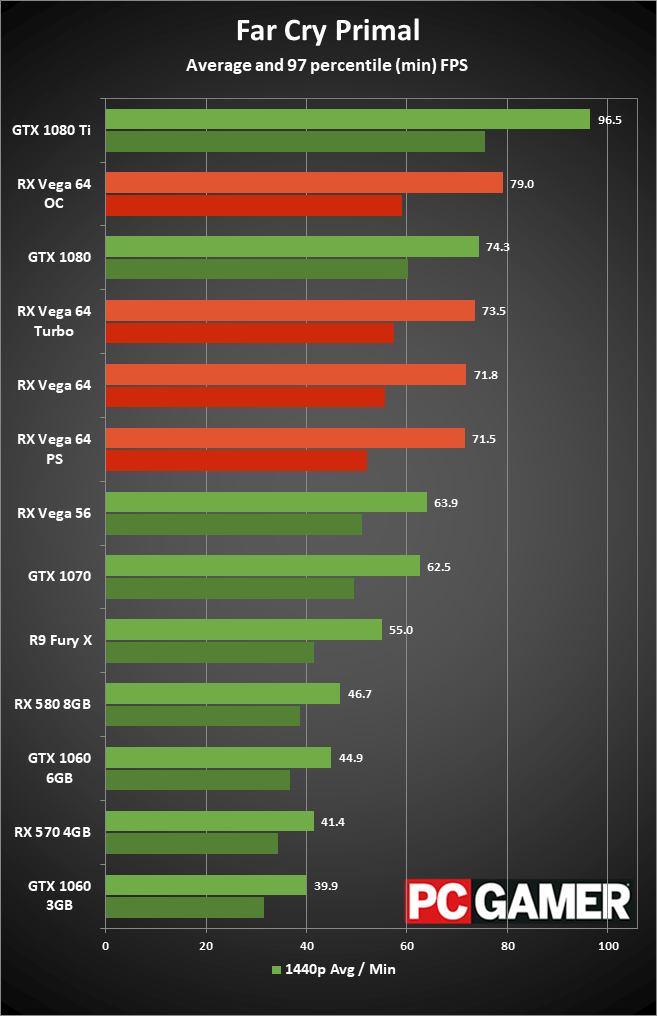

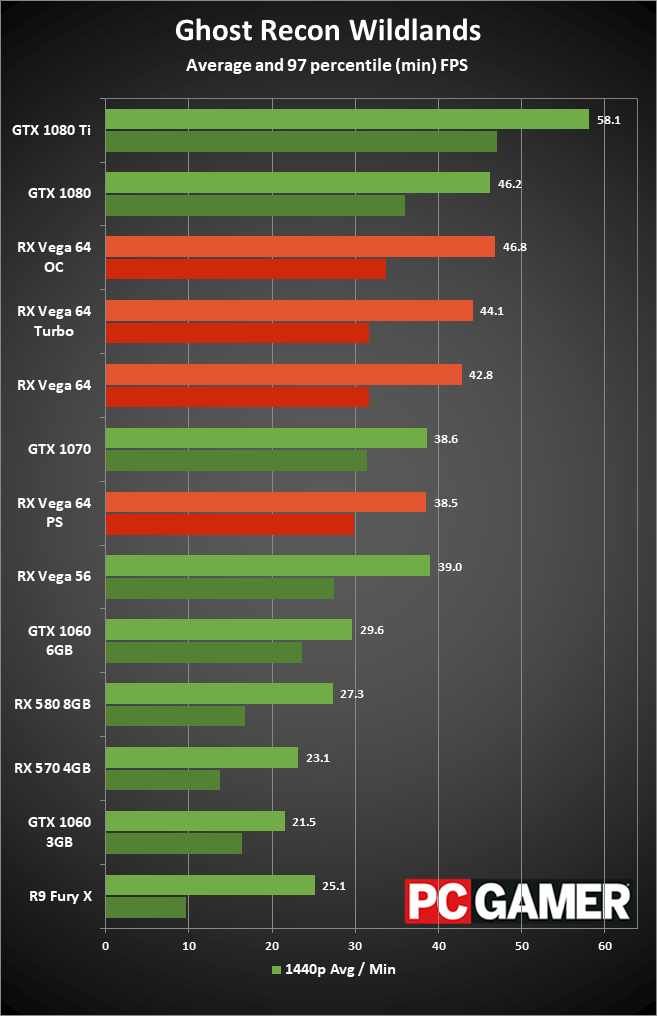

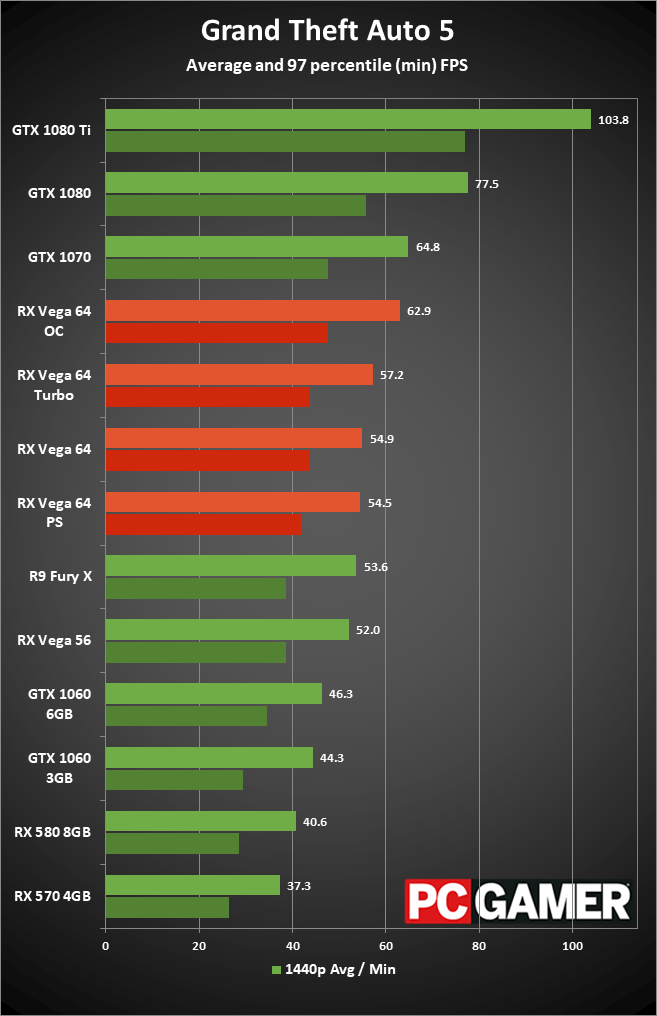

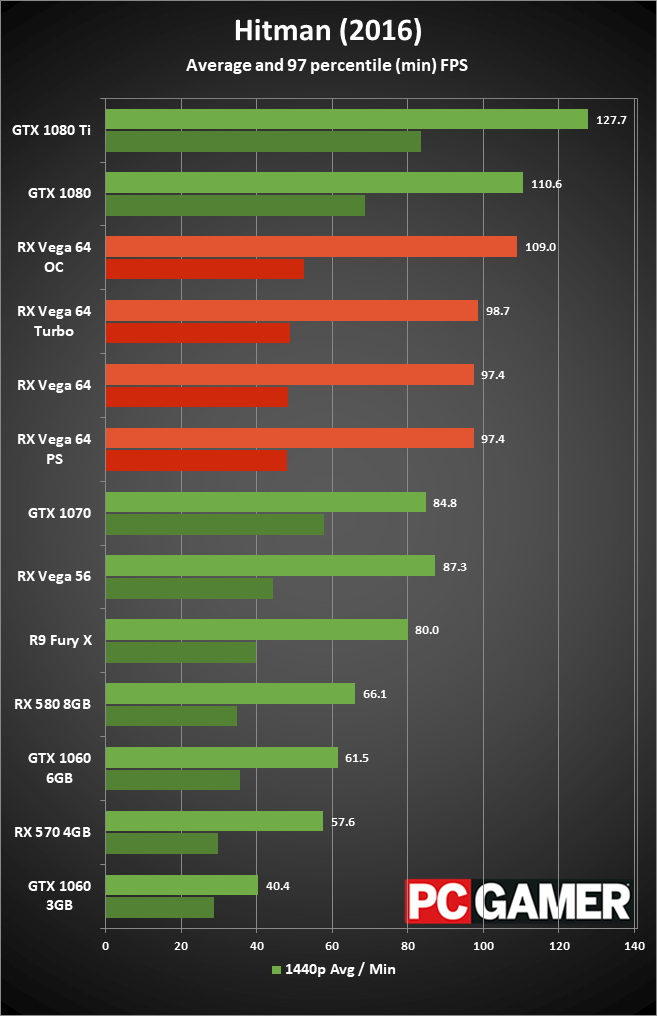

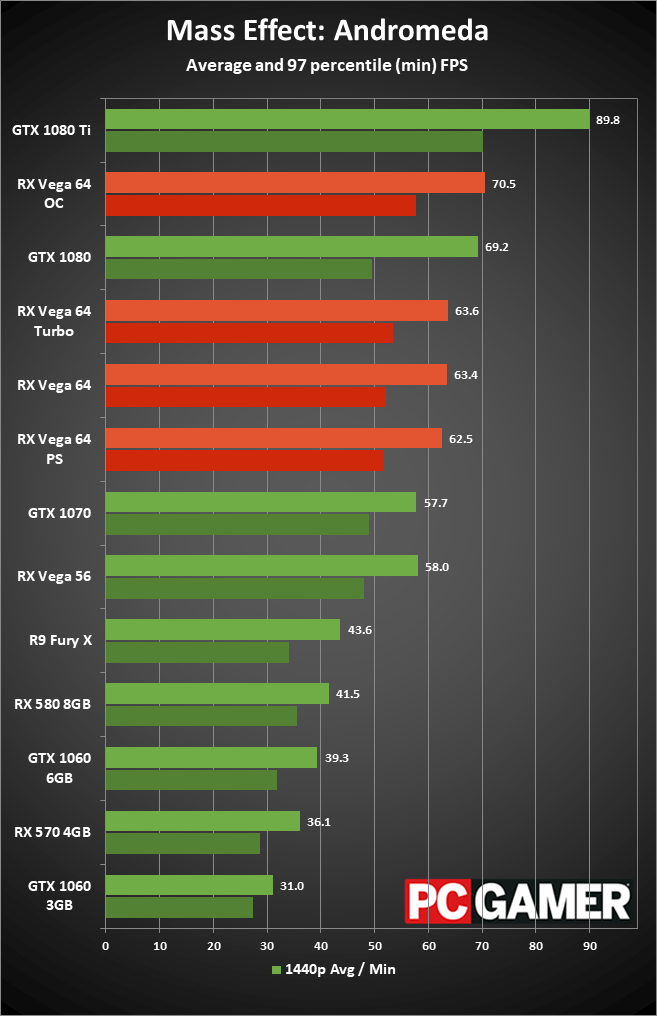

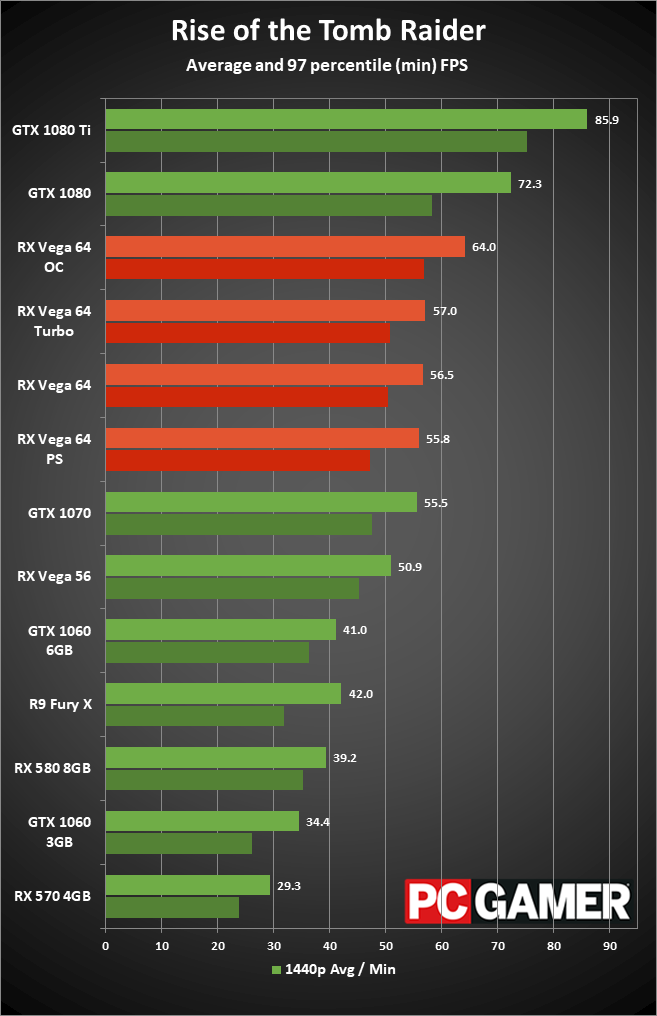

RX Vega 64 benchmarks and overclocking

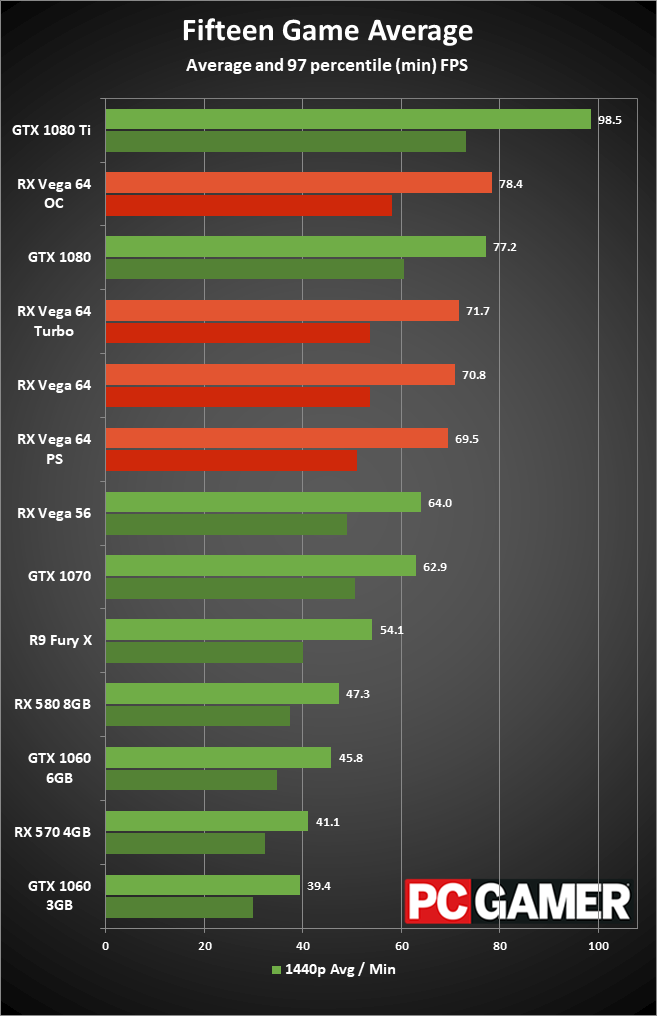

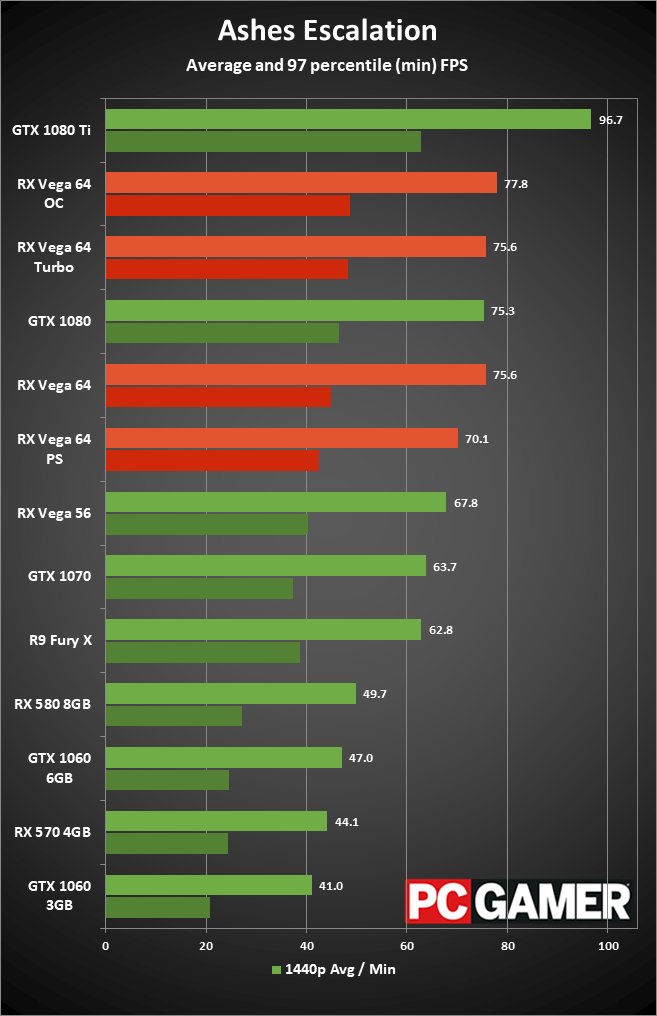

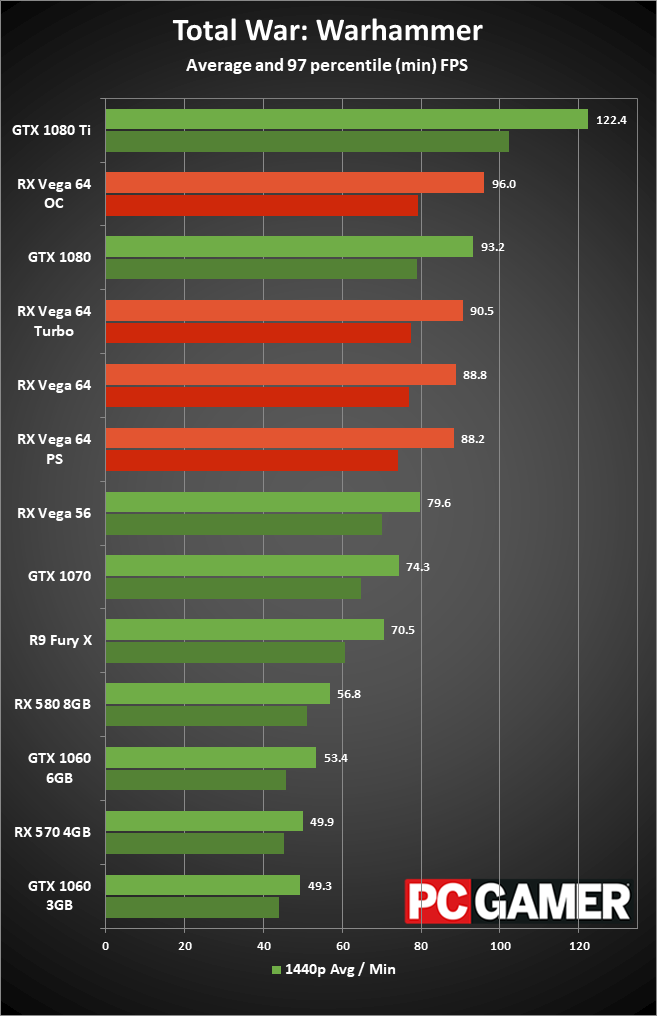

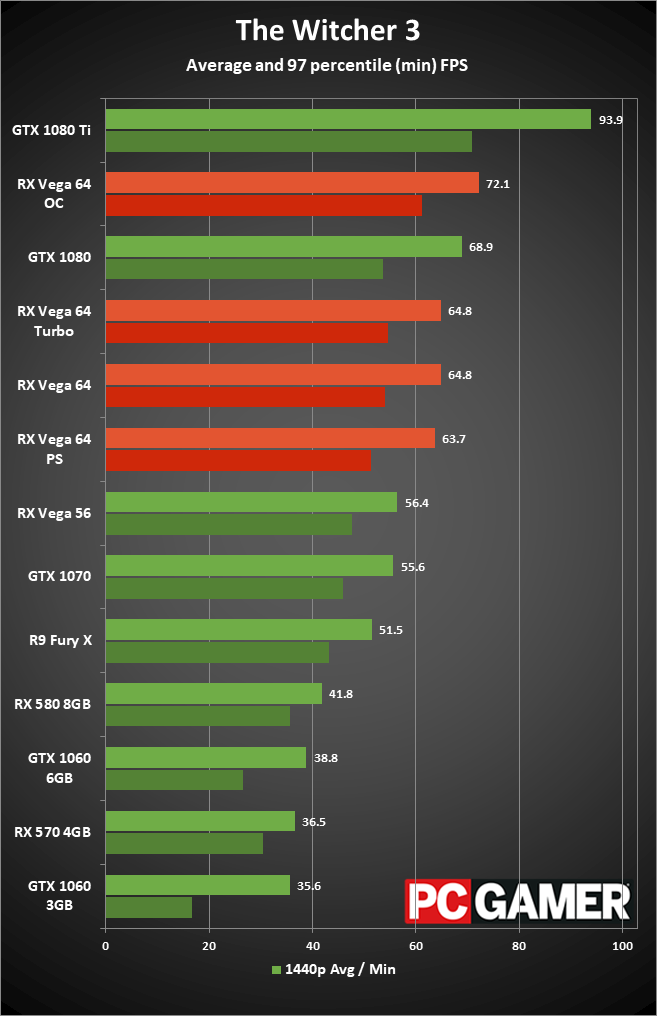

The charts below are similar to those posted earlier this week, only now I've focused specifically on 1440p performance, and I've added benchmarks for several additional configurations of the RX Vega 64. Besides stock performance (RX Vega 64), using the default "Balanced" WattMan profile, I've tested using the WattMan Power Saver profile (RX Vega 64 PS), which reduces the power use in exchange for lowered performance, and the WattMan Turbo profile (RX Vega 64 Turbo), which goes the other way and increases the power limit by 15 percent for potentially better performance.

The difficulty with the WattMan presets is that they don't adjust the temperature targets or fan speeds, and with the default fan speed capped at a relatively conservative value, the Vega 64 hits its thermal limits and starts reducing performance. For manual overclocking (RX Vega 64 OC), I've changed the fan profile to range from 3,000 to 4,900 RPM, increased the Power Limit to the maximum 50 percent, overclocked the HBM2 to 1025MHz base (524.8GB/s), and added just 2.5 percent to the GPU clockspeeds. I ran into crashes at many other settings, but don't let the small GPU overclock fool you: the GPU clocks a lot higher than stock thanks to the higher fan speed, which keeps it away from its thermal limits.
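For anyone who wants to check the memory bandwidth figure, here is a minimal sketch of the arithmetic. It assumes Vega 64's 2048-bit HBM2 interface and two transfers per clock, neither of which is stated above; the function name is just for illustration.

```python
def hbm2_bandwidth_gbs(memory_clock_mhz: float, bus_width_bits: int = 2048) -> float:
    """Peak memory bandwidth in GB/s, assuming two transfers per clock (DDR)."""
    transfers_per_second = memory_clock_mhz * 1e6 * 2
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_second * bytes_per_transfer / 1e9


stock = hbm2_bandwidth_gbs(945)         # default HBM2 clock
overclocked = hbm2_bandwidth_gbs(1025)  # the manual overclock used here

print(f"Stock:       {stock:.1f} GB/s")
print(f"Overclocked: {overclocked:.1f} GB/s")
print(f"Gain:        {(overclocked / stock - 1) * 100:.1f} percent")
```

Under those assumptions the overclock works out to 524.8GB/s, matching the figure quoted for the 1025MHz setting, or about 8.5 percent more bandwidth than stock.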

I didn't include overclocked results for the other cards, as I just want to focus on the Vega 64's potential improvements here. Suffice to say, most of the Nvidia cards can improve performance by 10-15 percent with overclocking, and the same goes for AMD's GPUs (though some models fare better than others). The good news is that with additional power and higher fan speeds (or better cooling on a custom card), RX Vega 64 is able to pass the overall performance level of the GTX 1080 FE. The bad news: it uses a lot of power in this particular configuration.


At stock, the Vega 64 (including system power) consumes 478W at the outlet—about 200W comes from the CPU and the rest of the system, so Vega comes pretty close to its 295W board power level in my test. (Other games did peak at slightly higher power draw, but I use Rise of the Tomb Raider for the power tests.) Switching to WattMan Power Saver drops power draw to 461W, while Turbo increases power draw to 518W. Other than a few specific games, like Wildlands and to a lesser extent Ashes, the WattMan profiles perform nearly the same, so you could use Power Saver and not miss out on too much.

Manually overclocking on the other hand—and I didn't specifically tune for performance per watt, going after raw maximum performance instead—can really take things to a whole other level. First, performance is up 11 percent on average, with Ashes being the only game that doesn't improve significantly. But power use… It's almost laughable how much power you can draw through the RX Vega 64. In Rise of the Tomb Raider, the system used 585W, and I noticed a few peaks in other testing of over 600W. To put that in perspective, you can run a pair of GTX 1080 cards in SLI and still use less power. The RX Vega 64 in my maxed out overclock is drawing well over 350W of power, all on its own. Yikes. Still, I'm impressed the cooling can keep up with the power draw, though the fan is definitely not quiet at the settings I used.
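The wall-power readings above can be turned into rough GPU-only estimates by subtracting the roughly 200W attributed to the CPU and the rest of the system. The sketch below does only that subtraction; it ignores PSU efficiency losses, and the 200W baseline is an approximation, so treat the output as ballpark figures rather than measurements.

```python
REST_OF_SYSTEM_W = 200  # approximate draw of the CPU and everything but the GPU

# System power at the outlet in Rise of the Tomb Raider, per profile
wall_power_w = {
    "Power Saver": 461,
    "Balanced (stock)": 478,
    "Turbo": 518,
    "Manual OC": 585,
}


def estimate_gpu_power(wall_w: float, rest_w: float = REST_OF_SYSTEM_W) -> float:
    """Rough GPU power: wall reading minus the rest-of-system estimate."""
    return wall_w - rest_w


stock_gpu_w = estimate_gpu_power(wall_power_w["Balanced (stock)"])

for profile, wall_w in wall_power_w.items():
    gpu_w = estimate_gpu_power(wall_w)
    change = (gpu_w / stock_gpu_w - 1) * 100
    print(f"{profile:<17} ~{gpu_w:.0f}W GPU ({change:+.1f} percent vs. stock)")
```

By this estimate the manual overclock lands around 385W for the GPU alone, which is roughly 38 percent more power than stock in exchange for the 11 percent average performance gain.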

Does power draw really matter?

Obviously, Vega isn't going to win any awards for power efficiency, especially not when heavily overclocked. But for stock operation, where it's basically trading blows with the GTX 1080, how much does the increased power use really matter? The answer depends in part on what sort of system you're planning to put Vega in. For smaller builds, the increased power draw could become a factor—I wouldn't put Vega 64 into a mini-ITX system, but ATX cases should be fine. You'll need a higher wattage PSU, but if you already have a high-end desktop that meets all the other requirements? RX Vega 64 isn't substantially different from previous high-end GPUs like the R9 Fury X, or R9 390X/390/290X/290.

The real problem isn't the previous generation AMD GPUs, it's Nvidia's GTX 1080. There is nothing here that would convince an Nvidia user to switch to AMD right now. Similar performance, similar price, higher power requirements: it's objectively worse. In fact, the power gap between Nvidia and AMD high-end GPUs has widened in the past two years. GTX 980 Ti is a 250W product, R9 Fury X is a 275W product, and at launch the 980 Ti was around 5-10 percent faster. Two years later, the performance is closer, nearing parity, so AMD's last high-end card is only moderately worse in performance per watt than Nvidia's Maxwell architecture. Pascal versus Vega, meanwhile, isn't even close in performance per watt, with Vega 64 using over 100W (more than 60 percent) additional power for roughly the same performance as the GTX 1080.

It's difficult to point at any one area of Vega's architecture as the major culprit for the excessive power use. My best guess is that all the extra scheduling hardware that helps AMD's GCN GPUs perform well in DX12 ends up being a less efficient way of getting work done. It feels like Vega is to Pascal what AMD's Bulldozer/Piledriver architectures were to Intel's various Core i5/i7 CPUs. Nvidia has been able to substantially improve performance and efficiency each generation, from Kepler to Maxwell to Pascal, and AMD hasn't kept up with the pace.

How much does 100W of extra power really matter, though? Besides the potential need for a higher capacity PSU and improved airflow, it mostly comes down to power costs. If you pay the US average of $0.10 per kWh, and you game two hours every single day in a year, you're looking at an increase in power costs of just $7.30—practically nothing. Even if you're playing games twice as much, four hours per day, that's only $14.60. But if power costs more in your location, like $0.20-$0.30 per kWh, it starts to add up.
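If you want to plug in your own electricity rate and gaming habits, the cost arithmetic is simple enough to sketch. The function name and the specific rates in the loop are illustrative; the 100W gap and the hours per day come from the discussion above.

```python
def annual_power_cost(extra_watts: float, hours_per_day: float,
                      price_per_kwh: float, days: int = 365) -> float:
    """Yearly cost in dollars of drawing extra_watts for hours_per_day."""
    extra_kwh = extra_watts / 1000 * hours_per_day * days
    return extra_kwh * price_per_kwh


EXTRA_WATTS = 100  # approximate gap between Vega 64 and GTX 1080

for price in (0.10, 0.20, 0.30):
    for hours in (2, 4):
        cost = annual_power_cost(EXTRA_WATTS, hours, price)
        print(f"${price:.2f}/kWh, {hours}h/day: ${cost:.2f} per year")
```

At $0.30 per kWh and four hours a day, the same 100W gap costs $43.80 a year, which is where it starts to become noticeable over the life of a card.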

What about cryptocurrency mining?

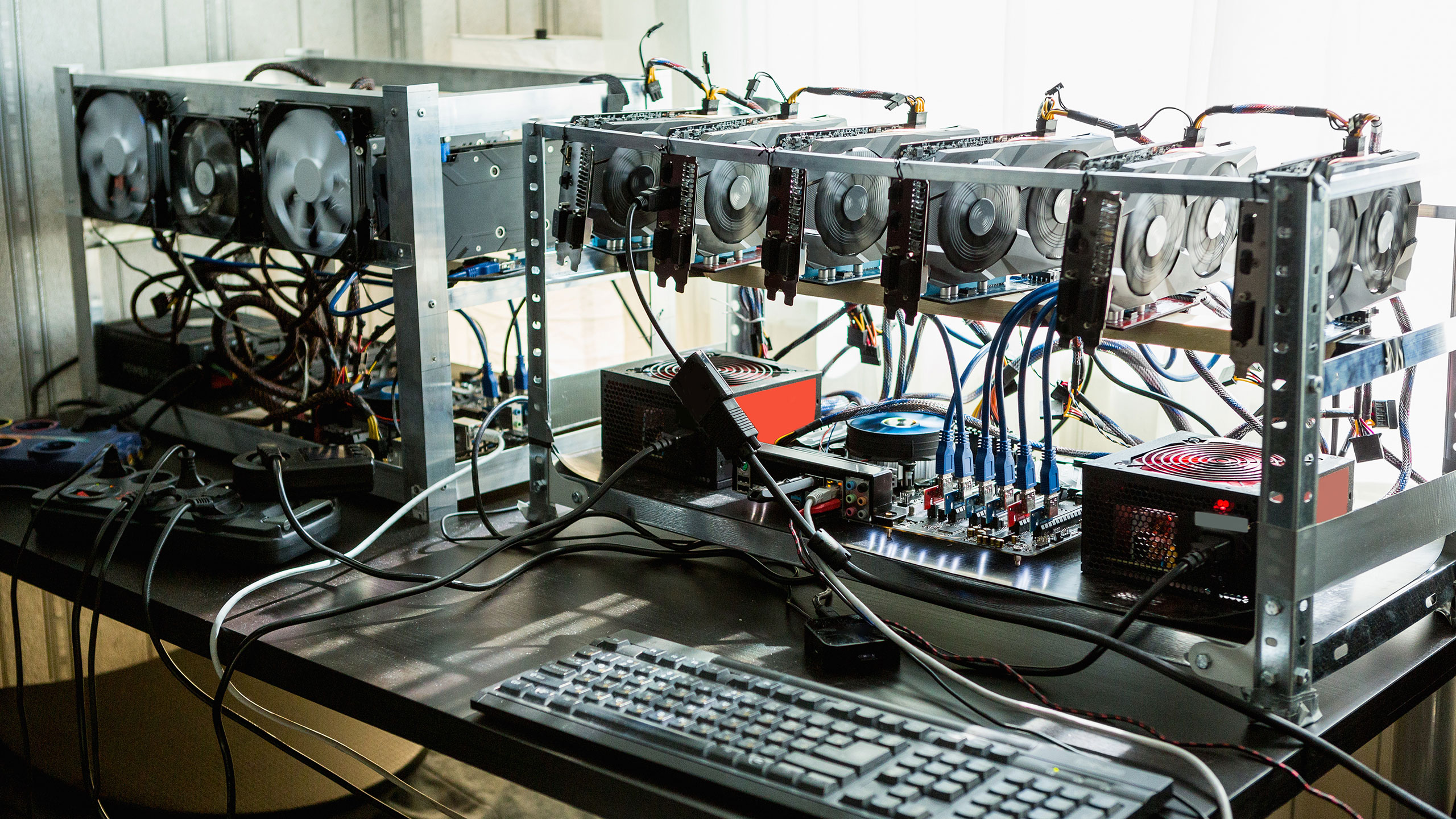

Mining with GPUs has become a big deal, thanks to the surging prices of Bitcoin, Ethereum, and many other cryptocurrencies. AMD and Nvidia have both talked openly about the effect of miners on graphics card pricing, and Nvidia notes that blockchain technologies aren't likely to go away. Vega 64 quickly went out of stock at launch, and it remains so in the US, but the initial supply was very limited. Given the pent-up demand from AMD gamers, plus the potential interest of miners (no doubt helped by rumors of 70-100MH/s performance on Vega), demand far exceeded supply, and I certainly wouldn't blame the shortages on miners. But how do the cards actually perform when it comes to mining?

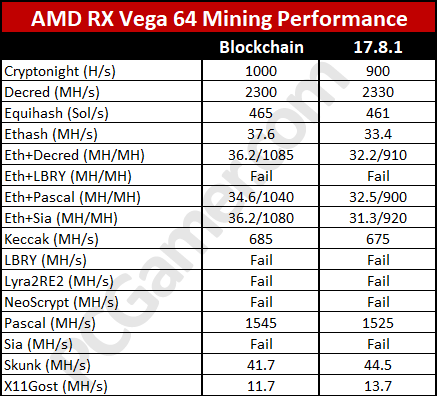

Ethereum often gets the limelight right now, but there are numerous hashing algorithms used for cryptocurrencies—Ethash, Lyra2RE2, Equihash, NeoScrypt, LBRY, X11Gost, Pascal, Blake (2b and 14r), and most recently a new one called Skunk. AMD has also released a driver that's 'tuned' for cryptocurrencies, or at least it's better than the generic graphics driver, so I wanted to check that out. Here's how things break down right now, using the blockchain driver compared to the original driver, on the RX Vega 64:

The blockchain driver (and updated mining software) definitely helps, in most cases—there are a few algorithms like Skunk and X11Gost that are slower with the new driver. Power draw in my testing remained relatively constant, at 375W for the system (about 95W for everything except the GPU). Increasing the fan speed, as with overclocking, can help a bit, and I'd definitely recommend it if you're setting up large-scale mining, as otherwise Vega 64 can run quite hot.

But the bottom line for mining is typically profitability—investment versus return. And right now, RX Vega 64 looks pretty mediocre, though I'd say the same for any GPU purchased for mining purposes right now. Vega 64 can currently net around $3.30 per day, but after power costs it ends up at $2.60 in profit (nearly the same as GTX 1080). Given power use and pricing, overall miners would be better served (for now) by going with GTX 1060 3GB cards, which are readily available. Like the GTX 1080 and 1080 Ti, Vega 64 is priced too high to be a compelling option for miners.
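As a rough check on the profit figure, here is a minimal sketch of the daily math. It assumes only the GPU's share of the power is counted (375W system minus about 95W for everything else) and that electricity costs the $0.10 per kWh US average used earlier; both are my assumptions about how the number was derived, and mining revenue shifts daily.

```python
def daily_mining_profit(revenue_usd: float, gpu_watts: float,
                        price_per_kwh: float) -> float:
    """Daily revenue minus the cost of running the GPU for 24 hours."""
    daily_kwh = gpu_watts / 1000 * 24
    return revenue_usd - daily_kwh * price_per_kwh


SYSTEM_WATTS = 375   # measured at the outlet while mining
NON_GPU_WATTS = 95   # approximate draw of everything except the GPU
gpu_watts = SYSTEM_WATTS - NON_GPU_WATTS

DAILY_REVENUE = 3.30  # approximate Vega 64 revenue per day

for price in (0.10, 0.20, 0.30):
    profit = daily_mining_profit(DAILY_REVENUE, gpu_watts, price)
    print(f"${price:.2f}/kWh: ${profit:.2f} profit per day")
```

With those assumptions the result at $0.10 per kWh comes out to about $2.63 a day, in line with the roughly $2.60 quoted, and it drops quickly as electricity gets more expensive.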

Since I'm on the subject, let me briefly touch on one other area of AMD graphics, driver support in general. AMD's Radeon Software Crimson (and now ReLive) drivers have been good in my experience, with relatively few major problems. But have they matched Nvidia's GeForce drivers? Almost. ReLive takes on ShadowPlay, and extras like Chill and Enhanced Sync are nice to see. But it ultimately comes down to performance, and here it remains a bit of a mixed bag.

For DX12 and Vulkan games, AMD hardware performs great, often beating the Nvidia equivalents. The problem is, DX12/Vulkan represent only a small portion of the gaming market, and for every DX12 game where AMD does proportionately better than average, there are multiple DX11 releases where AMD falls short. I've mentioned this repeatedly in our Performance Analysis articles—in games like Overwatch, Battlegrounds, Mass Effect: Andromeda, and more, particularly at launch, AMD hardware tends to underperform. Even with launch-day driver updates for games, new releases tend to favor Nvidia.

You're late, but welcome back to the high-end, AMD

There are multiple ways of looking at AMD's RX Vega 64. Forget for a moment the lackluster supply and limited availability, or the higher-than-expected pricing thanks to most cards being sold as part of the Black Pack. If you buy Vega 64 as a standalone card for $499, it's plenty fast for gaming purposes, and it's AMD's fastest graphics chip ever. Period. 1440p gaming using a FreeSync monitor is a great experience overall. If that's what you're after, wait for Vega 64 to get back to MSRP (or hold off for the Vega 56 at $399, which will also go out of stock at launch), and you're set. That's the best way of looking at Vega 64.

Unfortunately for AMD, Vega doesn't exist in a vacuum. Nvidia's Pascal architecture launched last May, and it continues to be very potent. We've had RX Vega 64 performance available from Nvidia since the GTX 1080 arrived, and even today it's still a bit faster and it uses a lot less power—and it even costs less, thanks to the current Black Pack pricing. RX Vega 64 is late to the party, and to make matters worse, it thought this was supposed to be a costume party and showed up wearing a Power Rangers outfit. The other attendees are not impressed. But at least AMD is showing up in the high-end discussion, which is better than being MIA.

Mining is an area where Vega seems to have a lot of untapped potential, and there are still hints that future drivers/firmware could improve Vega mining performance by 50 percent or more, but I don't think that's an area of long-term, sustainable growth. Blockchain technologies may not go away any time soon, but graphics cards need to be good at gaming first. Otherwise, gamers go elsewhere, Nvidia increases its market share, and game developers begin to optimize even more for Nvidia over AMD.

RX Vega 64 ends up as AMD's insane muscle car version of the Vega 10 architecture. It's fast and flashy, and it can burn rubber and make a lot of noise, but there are more refined sports cars on the track that handle better and don't need to come in for a pitstop to refuel quite so often. If Vega had launched at close to the same time as Polaris and Pascal, it would be far more impressive. But running 15 months behind the competition only to deliver similar performance while using over 60 percent more power? That's worse in some critical areas, and it will take more to convince gamers that this is the best way forward.


Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.