AMD LiquidVR vs. Nvidia VRWorks, the SDK Wars

A rose by any other name….

One of the keys to enabling a great VR experience is providing low-latency input and display updates. While the core concepts of VR have been around for quite a while, most of the early attempts—particularly in the 80s and 90s—were plagued by low resolution displays with relatively high latency. Graphics fidelity has obviously come a long way since Lawnmower Man (1992), though many of the tech demos and early games we're seeing today aren't going after photo-realistic graphics. But in all cases, keeping frame rates high and consistent and latency low is the name of the game.

As the primary graphics companies in the PC space, AMD and Nvidia are both heavily involved with VR, and they both have SDKs (Software Development Kits) to help game developers create the best VR experience possible. For AMD, their SDK is LiquidVR, and Nvidia has VRWorks. Besides these two SDKs, there are also SDKs for Oculus, Vive, and other platforms. There's plenty of functionality overlap in the SDKs, and for the end users it shouldn't matter too much what's going on behind the scenes, as long as the final product works well, but the technological details are still interesting. Each SDK provider has specific goals, and broadly speaking there's no single SDK or library that functions as a superset of all the options, so let's take a high level look at the competing options. Our focus will primarily be on the AMD and Nvidia options here, as the headset vendors are generally working with AMD and Nvidia when creating their own solutions.

AMD's LiquidVR SDK

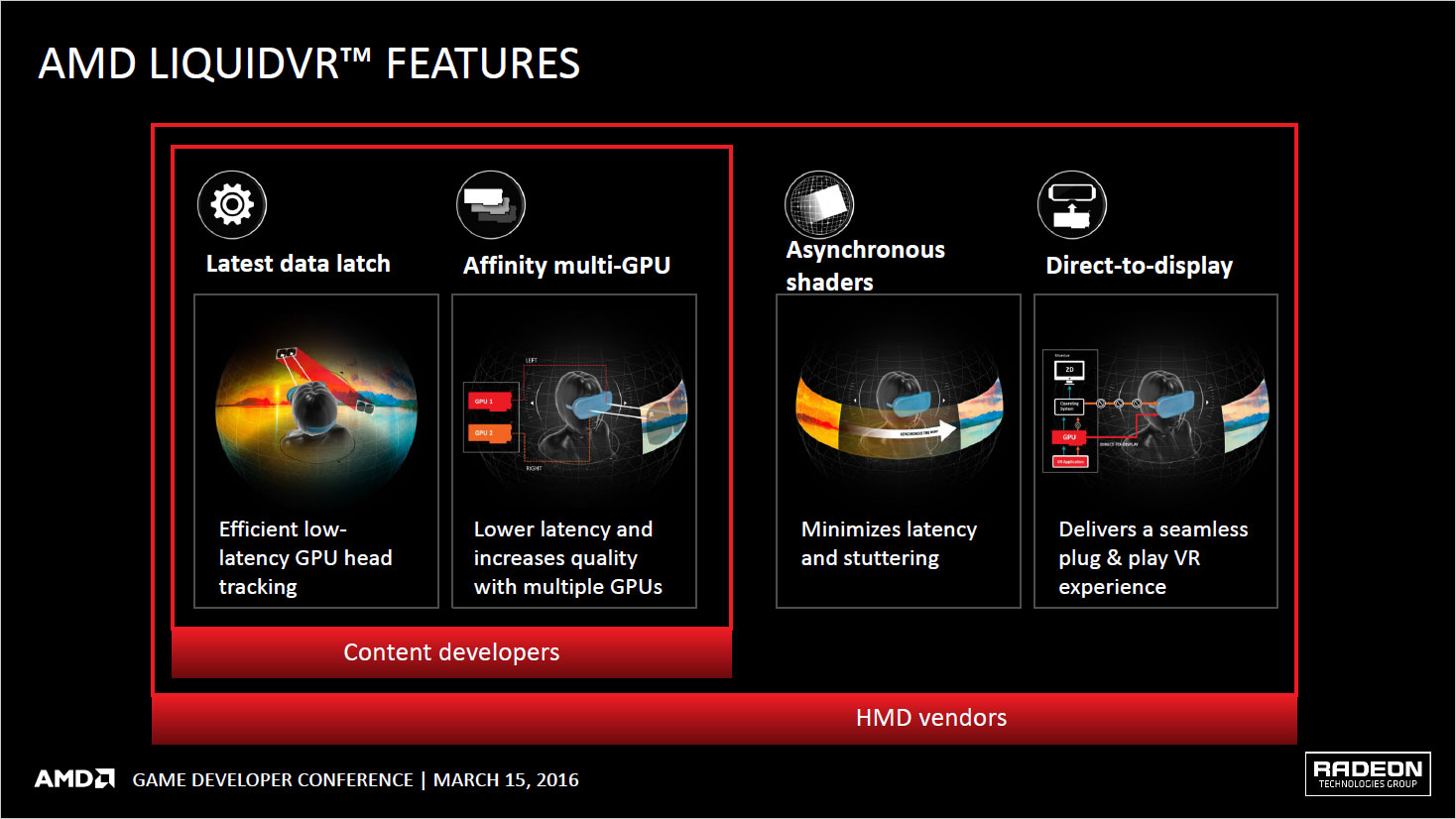

Starting with AMD's LiquidVR (because, hey: alphabets!), there are four key features that AMD currently highlights. All of these are supported via AMD's GPUOpen initiative, meaning full source code is available on GitHub, and developers are free to modify and use the code as they see fit. It's difficult to say any one feature is more or less important than the others, but the central theme is latency reduction.

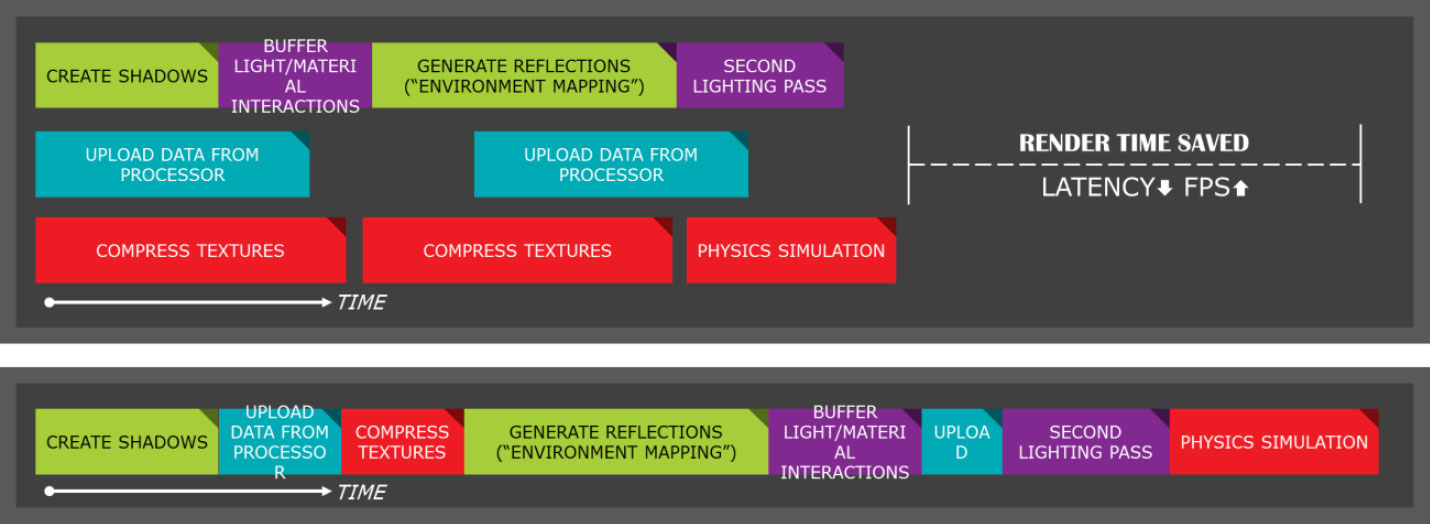

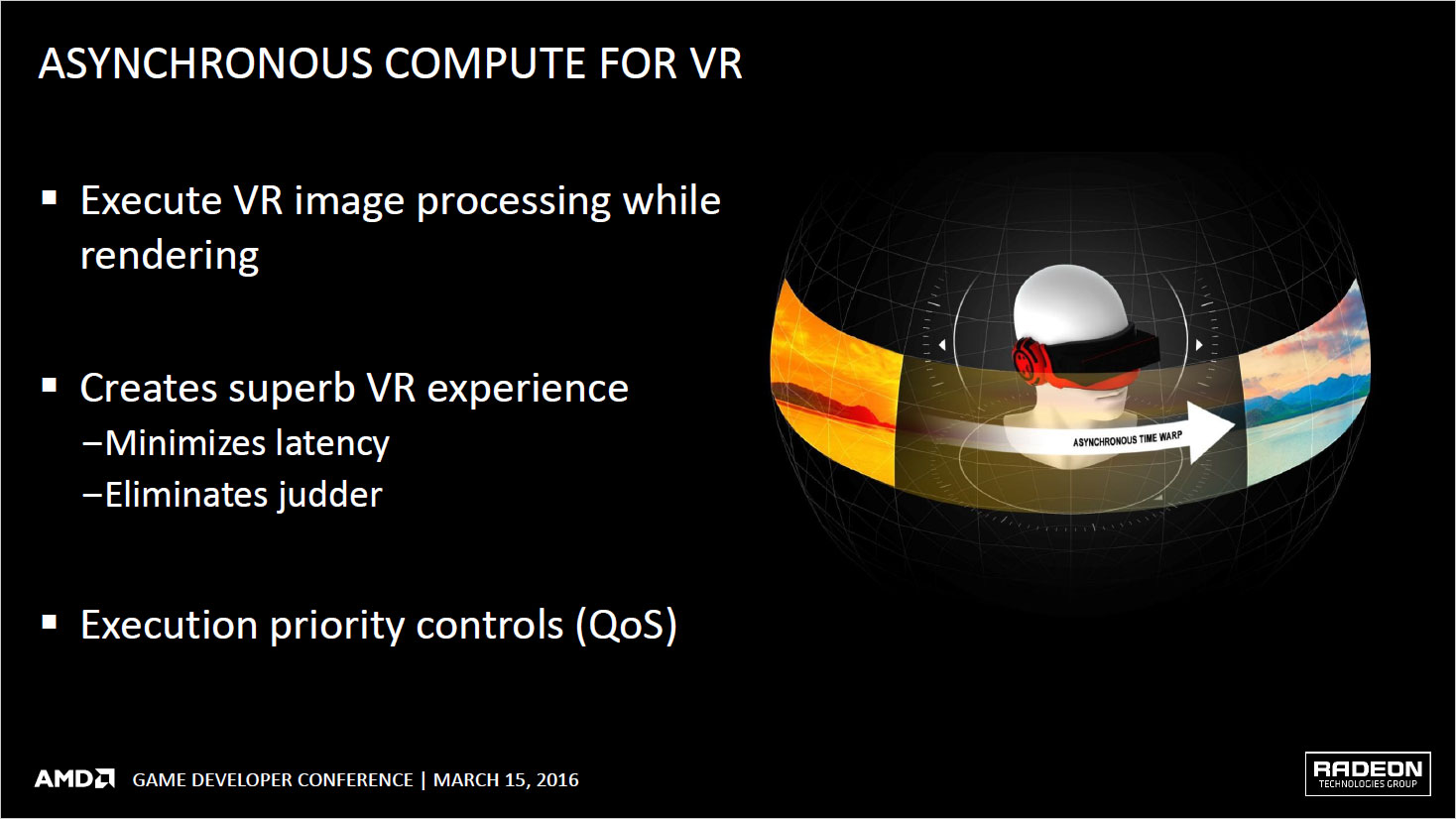

What you'll find with the various VR SDKs is that similar technology goes by a variety of names. The end goal is usually the same, though the particulars of the implementation may vary slightly. We'll start with the big differentiator before getting to the techs that are common to the vendors. For AMD, the big item that they can point to is their Asynchronous Compute Engine (ACE), or async shaders. Originally introduced with the very first GCN architecture in late 2011 with the HD 7970, async shaders are fundamentally about providing flexibility to command scheduling. Most GPUs treat compute and graphics commands as separate and distinct tasks, but ACE provides the ability to run both compute and graphics work concurrently.

This didn't really gain a whole lot of attention back in 2011/2012, basically being viewed as a way for the GPU to efficiently schedule work for the Compute Units (CUs). At a low level, GCN is an in-order processing architecture, but the ACE scheduler can reorder things to make better use of resources. Jump forward to the modern day, however, and async shaders have become potentially much more useful. Now it's possible to mix and match compute and graphics commands in the same instruction stream, and the ACE will queue things up for the appropriate execution units. DX12, Vulkan, and other low-level APIs end up being more flexible thanks to the presence of async shaders.

Without ACE or some similar feature, other GPUs are forced to execute a context switch when moving from graphics to compute, which involves saving a bunch of state information and then changing command streams, preempting the current workload to run a different workload, followed by restoring the original state. All of this takes time, and VR is highly susceptible to latency issues. What we don't know precisely is how much time is required to execute a context switch, but more on that later. The bottom line is async shaders make it easier and more efficient to do certain tasks, and the biggest beneficiary is likely Asynchronous Time Warp (ATW).

ATW at first sounds like a crazy idea; it was apparently first proposed and implemented by graphics programming legend John Carmack (Wolfenstein 3D, Doom, Quake, etc.) when he was working with the Gear VR. With all the telemetry data coming from the system (telemetry being the position and the direction the user is facing, along with potentially other tidbits from the controllers), the immersive aspect of VR can be seriously compromised by latency. Imagine a worst-case scenario where you're trying to run a game with a 90Hz display, so you have 11ms to complete the rendering of a frame before it needs to be presented to the viewer. If you miss that deadline, the last frame gets repeated, effectively dropping the experience to 45 fps instead of 90 fps. Cue the nausea and simulation sickness. ATW helps in two ways.


First, let's take the instance of a 90Hz display where occasionally the next frame is ready just a bit too late—maybe the system is capable of 89.9 fps averages, with most frames ready in time but a few that finish rendering just a moment too late. This creates a hitching effect on the repeated frames, and ATW uses some black voodoo code to "warp" the last frame and try to fudge the difference between when it was completed and the current telemetry information. Now if the telemetry is changing rapidly, ATW isn't going to work that well, but when you're running at 90Hz updates, there will be a lot of cases where telemetry has only moved a little bit. With some clever math, you can approximate this rather quickly and fake an intermediate frame. Presto: lower perceived latency, happier users, and no puking!
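To put rough numbers on that deadline, here's a minimal Python sketch of the frame budget math. It assumes a simplified model with no time warp, where a frame that finishes late simply waits for the next refresh; the function names are ours and purely illustrative.

```python
import math


def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / refresh_hz


def effective_fps(refresh_hz: float, render_ms: float) -> float:
    """Displayed frame rate if every frame takes render_ms to render.

    Simplified model: a new frame can only appear on a refresh boundary,
    so a frame that overruns the budget occupies a whole number of
    refresh intervals and the previous frame is repeated in the meantime.
    """
    budget = frame_budget_ms(refresh_hz)
    intervals = max(1, math.ceil(render_ms / budget))
    return refresh_hz / intervals


if __name__ == "__main__":
    print(round(frame_budget_ms(90), 2))  # 11.11
    print(effective_fps(90, 10.0))        # 90.0
    print(effective_fps(90, 12.0))        # 45.0
```

Under this model, a frame that takes 12ms instead of 11ms costs a full refresh interval rather than just one extra millisecond, which is why near misses hurt so much.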

If that sounds like a great idea—and pretty much all the major developers agree that it is—the second way ATW helps is that rather than only warping old frames in cases where a new frame isn't ready, it can simply warp every single frame. So all the rendering takes place, and that can take somewhere around 8-9ms, and then right at the end before updating the display, you grab the telemetry data and do a time warp for the past 8ms. In many cases the resulting image will hardly change at all, but for fine-grained movement the perceived latency is now well under 10ms.
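As a rough feel for how small that last-moment correction usually is, here's a Python sketch that estimates how far the image needs to shift for a pure head rotation. This is not how any shipping ATW implementation works internally; it assumes a simple pinhole camera, yaw-only movement, and made-up display numbers (a 1080-pixel-wide view with a 90 degree field of view), and it only looks at the center of the image.

```python
import math


def focal_length_px(width_px: int, hfov_deg: float) -> float:
    """Pinhole focal length in pixels for a given horizontal field of view."""
    return (width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def warp_shift_px(yaw_rate_deg_s: float, elapsed_ms: float,
                  width_px: int, hfov_deg: float) -> float:
    """Approximate horizontal shift (in pixels, at the image center)
    needed to correct for head yaw that happened during elapsed_ms."""
    delta_rad = math.radians(yaw_rate_deg_s * elapsed_ms / 1000.0)
    return focal_length_px(width_px, hfov_deg) * math.tan(delta_rad)


if __name__ == "__main__":
    # Hypothetical numbers: head turning at 100 degrees/second,
    # 8ms between the pose used for rendering and the warp.
    shift = warp_shift_px(100.0, 8.0, width_px=1080, hfov_deg=90.0)
    print(f"shift of about {shift:.1f} pixels")
```

With these assumed numbers the correction is well under ten pixels, which fits the idea that the warped image often hardly changes at all while still tracking fine head movement.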

Now here's where AMD's async shaders come into play. ATW is a compute shader that can be scheduled right along with all the other graphics tasks, and there's no need to preempt other graphics processing to make it happen. Without async shaders, ATW needs to take priority over other work, which might mean a graphics context switch. If that happens at the appropriate time, all is well and ATW works as expected, but in a worst-case scenario the preemption takes too long and the window for warping and updating a frame is missed, leading to a repeated frame and some stuttering in the VR experience.

AMD's Latest Data Latch is related to ATW, and it's a technique to reduce motion-to-photon latency, or in other words the time from when the HMD (Head Mounted Display, aka the VR headset) moves to when the display reflects the movement. The way normal rendering engines work, the CPU sets up a bunch of stuff for the GPU, including the viewport position. This gets passed to the GPU, which then renders the scene, and then the final output is sent to the display. Latest Data Latch changes things by letting the GPU do all of the work up until the point where it's time to actually start rendering pixels; the geometry and other engine work is done first, then the current positional data gets copied right before the frame is rendered. ATW can then take place with another set of updated data after the rendering, the final result being reduced opportunity for disorientation or "simulation sickness."
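One way to see why the sampling point matters is to add up how old the head pose is by the time the frame is done. The Python sketch below is a toy model only: the stage names and the millisecond timings are hypothetical, chosen to fit inside an 11ms frame, and are not measurements from LiquidVR.

```python
# Pipeline stages in the order they run (names are illustrative).
STAGES = ["cpu_setup", "gpu_geometry", "gpu_pixels", "time_warp"]


def pose_age_ms(durations: dict, sampled_before: str) -> float:
    """Age of the head pose when the frame is finished, assuming the
    pose was sampled immediately before the given stage started."""
    start = STAGES.index(sampled_before)
    return sum(durations[stage] for stage in STAGES[start:])


if __name__ == "__main__":
    # Hypothetical per-stage timings in milliseconds.
    timings = {"cpu_setup": 3.0, "gpu_geometry": 2.0,
               "gpu_pixels": 5.0, "time_warp": 1.0}

    print(pose_age_ms(timings, "cpu_setup"))   # conventional: pose set by the CPU
    print(pose_age_ms(timings, "gpu_pixels"))  # pose latched right before pixel work
    print(pose_age_ms(timings, "time_warp"))   # fresh pose grabbed again for the warp
```

In this model, the later the pose is sampled, the fewer stages contribute to its age, which is the whole point of latching the data as late as possible and then warping with yet another update.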

LiquidVR's Direct-to-Display feature is designed to prevent the OS from screwing with display updates. VR headsets are basically a new class of display that doesn't really have proper OS support, particularly in older OSes like Windows 7 and 8. Where normal computer displays are used while the system is booting and for other non-gaming tasks, VR displays aren't useful until you're actually running the VR simulation, which is a full screen experience. Having the OS get in the way trying to muck about with windows or other non-essentials wouldn't help, and Direct-to-Display is designed to eliminate that sort of undesirable behavior. Direct-to-Display also has other features, like enabling direct rendering to the front buffer, which can significantly reduce latency. Normally, in a double-buffered arrangement, rendering is done to the back buffer and when it's complete, a buffer swap occurs, synchronized to the screen blanking (aka V-sync). At a 90Hz refresh rate, that means potentially 11ms of additional latency in the worst case, so Direct-to-Display becomes very beneficial.
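The worst-case figure for a V-synced buffer swap falls out of simple arithmetic, sketched here in Python. It assumes an idealized display with refreshes at exact multiples of the refresh period starting from time zero, and the function name is ours.

```python
def vsync_wait_ms(finish_ms: float, refresh_hz: float) -> float:
    """How long a finished back buffer waits for the next vertical blank.

    finish_ms is when rendering completed, measured from a refresh at t=0.
    """
    period = 1000.0 / refresh_hz
    into_interval = finish_ms % period
    # Finishing exactly on a refresh boundary means no wait at all.
    return 0.0 if into_interval == 0 else period - into_interval


if __name__ == "__main__":
    # Finishing just after a refresh is the worst case: almost a full period.
    print(round(vsync_wait_ms(0.1, 90), 2))
    # Finishing shortly before the next refresh costs very little.
    print(round(vsync_wait_ms(10.5, 90), 2))
```

Rendering straight to the front buffer skips this wait entirely, at the cost of the application having to time its writes carefully around scanout.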

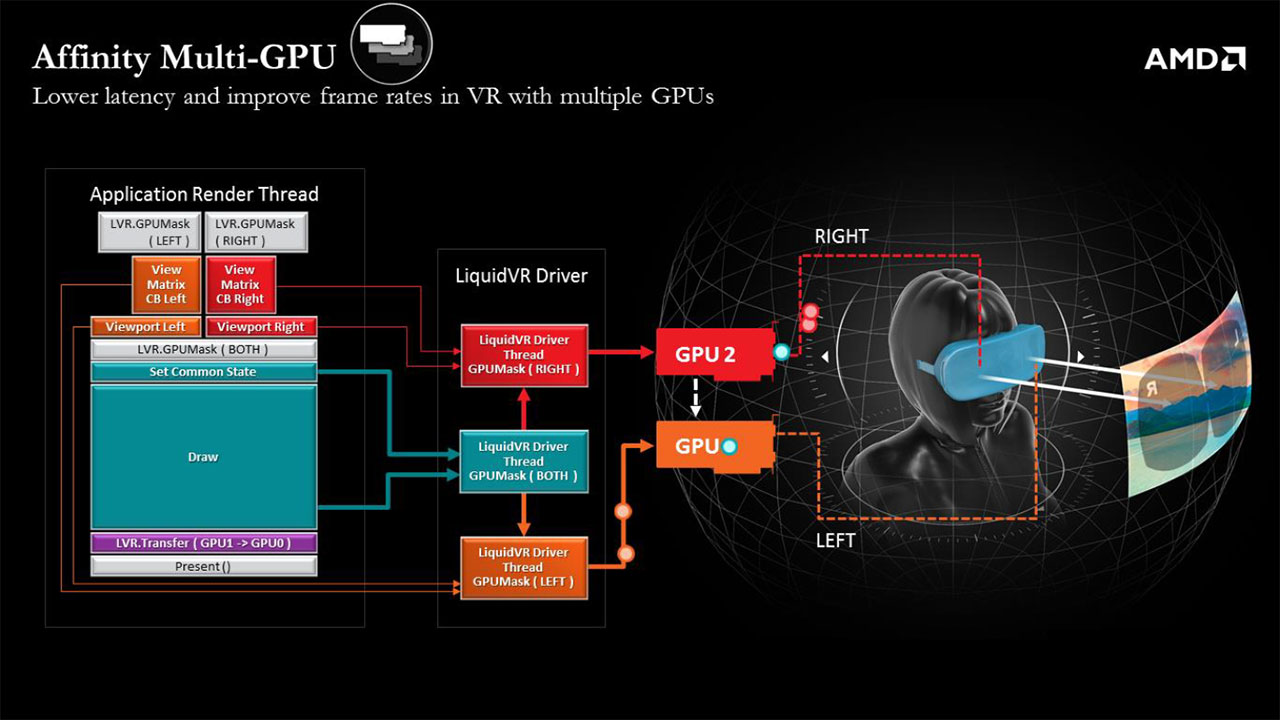

Last but not least is Affinity Multi-GPU, which aims to improve performance of CrossFire systems. One of the major problems with multi-GPU and VR is that the traditional AFR (Alternate Frame Rendering) modes used by AMD and Nvidia increase latency. Affinity Multi-GPU helps to combat this by assigning one or more GPUs to each eye and intelligently partitioning the workload. AMD states that Affinity Multi-GPU can cut latency nearly in half while doubling bandwidth and computational resources. One thing that isn't clear is how well Affinity Multi-GPU scales beyond two cards; it's easy to see how assigning one GPU to each eye works, but using three or four GPUs will be more difficult. Much like explicit multi-GPU rendering, first seen in Ashes of the Singularity, developers will have to decide how to best allocate resources in multi-GPU configurations.
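The difference between AFR and one-GPU-per-eye can be sketched with an idealized Python model. This is our own back-of-the-envelope comparison, not AMD's numbers: it assumes each eye takes the same time to render, ignores work shared between the eyes, and treats the cost of copying one eye's image to the other GPU as a hypothetical `copy_ms` parameter.

```python
def single_gpu(eye_ms: float) -> dict:
    """One GPU renders both eyes back to back."""
    return {"frame_interval_ms": 2 * eye_ms, "latency_ms": 2 * eye_ms}


def afr_two_gpus(eye_ms: float) -> dict:
    """Two GPUs alternate whole frames: frames arrive twice as often,
    but each individual frame still takes just as long to render."""
    return {"frame_interval_ms": eye_ms, "latency_ms": 2 * eye_ms}


def affinity_two_gpus(eye_ms: float, copy_ms: float = 0.0) -> dict:
    """One GPU per eye, rendering the same frame in parallel."""
    total = eye_ms + copy_ms
    return {"frame_interval_ms": total, "latency_ms": total}


if __name__ == "__main__":
    eye_ms = 8.0  # hypothetical render time per eye
    print("single  ", single_gpu(eye_ms))
    print("AFR     ", afr_two_gpus(eye_ms))
    print("affinity", affinity_two_gpus(eye_ms, copy_ms=1.0))
```

In this model AFR improves throughput but not the latency of any given frame, while splitting by eye improves both, with the copy overhead explaining why the gain would be "nearly" rather than exactly half.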

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.