AMD announces Radeon Instinct

MI6, MI8, and MI25 Machine Intelligence accelerators and a preview of Vega.

This past week, I was able to attend the AMD Tech Summit, a now-yearly event where AMD details some of its upcoming products and frequently demonstrates working prototypes. There's more to come from the summit, as AMD has various embargo deadlines spaced out over the coming month, but today we can officially talk about a new brand: Radeon Instinct.

Radeon Instinct is effectively AMD's alternative to Nvidia's Tesla line of products, though the previous FireStream (discontinued in 2012) and FirePro lines competed in this market as well. What makes Instinct different is primarily the focus on machine intelligence, which in this case equates to running the calculations required for deep neural networks. I spoke with a software developer from a company building AI software, and his summary of what AI requires is pretty simple: it uses tons of multiplication. That's why it's such a great fit for GPUs, since they have large clusters of cores that are able to do FMA (fused multiply-add) operations.
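To make the "tons of multiplication" point concrete, here is a minimal Python sketch of a single fully connected neural network layer. The function name `dense_layer` and the tiny inputs are my own illustration, not anything AMD or the developer showed; the point is only that every output value is a chain of multiply-add steps, which is exactly the operation a GPU's FMA units perform in bulk.

```python
def dense_layer(inputs, weights, biases):
    """Compute one fully connected layer and count the multiply-add steps.

    Hypothetical illustration: `weights` holds one row per output neuron.
    """
    outputs = []
    fma_count = 0
    for row, bias in zip(weights, biases):
        acc = bias
        for x, w in zip(inputs, row):
            # One multiply followed by one add; GPU hardware can fuse
            # this pair into a single FMA instruction.
            acc = acc + x * w
            fma_count += 1
        outputs.append(acc)
    return outputs, fma_count


outputs, fma_count = dense_layer(
    inputs=[1.0, 2.0, 3.0],
    weights=[[0.5, 0.5, 0.5], [1.0, 0.0, -1.0]],
    biases=[0.0, 1.0],
)
print(outputs, fma_count)  # [3.0, -1.0] 6
```

A layer with 3 inputs and 2 outputs needs 6 multiply-adds; real networks have layers with thousands of inputs and outputs, so the count runs into the millions per layer.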

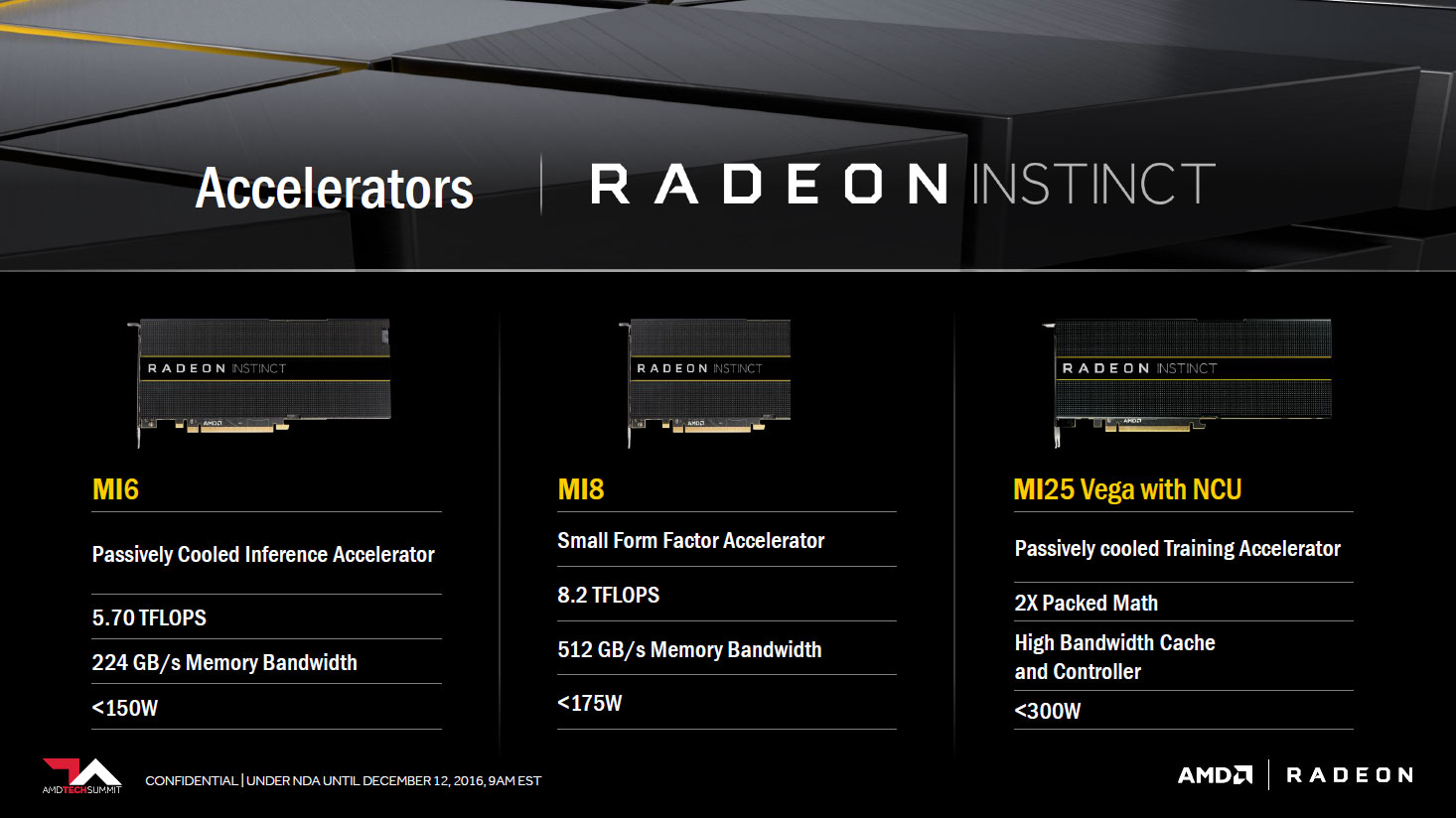

AMD unveiled three new Radeon Instinct products slated for release in 2017. The MI6 is based on the Polaris 10 architecture and is capable of up to 5.7 TFLOPS (rounded to 6 in the product name) in a sub-150W passively cooled form factor. Basically, this is an RX 480 without a fan and with slightly tweaked clocks and perhaps a few other changes. (These products are designed to go into server racks where cooling is provided by hot-swappable high-RPM fans, so the cards aren't actually passively cooled in practice; the airflow simply comes from fans that aren't part of the card itself.) Next up the performance chain is the MI8, based on the Fiji architecture and capable of 8.2 TFLOPS in a sub-175W product. As with the MI6, this is effectively an existing consumer card, in this case the R9 Nano, likely with the same 1000MHz maximum clock speed.
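As a rough sanity check on those clock speed comments, the quoted TFLOPS figures can be turned back into implied clocks. This sketch assumes the Instinct cards keep the full shader counts of their consumer siblings (2,304 for the RX 480 and 4,096 for the R9 Nano), which AMD has not confirmed here, and it uses the usual convention that one FMA counts as two floating point operations per shader per clock. The helper name `implied_clock_mhz` is mine.

```python
def implied_clock_mhz(tflops, shaders, flops_per_shader_per_clock=2):
    """Back out the clock speed implied by a peak TFLOPS rating.

    Assumes one FMA (2 FLOPs) per shader per clock, the usual
    convention for peak GPU throughput.
    """
    flops = tflops * 1e12
    clock_hz = flops / (shaders * flops_per_shader_per_clock)
    return clock_hz / 1e6


# Shader counts are assumptions carried over from the consumer cards.
mi6_clock = implied_clock_mhz(5.7, shaders=2304)  # RX 480 shader count
mi8_clock = implied_clock_mhz(8.2, shaders=4096)  # R9 Nano shader count

print(round(mi6_clock), round(mi8_clock))  # 1237 1001
```

Under those assumptions the MI8 lands almost exactly on the R9 Nano's 1000MHz, while the MI6 works out to roughly 1237MHz.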

The real star of the show is the third Instinct card, the MI25. Oddly, AMD was very careful not to explicitly call this a 25 TFLOPS product, but given the name and other information, that's precisely what it is. Unlike the other two accelerators, the MI25 will use AMD's not-yet-launched architecture, Vega. There was a lot of other information presented on Vega at the summit, but unfortunately that's under embargo for the time being. What we can say is that it uses 'packed math' that provides double the instruction throughput per clock compared to the other two cards, which is how it gets to 25 TFLOPS. It will also be a sub-300W part with a high-bandwidth cache and controller.

Arguably the more important question for all of the Radeon Instinct parts is how they will compete with Nvidia's Pascal-based offerings, the Tesla P100, P40, and P4. The P4 is designed primarily as an 'inference accelerator,' meaning the training takes place on more powerful GPU clusters while the inference can be done on the P4. This is similar to how AMD is pitching the MI6 and MI8. The P4 uses the full GP104 with 2,560 FP32 CUDA cores, the same chip as the GTX 1080 but at substantially lower clocks (810-1063MHz) and with 6GT/s GDDR5, giving a final performance of 5.4 TFLOPS (boost). The P40, meanwhile, uses GP102, with 3,840 FP32 CUDA cores at 1303-1531MHz and 7.2GT/s GDDR5, yielding up to 11.8 TFLOPS.
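The TFLOPS figures for the P4 and P40 follow directly from the core counts and boost clocks quoted above. Here is a short sketch of the arithmetic, using the standard convention that each core can retire one FMA (two floating point operations) per clock; the function name `peak_tflops` is just for illustration.

```python
def peak_tflops(cores, clock_mhz, flops_per_core_per_clock=2):
    """Peak FP32 throughput: cores x clock x 2 FLOPs (one FMA) per clock."""
    return cores * clock_mhz * 1e6 * flops_per_core_per_clock / 1e12


p4 = peak_tflops(cores=2560, clock_mhz=1063)   # Tesla P4 at boost clock
p40 = peak_tflops(cores=3840, clock_mhz=1531)  # Tesla P40 at boost clock

print(round(p4, 1), round(p40, 1))  # 5.4 11.8
```

These are theoretical peaks at boost clock; sustained throughput in a real workload will be lower.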

The P100 is the wildcard, coming in three varieties. The top model uses a mezzanine connector (NVLink) with 3,584 CUDA cores and 16GB of HBM2 memory giving 732GB/s of bandwidth, with a maximum FP16 throughput of 21.2 TFLOPS. Of note is that both GP104 and GP102 lack double-rate packed FP16 support, something only present on Nvidia's GP100. If you're doing FP32 (something not typically used in machine learning), P100 tops out at 10.6 TFLOPS. Oh, and it uses 300W. If we focus on the halo matchup of Tesla P100 against Instinct MI25, things get pretty interesting. AMD potentially wins by around 18 percent on pure TFLOPS per card, but MI25 will primarily ship in a PCIe format (unless something changes). What that means for servers and datacenters, however, matters even more.
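Both the packed-math doubling and the 18 percent figure are easy to check. The sketch below takes the P100 numbers as quoted and assumes the MI25 really is a 25 TFLOPS FP16 part, which AMD has implied through the name but not stated outright; the variable names are mine.

```python
P100_FP32_TFLOPS = 10.6
P100_FP16_TFLOPS = 21.2
MI25_FP16_TFLOPS = 25.0  # assumption: implied by the product name only

# Packed FP16 processes two half-precision values per FP32 lane,
# so the FP16 rate should be double the FP32 rate.
packed_ratio = P100_FP16_TFLOPS / P100_FP32_TFLOPS

# AMD's per-card advantage on paper, as a percentage.
amd_lead_percent = (MI25_FP16_TFLOPS / P100_FP16_TFLOPS - 1) * 100

print(packed_ratio, round(amd_lead_percent, 1))  # 2.0 17.9
```

If the same doubling applies to Vega, the MI25's FP32 rate would be around 12.5 TFLOPS, though that is my extrapolation and not a number AMD provided.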

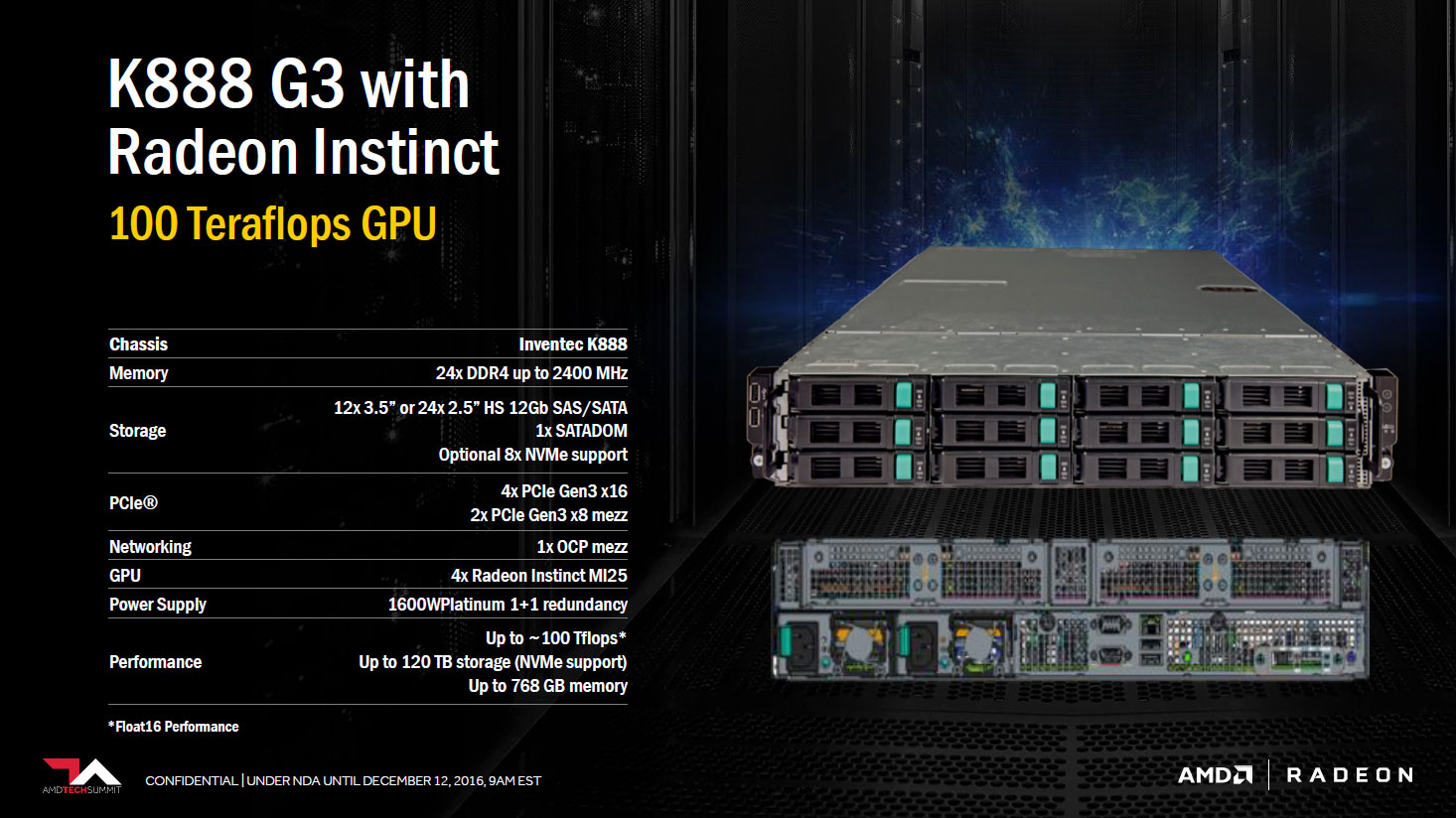

A standard 2U configuration with four MI25 cards can provide 100 TFLOPS of compute. AMD had their partner Inventec at the summit, showing off their K888 G3 in precisely that sort of configuration. But while 100 TFLOPS in 2U is cool, Inventec can do much better.

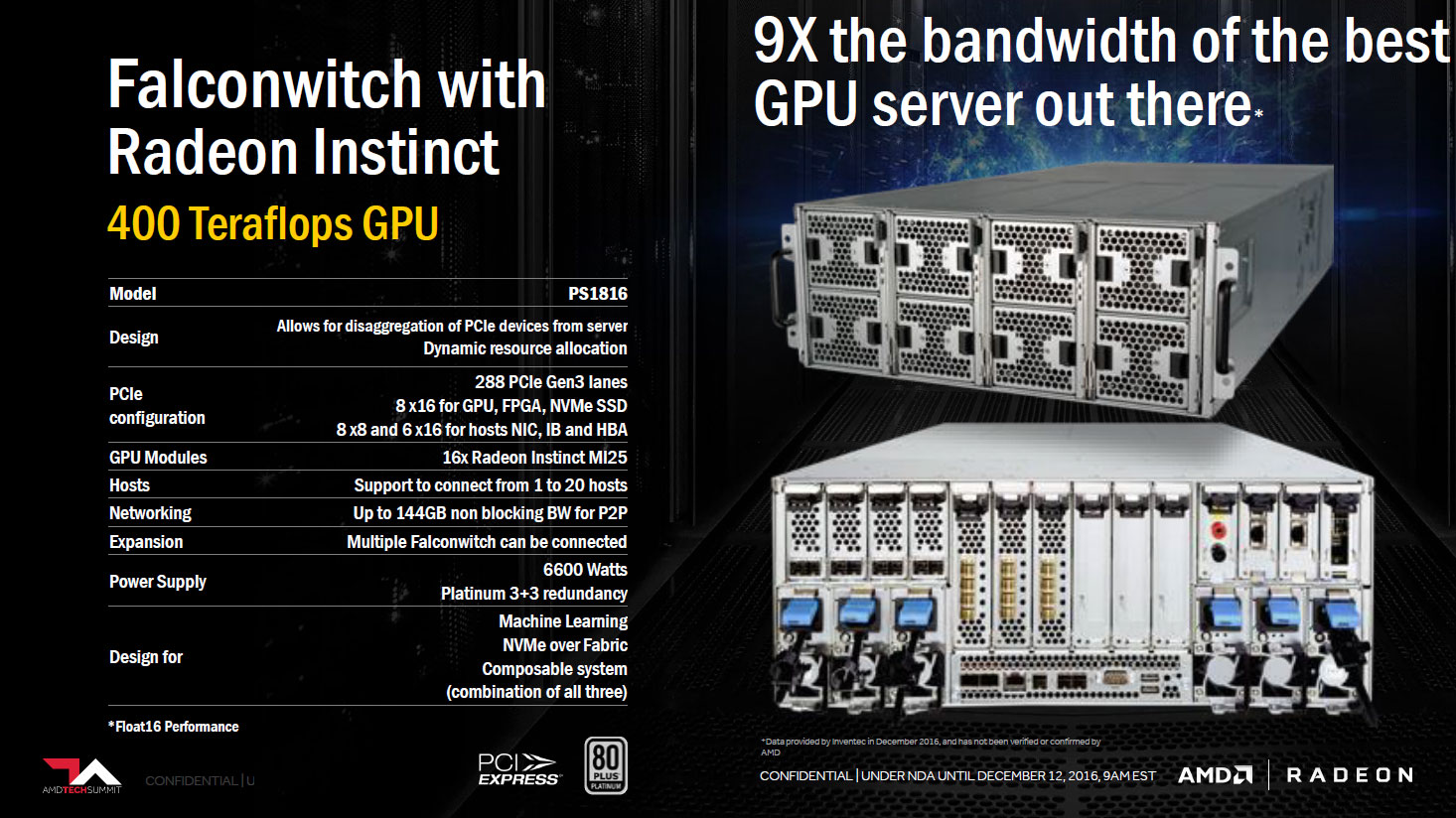

Inventec's PS1816 'Falconwitch' chassis packs up to 16 MI25 modules into a 4U chassis, yielding 400 TFLOPS of compute.

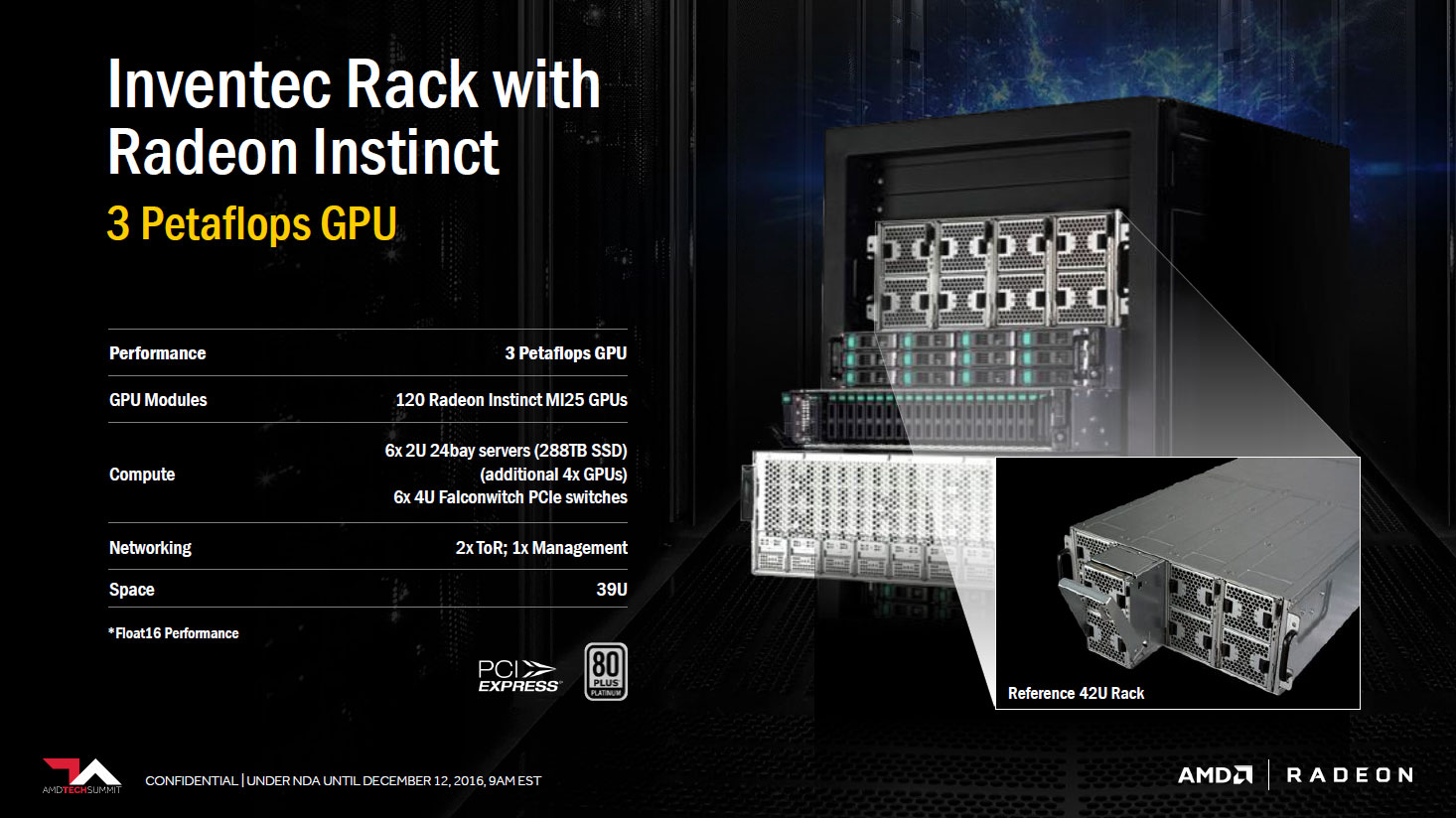

And if you want to fully populate a server rack (42U), Inventec is working on a rack that combines six Falconwitch servers with six more 2U chassis (presumably K888), giving 120 MI25 cards in a single rack, complete with networking and management features. (Note on the above slide that here AMD explicitly states 'Float16 performance.') Here's where hyperbole may have gotten the better of AMD's Raja Koduri, because he said at one point, "And the whole rack will cost less than a single DGX-1 server." To put that in perspective, we're talking about twelve servers plus RAM, with 120 MI25 GPUs (Vega), for under $129,000. Of course if Raja was talking purely about the cost of the MI25 cards, I could see them hitting this mark.
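For anyone who wants to check the rack math, here is a quick tally. It assumes the six smaller servers are the 2U, four-card K888 configuration (the article's own guess) and that each MI25 delivers 25 TFLOPS of FP16; the dictionary layout and names are purely illustrative.

```python
MI25_TFLOPS = 25  # assumption: FP16 rating implied by the product name

# Chassis mix in the proposed 42U rack. The 2U servers are presumed K888.
chassis = [
    {"name": "Falconwitch", "count": 6, "cards": 16, "height_u": 4},
    {"name": "K888 (presumed)", "count": 6, "cards": 4, "height_u": 2},
]

total_cards = sum(c["count"] * c["cards"] for c in chassis)
total_tflops = total_cards * MI25_TFLOPS
used_u = sum(c["count"] * c["height_u"] for c in chassis)

print(total_cards, total_tflops, used_u)  # 120 3000 36
```

That works out to 120 cards and 3,000 TFLOPS (3 PFLOPS) in 36U, leaving 6U of a 42U rack for the networking and management gear.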

3 PFLOPS in a rack is an amazing figure, no matter how you slice it. The fastest supercomputer in the world right now, the Sunway TaihuLight, has a LINPACK rating of 93 PFLOPS. That system uses a custom ShenWei SW26010 processor, with 40 'supernodes' composed of two server racks each, and some would argue the SW26010 isn't as flexible as the GPU clusters used in other supercomputers. Regardless, using racks of MI25 clusters could potentially cut the total size of the TaihuLight in half, or double the performance in roughly the same size (accounting for networking, storage, and other aspects).

But don't get too excited just yet. While MI25 sounds great, at present AMD is severely lacking in presence for high-end supercomputers—AMD (Radeon) graphics chips are used in just one of the top 500 supercomputers now, compared to sixty using Nvidia chips. CUDA also has a major presence in the machine learning field, where AMD is trying to gain market share with their new MIOpen and ROCm initiatives.

The Radeon Instinct image is certainly fitting, as AMD definitely has some mountains to climb in this area. Radeon Instinct at least on paper looks promising, and from the pure compute perspective it can potentially surpass Nvidia's current best. We'll find out if the hardware, software, support, and market uptake are ready in the coming months and years.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.