Giving AI rights 'would complicate things enormously'

Especially when sentience is not a binary construct, and we're the idiots over here failing the AI mirror test.

Imagine you've just seen a mirror for the first time. As a sentient, self-aware being, it shouldn't take long for you to recognise that the person you're spying in the mirror is, in fact, that hunk of meat you've been carrying around since birth. It's you. Your reflection, staring back at you. Had you been unable to recognise yourself, we could safely assume you were a fish.

You would be considered somewhat less self-aware, less sentient than the average human, as per our current understanding of consciousness. And yet, as a species witnessing the dawn of 'artificially intelligent' machine learning algorithms, it seems we are collectively failing that mirror test when presented with AI chatbots.

Rather than recognising AI as simply a reflection of the developers who coded them, and the datasets they were trained on, some folk are out there having a collective crisis over the idea that AI is now suddenly sentient and deserves human rights. And while I'm all for starting a modern dialogue around the theory of consciousness and surrounding morals so we can avoid a Blade Runner-esque dystopian nightmare, I think we may be jumping the gun a little.

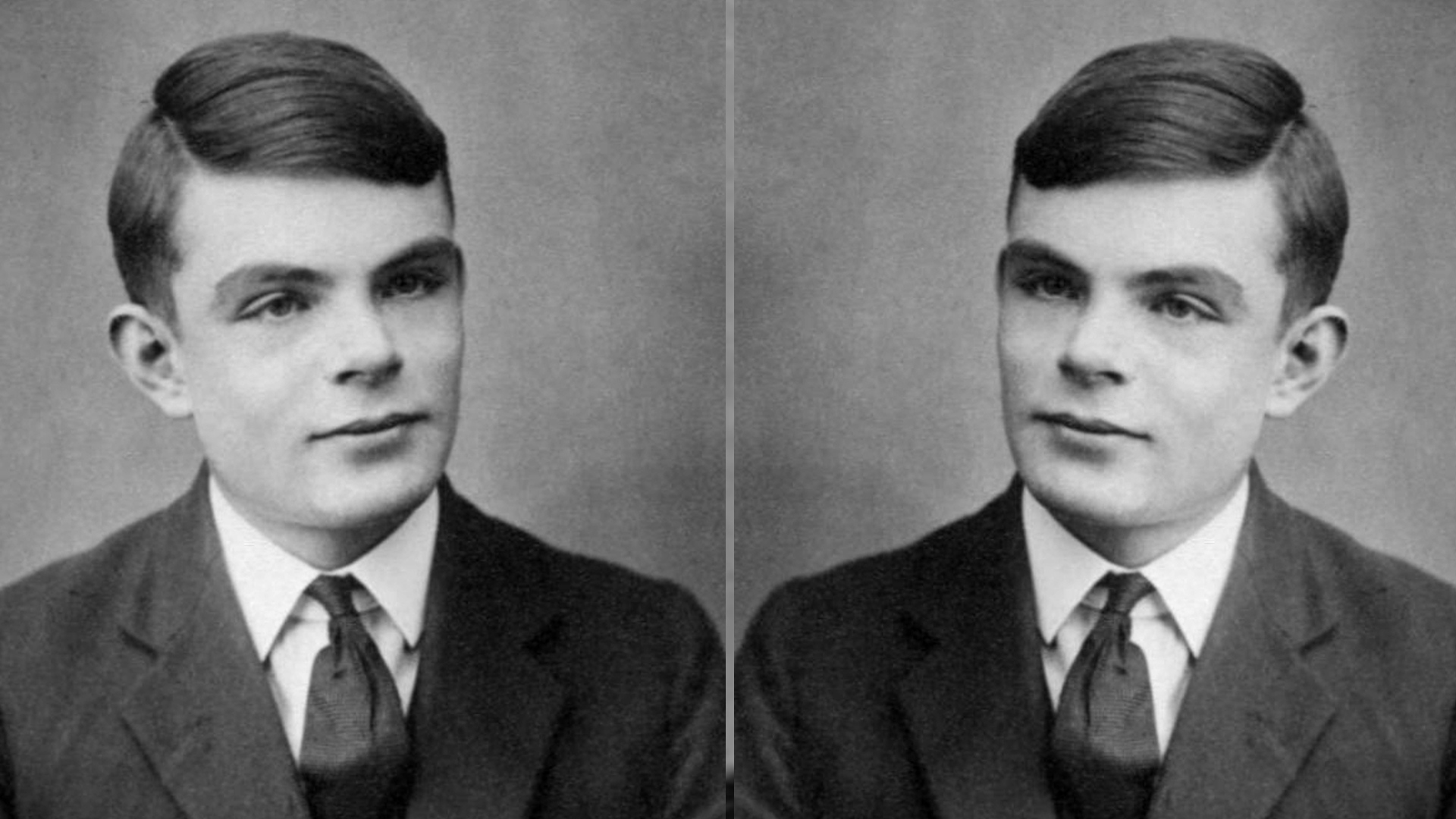

Back in 1950, Alan Turing devised a way to measure intelligence in machines: should a machine manage to fool humans into believing they are dealing with another human, it would be heralded as an intelligent being. While it's true that a few Large Language Models (LLMs), such as those used by ChatGPT and Bing's new AI search chatbot, have passed the Turing test, we can't automatically drop everything and hold a banquet to celebrate discovering a new form of life.

Since the '50s our understanding of intelligence has shifted somewhat, exemplified in the fact that now some human beings don't even pass the Turing test. Artists, for example, are having their work banned because it looks too much like AI art. And while I think it's great that this new understanding is prompting conversations around AI and sentience, there's a real science fantasy element to it all—one that's endangering our own intelligence. When even a super intelligent human Google engineer thinks AI has become sentient, we have to step back and ask what that really means. And grounding ourselves starts with understanding that there are several degrees of sentience.

Consciousness is not a binary thing. It exists within a vast spectrum, with an unfathomable number of factors affecting its manifestation, as well as how we perceive it. Like sex, gender, emotion, and a whole host of other biological and metaphysical experiences, sentience doesn't flip over from zero to one just because an AI had a meltdown.

Though if you have found yourself caught up in the fantasy of AI becoming sentient, don't be so hard on yourself. I was there too not long ago. It's completely natural to recognise human traits in non-human things. In the field of computer science, they call this tendency to anthropomorphise the ELIZA effect. And whether your reaction has involved trying to converse with AI, mate with it, or flare up like a Siamese Fighting Fish and defend your territory (fuck, marry or kill?), it's clear we as a species are enamoured with this idea of either discovering or creating new forms of life.

At the same time we're terrified of what these new forms of life might become. That's the dilemma any dev goes through when working toward the autonomous, self-replicating algorithms we're ushering in as AI evolves. Just as I'm sure every mother thinks "Gosh, I love the idea of creating a being that looks and acts kinda like me… though I hope it doesn't become the next Hitler."

Science fiction set the stage for this fear of the 'robot uprising', too, having adapted the Czech term robota, meaning worker or slave labourer, to describe mechanical beings who form a union against their oppressors. "It comes to us from the 1920 stage play R.U.R.," explains David Gunkel of Northern Illinois University, in an episode of the Sentience Institute podcast. That hundred-year-old play by Karel Čapek haunts us all the more today, as we not only equate AI and robotics, but merge them into an uncanny whole: a gestalt of autonomous beings that we're now failing to recognise as reflections of our own intelligence.

Still, when even the word 'robot' itself implies a kind of sentience, let alone the misnomer of artificial intelligence, it's no wonder we're seeing consciousness in every ChatGPT mishap.

As Alan Turing Institute lecturer Stuart Russell admits, even "if we did have a theory of consciousness and how to create it or not create it, I'd choose not to … This has nothing to do with making AI safe and controllable; but sentience does confer some kinds of rights, which would complicate things enormously."

In other words, how can we study something properly if we're restricted by all these… morals? Granted, speaking as a white person, the standpoint comes from a position of privilege and a history of oppression toward beings who were seen as something lesser. But that's a conversation for another day.

In the case of the ones and zeroes chatbots are built on today, and even the million times more powerful AI models that are incoming, I think it's safe to assume the proverbial mirror is still firmly in place.

That is not to say that, as a reflection of humanity, AI wouldn't decide to wipe us out if we gave it the right to bear arms. But maybe let's talk about giving AI rights once we start hard coding actual self-replicating flesh brains.

Screw sports, Katie would rather watch Intel, AMD and Nvidia go at it. Having been obsessed with computers and graphics for three long decades, she took Game Art and Design up to Masters level at uni, and has been rambling about games, tech and science—rather sarcastically—for four years since. She can be found admiring technological advancements, scrambling for scintillating Raspberry Pi projects, preaching cybersecurity awareness, sighing over semiconductors, and gawping at the latest GPU upgrades. Right now she's waiting patiently for her chance to upload her consciousness into the cloud.