Total War: Warhammer benchmarks strike fear into CPUs

An upcoming DX12 patch will help.

Once more unto the breach

War. War never changes. Especially when the name of your franchise is Total War. Having spanned the millennia in previous games, Total War now looks toward a more fantastical setting, courtesy of Games Workshop's Warhammer universe. The series has received plenty of critical acclaim in the past, and the Warhammer release continues that pattern, which you can read about in our full review. Total War also has a reputation for being a perpetually punishing game when it comes to your PC's hardware. With potentially thousands of units on screen at a time in larger battles, you typically need not only a fast graphics card, but a capable CPU to go with it. There's a catch with the latest installment, as developer Creative Assembly has opted to support DirectX 12 to hopefully lighten the load and enable better performance. Here's where things get a bit intertwined and confusing.

The initial retail launch of Total War: Warhammer (TWW) does not currently support DX12 mode. The engine is sort of running in DX12 mode on Windows 10: a utility like FRAPS won't show its frame rate overlay, which normally signifies the use of a low-level API like DirectX 12, Mantle, or Vulkan. However, Creative Assembly told us the current public release still does all of the real work using DirectX 11, which is necessary if you want a game to work outside of Windows 10. Creative Assembly also partnered with AMD, with TWW sporting the usual "AMD Gaming Evolved" logo and startup video. As part of this collaboration, there will be a full DX12-compliant patch in the near future; we were told the goal is to release it sometime in June, but we'll see if that happens.

AMD provided us with a preview build of the DX12 version of the game, which conveniently includes a built-in benchmark—or rather, it's only a benchmark, so you can't actually play the game. Meanwhile, the retail launch doesn't include a built-in benchmark, at least not right now, meaning there's no way to directly compare performance from the two builds. But we did run some tests with a few graphics cards on the retail build, just to spot-check performance, and we've run our usual collection of graphics cards through the DX12 beta build. Frame rates were captured using the PresentMon utility, an alternative to FRAPS that works with low-level APIs like DX12.
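PresentMon logs one row per presented frame to a CSV file. As a rough sketch of how average and minimum frame rates can be derived from such a log, the Python below reads the `MsBetweenPresents` column (the per-frame interval PresentMon records) and computes average fps as total frames divided by total elapsed time. The exact minimum-fps calculation isn't described here, so treating it as the 99th-percentile frame time is an assumption, one common convention rather than necessarily the method behind these charts. The function names and the tiny sample log are hypothetical.

```python
import csv
import io
import math


def load_frame_times_ms(csv_text: str, column: str = "MsBetweenPresents") -> list[float]:
    """Return per-frame intervals in milliseconds from PresentMon-style CSV text."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [float(row[column]) for row in reader]


def summarize_fps(frame_times_ms: list[float], percentile: float = 99.0) -> dict[str, float]:
    """Average fps over the whole run plus a percentile-based 'minimum' fps.

    Using a frame-time percentile for the minimum is an assumption for illustration.
    """
    if not frame_times_ms:
        raise ValueError("no frames captured")
    # Average fps = frames / elapsed seconds. Averaging per-frame fps values
    # instead would overweight the fast frames and overstate performance.
    elapsed_s = sum(frame_times_ms) / 1000.0
    avg_fps = len(frame_times_ms) / elapsed_s
    # Nearest-rank percentile of frame time: the slow tail sets the "minimum".
    ordered = sorted(frame_times_ms)
    rank = max(1, math.ceil(percentile / 100.0 * len(ordered)))
    slow_frame_ms = ordered[rank - 1]
    return {"avg_fps": avg_fps, "min_fps": 1000.0 / slow_frame_ms}


if __name__ == "__main__":
    # Hand-made log; a real PresentMon CSV has many more columns,
    # which DictReader simply carries along and this code ignores.
    sample = (
        "Application,MsBetweenPresents\n"
        "game.exe,16.0\ngame.exe,20.0\ngame.exe,25.0\ngame.exe,20.0\n"
    )
    print(summarize_fps(load_frame_times_ms(sample)))
```

Computing the average from total time rather than averaging instantaneous fps values keeps a handful of very fast frames from masking the slow ones that players actually notice as stutter.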

CPU: Intel Core i7-5930K @ 4.2GHz

CPU: Intel Core i5-4690K @ 3.9GHz (simulated)

CPU: Intel Core i3-4360 @ 3.7GHz (simulated)

Mobo: Gigabyte GA-X99-UD4

RAM: G.Skill Ripjaws 16GB DDR4-2666

Storage: Samsung 850 EVO 2TB

PSU: EVGA SuperNOVA 1300 G2

CPU cooler: Cooler Master Nepton 280L

Case: Cooler Master CM Storm Trooper

OS: Windows 10 Pro 64-bit

Drivers: AMD Crimson 16.5.3, Nvidia 368.22 (368.16 for GTX 1080)

As usual, then, that brings us to the question of what sort of hardware you'll need to play TWW. Before we get to the benchmarks, there are a few items to discuss. First, TWW is a strategy game, which means it's far more forgiving of low frame rates than games like Doom or Rise of the Tomb Raider. Second, as noted, this is an AMD Gaming Evolved title. I don't personally believe a developer would intentionally sabotage performance on one set of hardware, but I also don't think any game stamped with an AMD or Nvidia logo is going to be completely unbiased; at best, the developer received a bit of help from the vendor, while at worst it may heavily optimize for one architecture and not for others. Finally, DX12 inherently gives developers lower-level access to the hardware, which makes vendor-specific optimizations (or game design around certain hardware features) even more likely. There's a lot of talk on the Internet about how AMD's architecture is "better suited" to DX12 than Nvidia's, but the number of DX12-enabled titles remains quite limited, and drawing conclusions from a few games, most of which carry either an AMD or Nvidia logo, seems premature. But let's see how TWW performs.

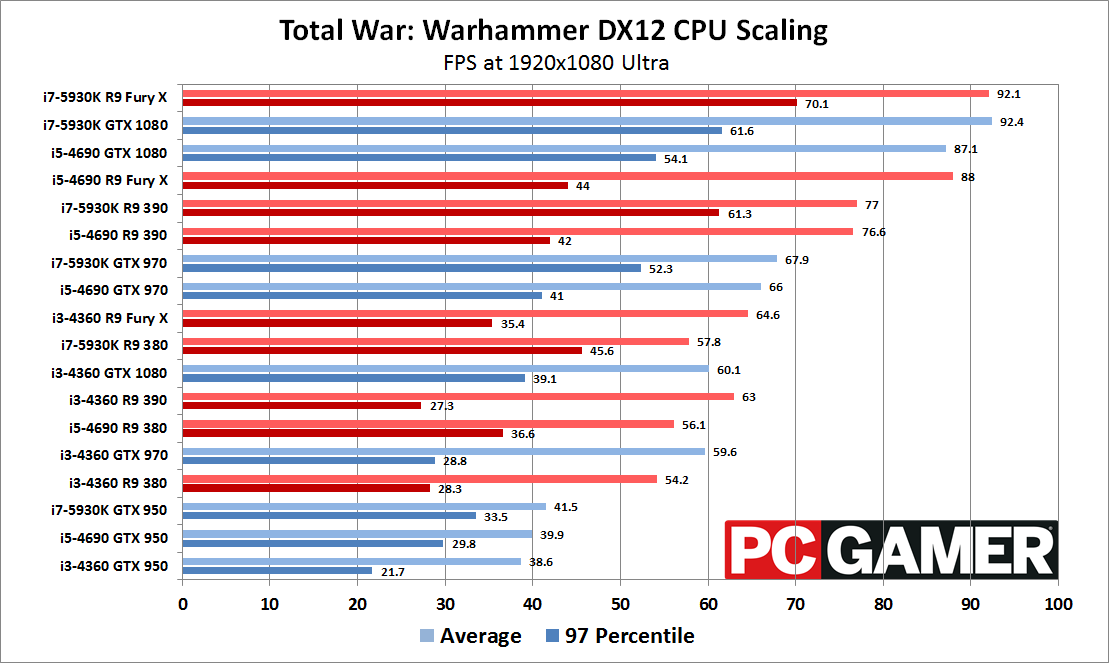

We picked fourteen cards, ranging from Nvidia's relatively affordable GTX 950 up through the not-quite-released GTX 1080, and AMD's R9 380 through R9 Fury X. We also checked dual-GPU performance with SLI GTX 970 and CrossFire R9 Nano. Our test bed hardware is the same as usual, an overclocked Core i7-5930K running at 4.2GHz. While it's not fully accurate (due to cache and platform differences), we also simulated a Core i3-4360 by disabling all but two of our CPU's cores and running it at 3.7GHz, and we simulated a stock-clocked i5-4690K by disabling two cores and Hyper-Threading and running the CPU at 3.9GHz. For CPU scaling, we used the GTX 1080, 970, and 950 along with AMD's R9 Fury X, 390, and 380.

We tested at five settings for this article: 1920x1080 Medium, High, and Ultra, along with 2560x1440 and 3840x2160 at Ultra. For CPU scaling, we confined testing to just 1920x1080 at Medium and Ultra quality. All of these presets control a variety of options, including anti-aliasing, but for ease of comparison we didn't adjust anything (other than ensuring V-Sync was turned off). We'll include image quality screenshots below for those who are interested. Let's begin by looking at the graphics cards.

Please note that our DX11 benchmarks are taken from a battle, where we repeat the same 60-second sequence for each card/CPU tested. This is a large-scale battle, and the position of the camera and what's on screen at any given time matter. Smaller battles and other parts of the game could very well post better frame rates, but we're looking at relative performance either way: if one GPU/CPU is faster in our test sequence, it should also be faster elsewhere.


Total GPU Hammer

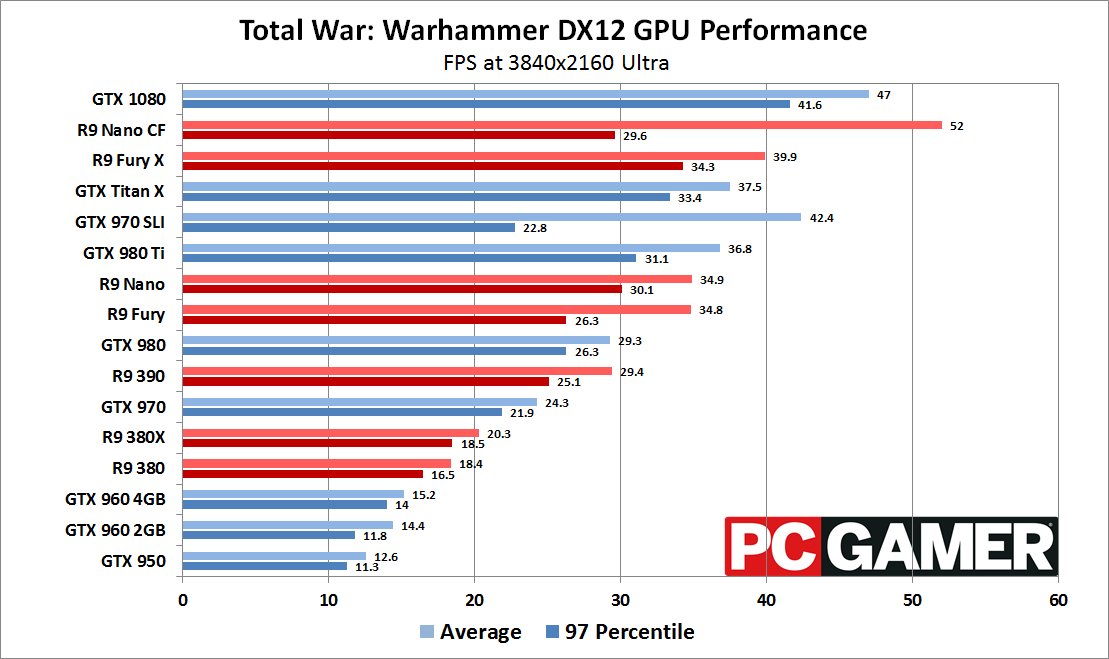

Starting at the top, if you're looking to run Total Warhammer at 4K Ultra, you're going to need some impressive hardware, which is typically the case for most games at these settings. Using the current retail build (as of 5/24/2016), only a single GPU manages to break 30 fps, and that's the GTX 1080 Founders Edition—a $699 card you can't buy until Friday. Ouch.

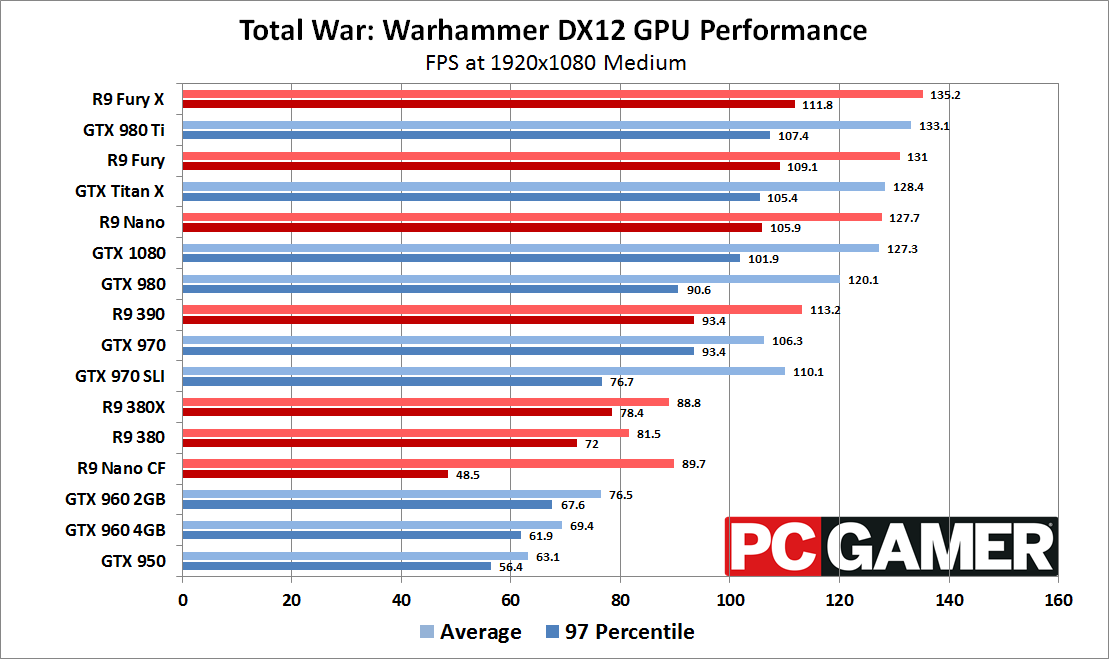

Okay, that's not quite the only card to break 30 fps, as the Titan X and 980 Ti barely manage to do so as well (we measured 31.4 fps on the Titan X). For AMD, even their fastest card, the R9 Fury X, only nets 27 fps. But this is all on the DX11 version of the game, running a demanding sequence; what happens with DX12? It's an entirely different landscape.

GTX 1080 maintains the top slot by virtue of its higher minimum frame rates, though the CrossFire R9 Nano has slightly faster average frame rates. The Fury X, Nano, and vanilla R9 Fury all break the 30 fps mark, along with the 980 Ti, and Titan X. TWW also supports explicit multi-GPU rendering in DX12 mode—SLI/CF aren't supported in DX11 mode, which is why we didn't include SLI/CF in that chart—so you can use two AMD or Nvidia GPUs together. At 4K Ultra, the second GPU definitely helps, though minimum frame rates don't really improve at all. Also note that TWW does not have the same multi-adapter support as Ashes of the Singularity, so the GPUs need to be matched (at least, that's what we're told; we didn't try mixing and matching GPUs).

And if you're a stickler for getting smooth 60+ fps performance at 4K in all areas, you'll still need the DX12 patch, and even then you'll have to buy a GTX 1080 and drop to High or Medium quality to get there. The R9 Fury X falls just short at 4K Medium, with 57 fps.

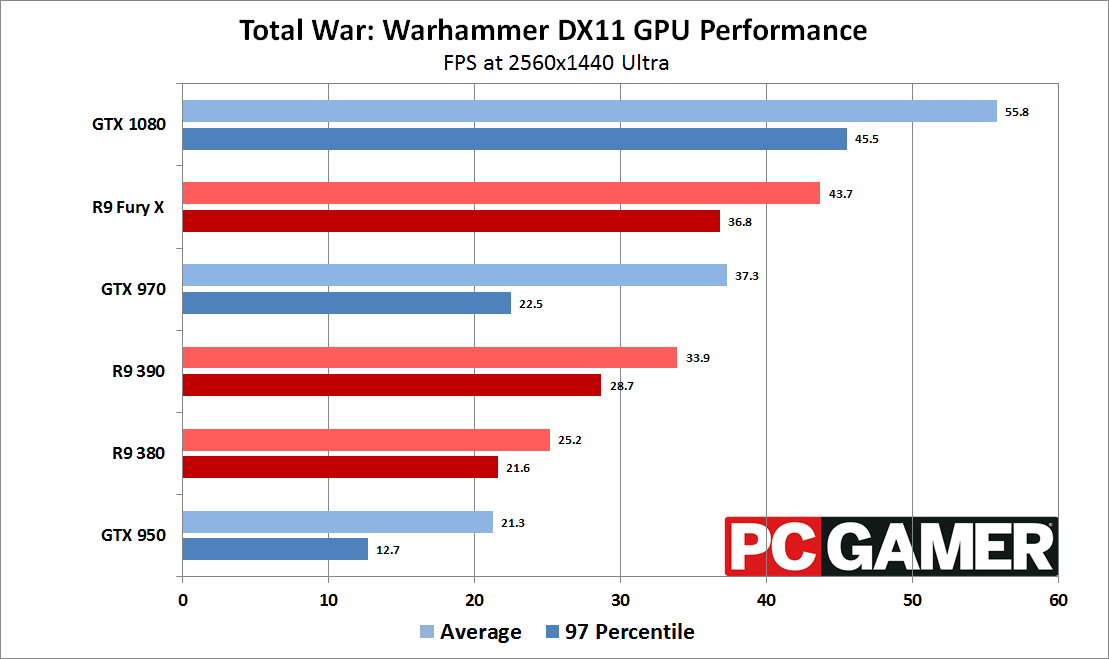

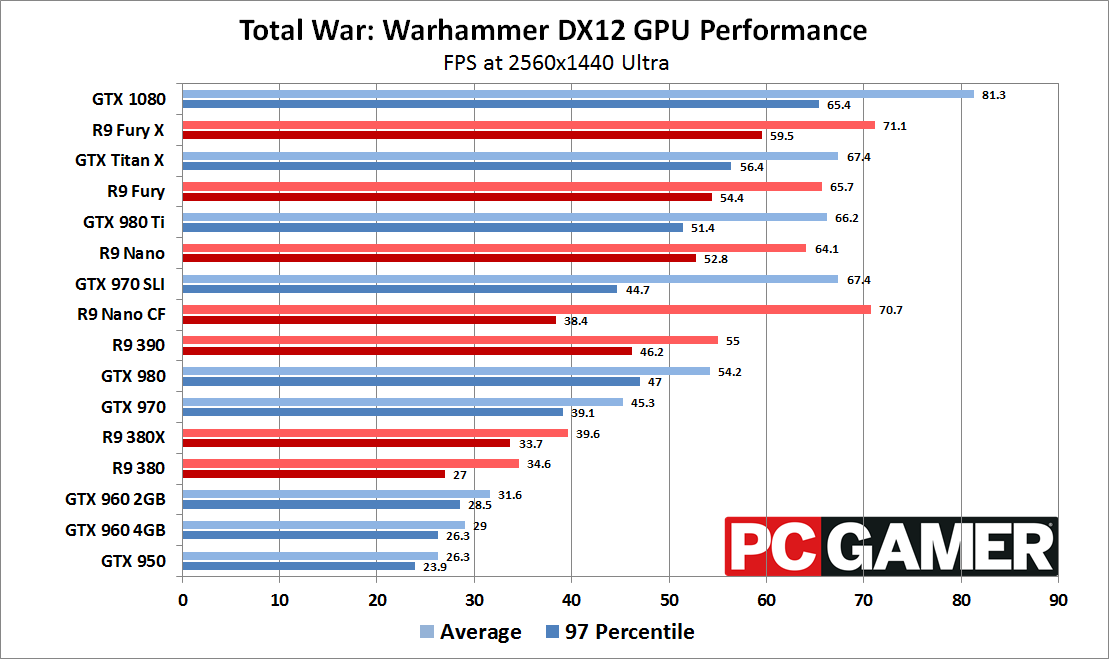

4K Ultra is a bit much for most users, while 1440p tends to be far more approachable. The current DX11 retail release still can't breach 60 fps (in our test sequence) with a GTX 1080, but the R9 390, GTX 970, and faster cards can at least reach a relatively smooth 30+ fps. The 980 Ti/Titan X sit around the 50 fps mark, if you're wondering, and the fastest cards bunching up like this indicates many of our GPUs are hitting CPU bottlenecks. We'll get into that more below, but this is basically a poster child for a game that benefits from DX12 optimizations.

With DX12 in play, things improve on all the cards. Again, please remember that the benchmark sequence for DX11 isn't the same, so we can't say for certain that DX12 is 50-75 percent faster. Nearly everything breaks the 30 fps mark, though the GTX 950 still comes up short. AMD cards benefit more in our limited DX12 testing, with the R9 390, for example, beating both the GTX 970 and 980, whereas it's basically tied with the 970 in the retail version of the game. At the lower end of the spectrum, AMD's R9 380 still easily beats the GTX 950 and 960.

Something else to point out is that the 4GB GTX 960 doesn't seem to benefit from its added VRAM compared to the 2GB 960, which is a bit surprising. There are reasons for this, however. Both are EVGA cards, but the 2GB 960 is an SSC model with an ACX 2.0 cooler, and it's clocked five percent higher than the 4GB 960. The 4GB card, meanwhile, is a compact SC model with a single fan, and EVGA rates it at 128W TDP vs. 160W for the SSC. At 4K Ultra, the added VRAM helped a little bit, but at all of our other test settings, the 2GB card wins out.

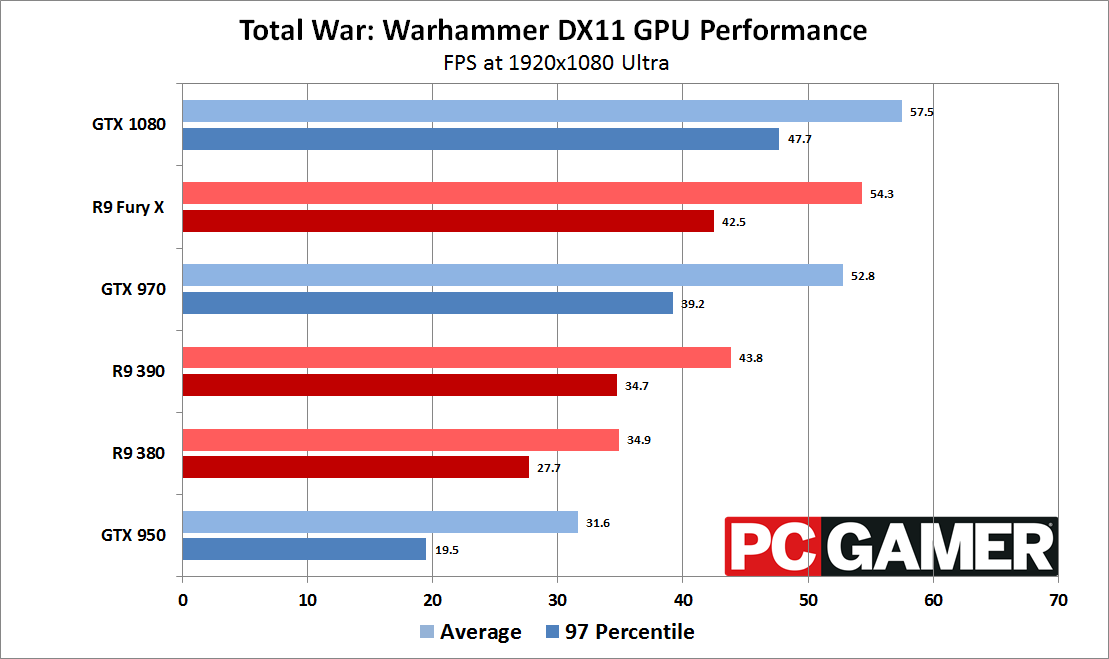

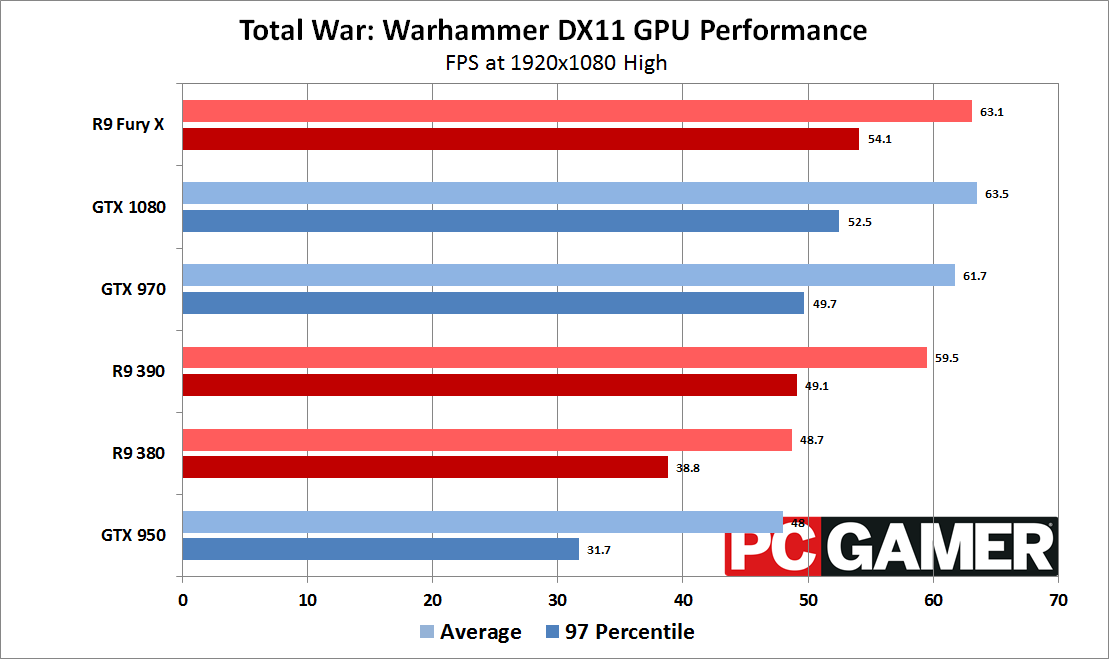

Leaving behind the higher resolution realms, 1080p Ultra finally proves playable on all the test GPUs—though the GTX 950 barely breaks 30 fps in DX11 right now. It's also concerning to see the retail release underperforming on AMD GPUs—that's really the only way to describe it. The R9 380 beats the GTX 950 by about 10 percent, but in our overall GPU rankings, the gap averages 45 percent, so this is well under par. Likewise, the GTX 970 is actually beating the 390 by 20 percent, where normally we see the 390 leading by 20 percent. Fury X fares better, however, trailing the Titan X and 980 Ti by just five percent. So if AMD helped Creative Assembly with Total Warhammer, it appears the lion's share of their work went to the DX12 path.

DirectX 12 shifts everything around, though here we start to encounter other bottlenecks. The GTX 1080, for example, falls behind several cards (thanks to a slightly lower-than-expected minimum fps result), so TWW seems to be hitting CPU limits with DX12. The CrossFire/SLI results back up that line of thinking, with 970 SLI gaining only slightly on a single 970, while Nano CrossFire is well off the pace set by a single Nano. Everything else is more or less in line with what we'd expect, but clearly there are other elements in play beyond pure GPU performance. Still, for this sort of game, everything is doing reasonably well at 1080p Ultra.

If your hardware is slower than our weakest GPUs—and let's be honest, the GTX 950 isn't exactly a weak-sauce graphics chip, though you can find the cards for as little as $120 after rebate these days—you might need to aim even lower. At 1080p High, the GTX 950 is pulling 48 fps, which means things like a GTX 760 and HD 7850 (R7 265) and above should also be playable. And once the DX12 patch goes public, nearly all of the cards we tested move into the 60+ fps range. Note that SLI and CrossFire both reduce performance now, indicating CPU limits or other bottlenecks, but a pair of older GPUs might still benefit.

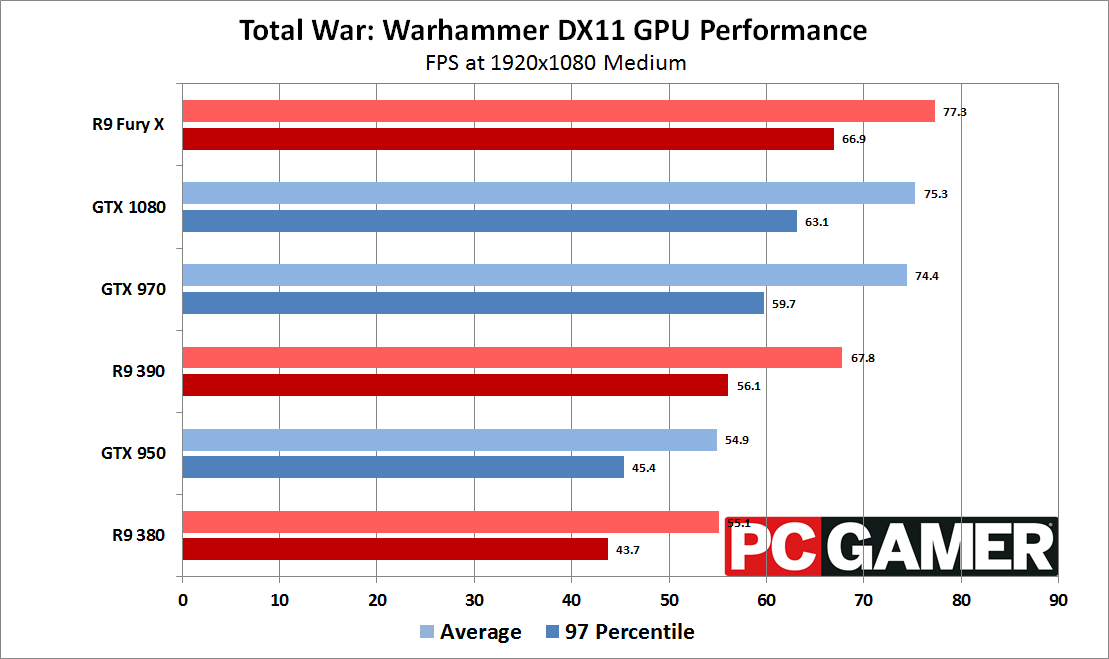

Finally, at 1080p Medium quality, you can get an additional 10-20 percent performance boost over High, and the game still looks pretty good. The number of character models you'll see in each formation drops at both the High and Medium settings, and Low takes it a step further if you really need it; we measured 97 fps on a GTX 960 at Low, for example, and there are still many knobs you could turn down. The DX11 retail release, meanwhile, stalls at around 75 fps, even with a high-end GPU and CPU running the show. That makes us wonder what might happen if you didn't have a 6-core overclocked processor.

Your CPU is totally getting hammered

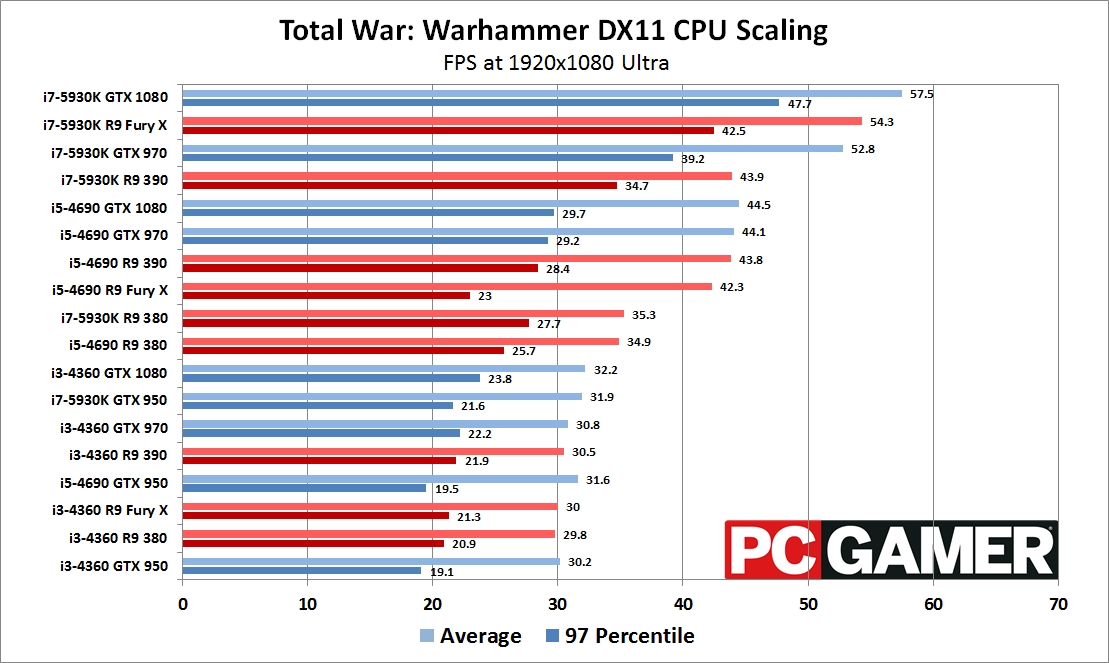

We saw indications in our GPU testing that processor performance can be a concern, which is pretty common with the Total War series. With hundreds and potentially even thousands of units visible on the screen at once, your CPU gets a real workout—particularly in the current retail release prior to DX12 getting patched in. Just how bad is it?

While we'd love to throw in more CPUs, we've opted to stick with Intel for ease of testing, simulating the slower CPUs rather than swapping platforms. A dual-core with Hyper-Threading or a quad-core without it, emulated on the X99 platform, isn't quite the same as an actual Haswell (or Skylake) Core i3/i5 part, but we should at least be able to find out whether your CPU matters. In Doom, it wasn't really a critical element; Warhammer, on the other hand…well, take a look:

In the retail DX11 game, using a demanding battle, the only graphics card that doesn't take a substantial hit to performance at 1080p Ultra is the GTX 950, and it still loses five percent on average frame rate, with a slightly higher 10 percent drop to minimum fps. The R9 380 falls by 15 percent, the 390 drops 30 percent, and the 970, Fury X, and GTX 1080 are all over 40 percent slower. That's going from the overclocked i7-5930K to the i3-4360, however; if you have a decent quad-core i5 processor, even running stock clocks (3.9GHz), most GPUs will be pretty close to maximum performance—but the 970 still improves by 20 percent moving from the i5 to the i7, while the GTX 1080 and Fury X improve by nearly 30 percent. If you have a high-end GPU, you'll basically want an overclocked (K-model) Core i5 at the very least to reach maximum performance in TWW.

The DX12 benchmark beta does better, with the 380 and 950 dropping less than 10 percent going from the i7 to the i3; faster GPUs like the 970 and 390 still lose about 15 percent performance, while the GTX 1080 and Fury X lose 35 and 30 percent, respectively. As before, that's going from 6-core to 2-core, plus a 12 percent reduction in CPU clocks. The Core i5 is far closer this round, with the biggest single improvement being on the GTX 1080, a six percent increase in average frame rates. Given the CPU clock is nearly eight percent higher on the i7-5930K OC, it looks like most of the difference is due to clock speeds and not core counts. Minimum frame rates, however, still show sizable improvements, often 50 percent or more, so if you value a stutter-free strategy gaming experience, even with the DX12 patch you may want to run a Core i7 processor with TWW.
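The clock-speed arithmetic behind that reasoning is easy to check. The Python sketch below uses only the clock speeds from our test setup; `pct_change` and `mostly_clock_bound` are hypothetical helpers, and the one-point slack in the heuristic is an arbitrary assumption rather than an established rule. It also ignores core count, cache, and platform differences entirely.

```python
def pct_change(new: float, old: float) -> float:
    """Percent change when going from `old` to `new` (negative means a drop)."""
    return (new / old - 1.0) * 100.0


# Clock speeds from the test setup described in this article (GHz).
I7_5930K_OC = 4.2
I5_4690K_SIM = 3.9
I3_4360_SIM = 3.7

clock_drop_i7_to_i3 = -pct_change(I3_4360_SIM, I7_5930K_OC)  # about 11.9 percent
clock_gain_i5_to_i7 = pct_change(I7_5930K_OC, I5_4690K_SIM)  # about 7.7 percent


def mostly_clock_bound(fps_gain_pct: float, clock_gain_pct: float, slack_pct: float = 1.0) -> bool:
    """Hypothetical heuristic: if the fps gain is no larger than the clock gain
    (plus a little slack), the extra cores aren't adding much."""
    return fps_gain_pct <= clock_gain_pct + slack_pct


# GTX 1080 average fps gain going from the i5 to the i7 in the DX12 beta: 6 percent.
print(round(clock_drop_i7_to_i3, 1), round(clock_gain_i5_to_i7, 1),
      mostly_clock_bound(6.0, clock_gain_i5_to_i7))
```

A six percent frame rate gain against a roughly 7.7 percent clock increase fits the "clock speed, not core count" reading, while the much larger jumps in minimum fps would fail this check and point to the extra cores doing real work.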

If you were hoping Medium quality would lessen the CPU requirements, that doesn't really seem to be the case. The retail version slams into a pretty hard limit of around 35 fps with the i3-4360, even with the fastest GPUs available. The i5-4690K is able to hit nearly 60 fps, with only the GTX 950 falling short, so that's about 60-70 percent better performance thanks to a small increase in clock speed plus twice the number of physical CPU cores. There's a limit to scaling with cores, however, as the 6-core i7-5930K adds another 30-35 percent performance, but that's with a 50 percent increase in core count plus a clock speed boost. Again, Total Warhammer is definitely a game that can benefit from anything that will reduce the CPU load.
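To put those scaling numbers in context, the sketch below compares the observed gains with an idealized gain that assumes frame rate scales perfectly with physical core count times clock speed. That model is a deliberate simplification and an assumption: it ignores Hyper-Threading (the i3-4360 still runs four threads), cache, IPC, and the game's own threading limits. The observed ranges are the 60-70 and 30-35 percent figures from the retail Medium-quality results; the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CpuConfig:
    name: str
    cores: int   # physical cores; Hyper-Threading is ignored in this model
    ghz: float


def ideal_gain_pct(slow: CpuConfig, fast: CpuConfig) -> float:
    """Idealized gain if fps scaled perfectly with physical cores x clock."""
    return ((fast.cores * fast.ghz) / (slow.cores * slow.ghz) - 1.0) * 100.0


def realized_share(observed_gain_pct: float, slow: CpuConfig, fast: CpuConfig) -> float:
    """Fraction of the idealized gain that was actually observed."""
    return observed_gain_pct / ideal_gain_pct(slow, fast)


i3 = CpuConfig("i3-4360 (simulated)", 2, 3.7)
i5 = CpuConfig("i5-4690K (simulated)", 4, 3.9)
i7 = CpuConfig("i7-5930K OC", 6, 4.2)

# Observed gain ranges (percent) quoted for the retail build at 1080p Medium.
for slow, fast, observed in ((i3, i5, (60, 70)), (i5, i7, (30, 35))):
    ideal = ideal_gain_pct(slow, fast)
    shares = [round(realized_share(g, slow, fast), 2) for g in observed]
    print(f"{slow.name} -> {fast.name}: ideal +{ideal:.1f}%, realized share {shares}")
```

Under this crude model, both steps realize only around half to two-thirds of the idealized gain, which is consistent with the point that core scaling has limits in TWW.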

Shifting to the DX12 benchmark, the R9 380 and GTX 950 hardly benefit in average frame rates, though minimums are still far lower with the Core i3 processor. The Core i5 again gets you most of the way there, at least for single GPUs, improving performance on the 390 and 970 by around 40 percent, while the GTX 1080 gains 55 percent and the Fury X goes up 65 percent. The Core i7-5930K improves things further, adding 13 percent on the GTX 1080, with minimums on all of the cards still showing anywhere from 35-75 percent increases.

Can't touch this

In summary then, much like the game itself, in terms of hardware Total War: Warhammer is a rehash of what we've seen in previous Total War games, but DX12 adds an interesting twist. Unfortunately, just as we're still waiting to see the public release of Doom's Vulkan API support, right now all of the DX12 testing is still in beta. That should change in the coming weeks, and long-term TWW and the Total War series in general stand to benefit greatly from the move. But until all of this goes public and we can run apples-to-apples benchmarks using both DX12 and DX11, what we're left with is the promise of good things to come.

For those playing the game in its current state, just know this: you will want a beefy CPU, and even then, consistently hitting 60 fps may not be possible without a healthy overclock. Our 4.2GHz i7-5930K comes up short at 1080p Ultra, but a Skylake i7-6700K at 4.7GHz might get there. Or you can wait for the DX12 patch, which at present looks to improve frame rates by 50 percent or more.


Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.